Table of Contents

- In this episode

- FAQ & HowTo Rich results going away for many sites

- TW-BERT

- “From publishers you follow” in the Search results

- Google says removing old articles will not improve quality

- More SEO info

- AI News

- SGE

- ChatGPT

- More AI Info

- Great articles published this week

- Local SEO

- SEO Jobs

- Subscriber Content

- The Search Bar is gearing up

SEO and AI News - A lot of important things happened this week | Episode 299. Aug 14, 2023

Last week’s episode (298) See all episodes Subscriber Content

In this episode we discuss big changes to rich results, insights into Google’s TW-BERT framework, will removing old content help improve quality, new features like content recommendations from publishers you follow, ChatGPT custom instructions update, traffic drops seen starting May 25th, and more.

Subscriber content

In the subscriber content I go into great detail on a site review that I am currently doing. The site has a catastrophic drop in traffic that starts May 25, 2023. It looks like quite a few sites were impacted on this day. My initial hypothesis was that this site was affected by the helpful content system. However, it looks like something more is happening. I used Claude to compare content across Google’s quality questions and an obvious pattern emerged.

We also talk about whether “featured on” sections on your site are a good idea.

Not able to subscribe? You’ll still learn lots each week in newsletter. Sign up here so I can send you an email each week when it’s ready:

FAQ & HowTo Rich results going away for many sites

Google has announced that they are reducing the visibility of FAQ rich results and limiting How-To rich results.

Here are the important points:

- FAQ rich results will be limited to authoritative government and health sites, reduced for other sites

- How-To rich results will only show for desktop searches, not mobile

- Changes will be visible in Search Console reporting data

- Rolls out globally over the next week

- Structured data does not need to be removed proactively

- Mobile sites still need How-To markup for desktop rich results

- Not considered a core search ranking change

This means that many sites that have spent countless hours adding schema will no longer have FAQ rich results in the SERPS, or How-To results on mobile.

Google wasn't messing around.

That's a decline for how-to rich results on mobile down to zero. And as promised by Google, desktop how-to rich results remain.

FAQ rich results are on the decline too. Check GSC search appearance & read Google's announcement if you missed it. pic.twitter.com/qMY8qpY9Gi

— Brodie Clark (@brodieseo) August 10, 2023

Yep 😢

And I just discovered a single page was bringing in 72% of my FAQ clicks. I'll monitor the overall clicks to that page for the next week. pic.twitter.com/VUD2IQ7Clu

— Tony Hill (@tonythill) August 9, 2023

And here we go. Isolating how-to snippets in GSC on mobile yields a massive drop in impressions and clicks (as how-to snippets were removed as of yesterday from the mobile SERPs). Will be interesting to see the change in CTR for sites that heavily used how-to markup. pic.twitter.com/U1pcgPbhLS

— Glenn Gabe (@glenngabe) August 9, 2023

I suspect that once SGE answers are the norm, there will be little need for FAQ content as this information should be in the SGE result.

I’m so angry. This is clearly a move for SGE to provide answers instead.

— Abi White (@AbiWhite7) August 8, 2023

Clearly making way for SGE.

— Jonno 🇬🇧 (@Sitegains) August 8, 2023

I also suspect (although I can’t prove this) that Google encouraged FAQ schema markup so that they could use this information to learn how to extract the important information without the need of schema.

Olaf Kopp has an interesting thread that supports this idea.

😵Significantly less FAQ Rich Snippets in the SERPs☠️

Google has announced that it will deliver significantly fewer FAQ rich snippets in the SERPs. Some SEOs like @brodieseo @Kevin_Indig have shared their guesses as to Google's motives.

Here are my thoughts on it 👇🧵 pic.twitter.com/DMAbZqT81U— ✌️Oᒪᗩᖴ KOᑭᑭ 🔥 (@Olaf_Kopp) August 9, 2023

Here is something I did not know. PAA’s are powered by the same system as featured snippets. FAQ’s are not. Rich Tatum shared with me in the Search Bar that Brodie Clark had written a good article on “the New Featured PAA Snippet“.

TW-BERT

Roger Montii wrote an excellent article on this new Google framework that helps them better understand user intent and then match more relevant documents.

This looks like something we should be paying attention to.

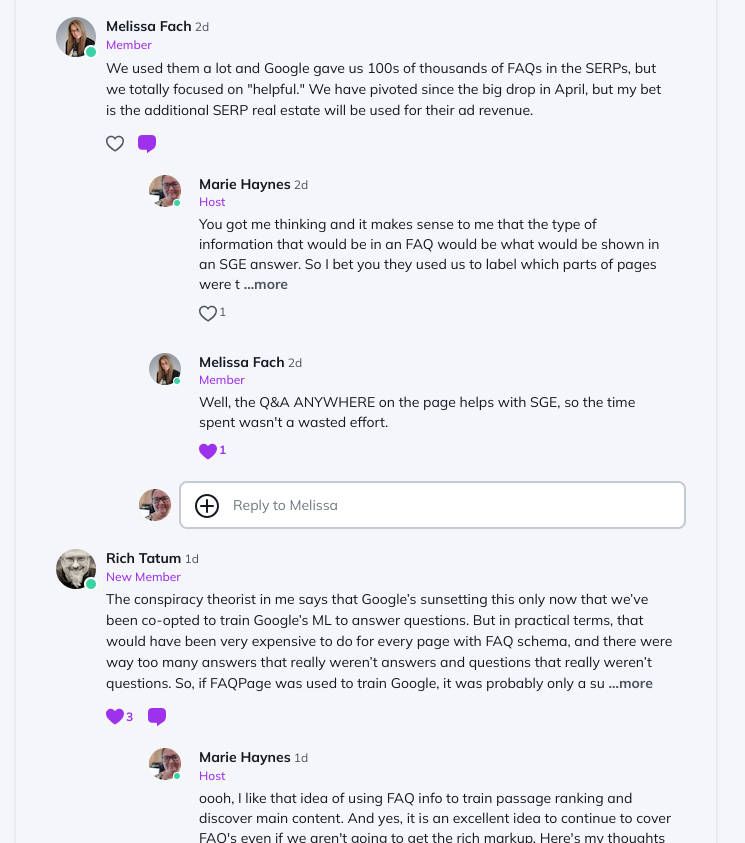

Roger says, “One of the advantages of TW-BERT is that it can be inserted straight into the current information retrieval ranking process, like a drop-in component.” It can be implemented by Google quite easily. Roger speculates that perhaps Google’s deployment of TW-BERT is responsible for the wild ongoing SERP turbulence the SEO weather tools are seeing.

This picture from Algoroo shows that we have seen wild ongoing fluctuations since May. If this turbulence is because of TW-BERT, it would mean that what we are seeing are slight shifts in keyword rankings because Google changed its understanding of intent for those queries.

In the Subscriber content I share about a site that may have been impacted by a significant change to Google’s algorithms starting May 25. I initially thought the site was suffering from being classified by the helpful content system, but I think it’s possible that Google shifting to better understand intent and relevance would be an excellent explanation for the losses they are seeing.

If your rankings have been slipping, it may be because Google got better at understanding what it is that a query is trying to accomplish and determining which content is more likely to be seen as relevant and helpful to a searcher.

“From publishers you follow” in the Search results

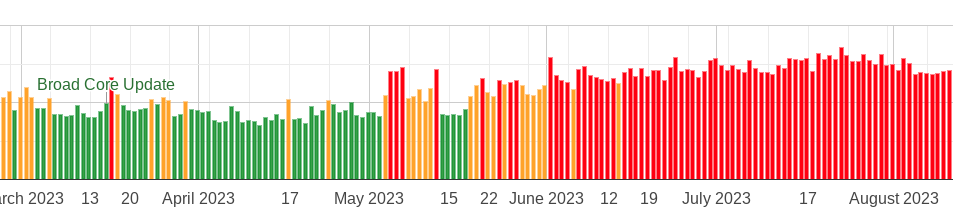

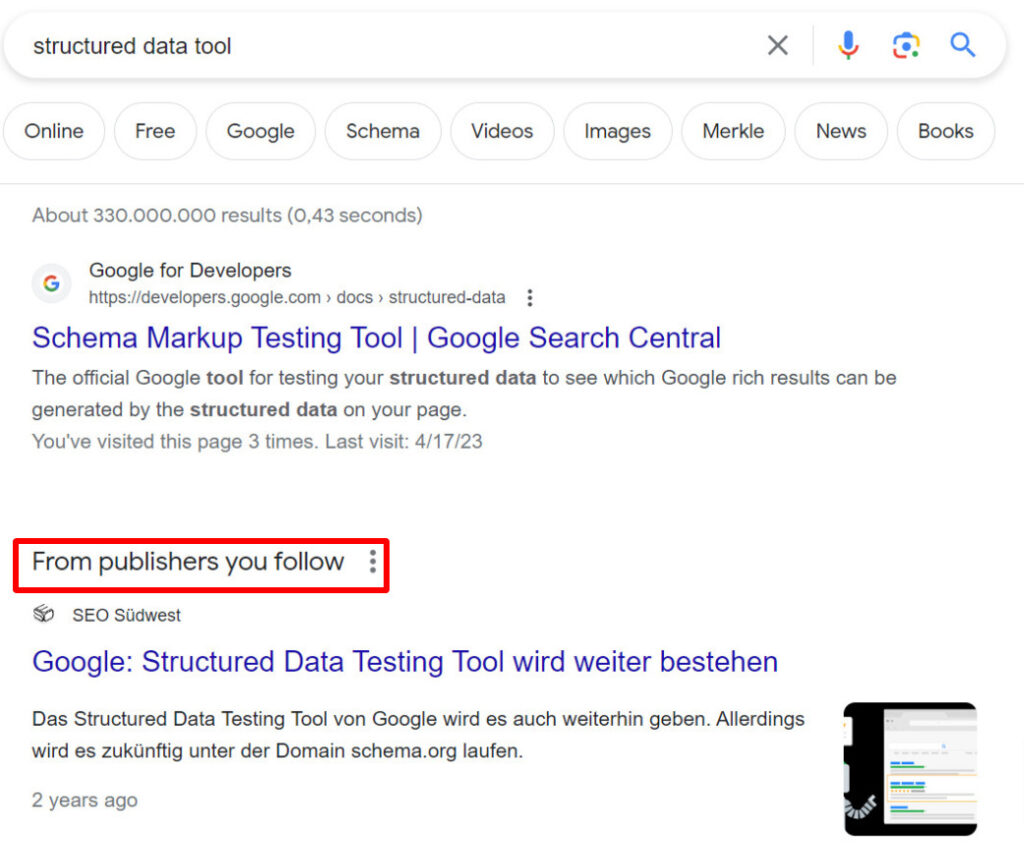

A few episodes ago we discussed people seeing an “updates for you” section pinned to the top of their search results. I anticipated that eventually sites you follow may be shown in here. Sure enough, folks are seeing a new feature that looks like this (shared by SEO Sudwest):

Shared by Glenn Gabe:

You can follow sites by clicking on these 3 dots in Chrome:

I have also been prompted when using Discover to follow sites.

@rustybrick this is new, huh? It says "from publishers you follow" pic.twitter.com/n85x2A91EW

— Mobilanyheter (@mobilanyheter) August 8, 2023

fwiw, I'm in US and this is the first I'm seeing of it too

been following this publication for years (I consult for them), & this is the 1st I'm seeing them in SERPs like this

the dropdown menu links to 'Subscriptions', but no Google News subs (only paid) pic.twitter.com/EZXzCwgPCo

— Oleg Korneitchouk (@olegko) August 8, 2023

Google says removing old articles will not improve quality

Gizmodo reported that CNET has deleted thousands of old articles in an effort to improve their Google performance. They made decisions based on a number of factors such as the age and length of a story, traffic to the article and how frequently Google crawls the page.

In response, Google posted this.

Are you deleting content from your site because you somehow believe Google doesn't like "old" content? That's not a thing! Our guidance doesn't encourage this. Older content can still be helpful, too. Learn more about creating helpful content: https://t.co/NaRQqb1SQx

— Google SearchLiaison (@searchliaison) August 8, 2023

The page itself isn’t likely to rank well. Removing it might mean if you have a massive site that we’re better able to crawl other content on the site. But it doesn’t mean we go “oh, now the whole site is so much better” because of what happens with an individual page.

— Google SearchLiaison (@searchliaison) August 9, 2023

We're not adding up all the "old" pages on a site and deciding a site is so "old" that other pages shouldn't rank well.

— Google SearchLiaison (@searchliaison) August 10, 2023

We were asked if older content is an issue for ranking and if getting rid of it is an issue. That’s not in “isolation” but a specific situation and it’s still no. Just having older content is not necessarily bad. See also: https://t.co/JnhiAhJauZ

— Google SearchLiaison (@searchliaison) August 10, 2023

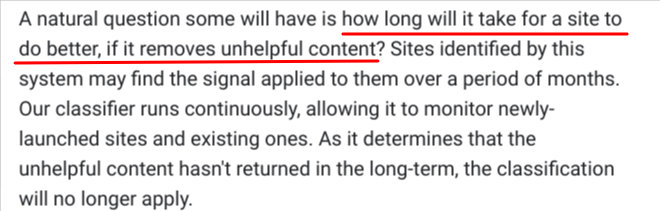

This sparked a debate on whether content pruning has value or not.

I have had cases in the past where we deleted copious amounts of thin content in the form of forum profiles, and posts where there was a question but no answer. In 2014 I had a beautiful Panda recovery after doing this with one site. In 2018, Moz deindexed 75% of their site, again, mostly low quality user profiles and saw a lift of 9% in organic users the next month.

There is an important distinction here. Removing content that never was valuable to anyone is probably a good idea. But removing content just because it’s older and not visited anymore is probably not necessary.

I think I can see where the confusion comes from. According to Ahrefs, CNET’s organic traffic has been in decline since Nov 15, 2022.

Although Google has not confirmed that this date was connected to the helpful content system, based on the number of sites I have reviewed with ongoing losses starting on this day, I believe it is.

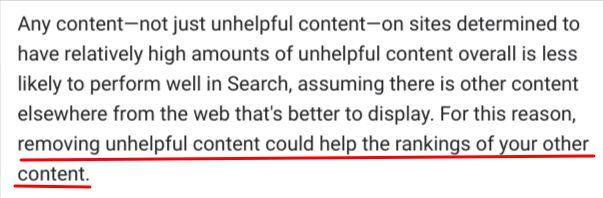

Google’s documentation on the helpful content system is a little vague on what it takes to recover. They do mention removing unhelpful content though.

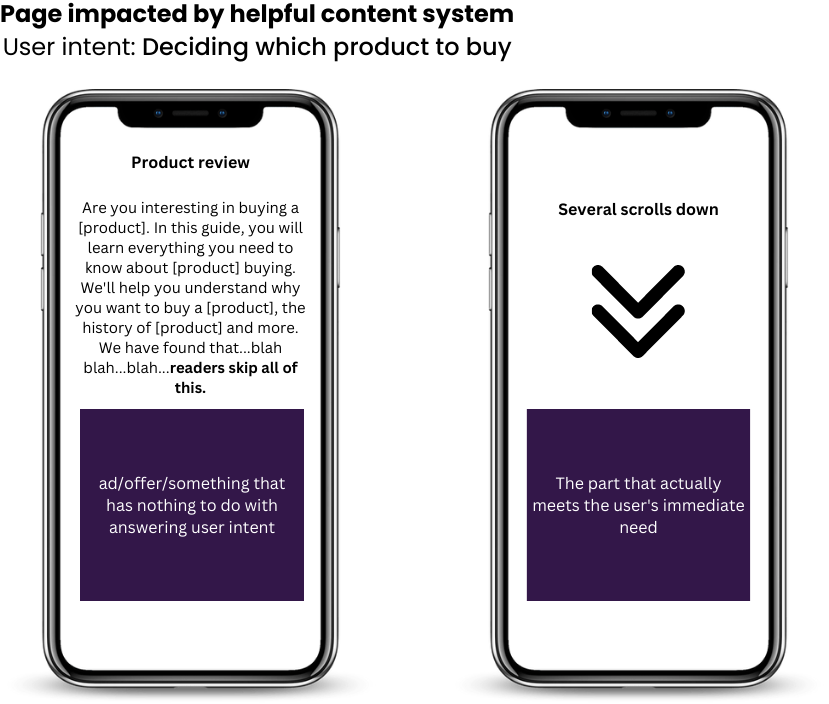

My thought: When Google talks about removing unhelpful content, they are talking about content that does not align with their helpful content criteria. While there are many things to consider in this list, none of them tell us that old content is unhelpful. Rather, content that is unhelpful is content that is likely to be:

- unoriginal

- insightful

- basically the same as everyone else who is writing on that topic

- lacking real world experience or expertise

- padded with words (to look more relevant to search engines) that readers skip over

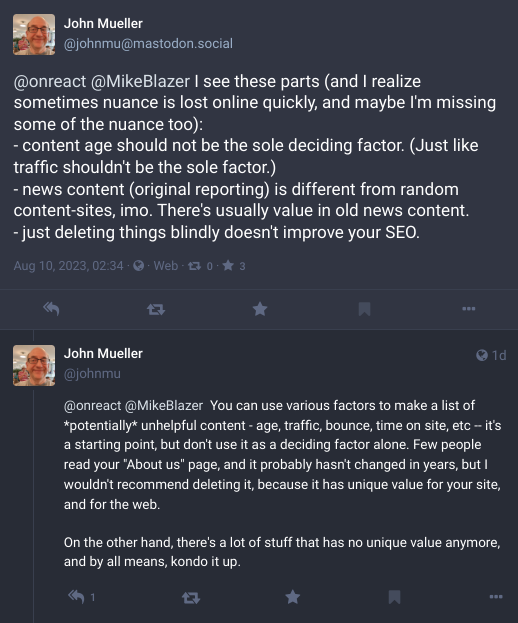

On Mastodon, John Mueller added more thoughts on content pruning:

Is your website consistently the best result to show searchers?

I was asked why a site was impacted by a recent reviews update.

If the reviews system impacted you it means that overall, other sites do a better job at aligning with these criteria than yours.https://t.co/8xCYwcidj6

Best advice I can give is to put yourself in the shoes of someone searching and look at example after example of pages that…

— Marie Haynes (@Marie_Haynes) August 4, 2023

The site owner explained how they have been improving:

- removing affiliate heavy articles

- removing affiliate links that were unrelated

The thing is, while these may make the article somewhat less annoying to searchers, these changes don’t make the content more likely to be the best answer to meet the searcher’s need. And that’s what Google wants to reward.

**This** is what you should be aiming for with your website, if you want to be highly regarded by search engines. It's not the only thing, of course, but "just as good as others" is not a way to stand out. https://t.co/UYr0edVZLS

— John 🧀 … 🧀 (@JohnMu) August 5, 2023

For years, the work of many SEOs was to create something equally as valuable as competitors and then try to SEO it to the top by good use of links and technical improvements. The more Google learns to identify truly helpful content, the less SEO tactics are key to ranking.

You’ll see in the subscriber section how a site I am currently reviewing recently saw losses after ranking for many years. For every single page I analyzed, Google started to elevate content that really was more original and insightful and helpful.

Helpful content workbook

If you want to go even deeper in understanding how to assess user intent and create content that meets that intent, you will definitely find my helpful content workbook useful!

More SEO Info

Does Google use author meta tags / schema to indicate authoritativeness?

Do I understand it right that he says that author meta tags are indicators of authoritativeness?

I always thought them to be trust signals…

It's Janapanese Google Office Hours.

Here: https://t.co/jldGg8kLBt pic.twitter.com/fuiEK3wC7S— 🐝 Olesia Korobka 💙💛🐝 (@Giridja) August 11, 2023

I took the transcript for this video and asked Claude to summarize it as it’s in Japanese. First of all, how amazing is it that I can take a Japanese document and ask questions of it? But next, here is Claude’s summary:

Here is a summary of the key points from the transcript:

- Video is a Japanese Google Search office hours session with Google employees answering questions

- Topics covered include EEAT (Expertise, Authoritativeness, Trustworthiness), handling AI-generated content, optimizing large sites, news topic authority, and more

- Discussion of how Google evaluates EEAT signals like expertise, reputation, original reporting for ranking authoritative content

- Google has long handled generated content, and is using improved ML systems to detect low quality AI content

- Tips on keeping UGC sites clean, using nofollow, having human reviews to maintain quality

- Importance of page-level metrics like author markup for authority, not domain-level factors

- Handling JavaScript rendering: tradeoffs of server-side vs client-side, costs, benefits for SEO

- Emphasis on creating high quality content, not implementation details like SSR vs dynamic rendering

- Overall focus on providing the best search experience for users with authoritative, trustworthy information

I asked Claude, “How does Gary Illyes say in this video EEAT is evaluated?”

I’ve bolded some parts I thought were interesting.

Unfortunately the video transcript does not contain any direct quotes from Gary Illyes specifically discussing how EEAT is evaluated. However, here is a summary of the key points made in the discussion about EEAT:

- EEAT stands for Expertise, Authoritativeness, Trustworthiness. It refers to signals Google uses to promote high quality content in search results.

- Evaluators don’t directly rate or score pages for EEAT. They provide feedback on test samples to help train systems.

- Evaluators aren’t deciding where content appears in search or directly evaluating sites.

- Evaluators go through extensive training with many examples outlined in Google’s Search Quality Evaluator Guidelines.

- It’s difficult to give concrete advice on achieving high EEAT since it depends on the specific content. But in general, having expertise/experience on the topic, being recognized as an authority, and providing trustworthy information are important.

- Following best practices outlined in Google’s guidelines can help, but EEAT is evaluated holistically for each page rather than a checklist.

So in summary, Gary Illyes did not provide direct quotes on how EEAT is evaluated in this particular video. But the overall discussion covered the high-level approach Google takes to training systems that assess EEAT signals algorithmically.

I asked, “what does he say about author meta tags?”

Unfortunately Gary Illyes does not specifically discuss author meta tags in the video transcript provided. However, another speaker, Anna from Google, does mention author metadata in the context of evaluating authority and authoritativeness:

Anna said:

“Well, first of all I think it’s evaluated on a per page basis, per article basis, so for that article if there is a proper author, if it’s a person then it’s a person, if it’s an organization it’s the organization’s name, and maybe it’s the same for the whole domain, I don’t know. But in that form it’s like who wrote it, what’s the source, whether that source is properly specified with metadata and meta tags, those kinds of things, if those things are there that’s better.”

The key points she makes about author metadata:

- Authority is evaluated on a per page/article basis

- Identifying a proper author name, whether a person or organization, is important

- Having this author name/source specified in metadata and meta tags is beneficial

- Though details like metadata help, authority is still determined holistically for each page

So in summary, the speaker highlights author meta tags as one potential signal that can help Google better determine authority and authoritativeness for a page, but there are no direct quotes from Gary Illyes about this topic.

During the session, I realized he was referring to structured data when he mentioned "meta tags." I confirmed this with him afterward.

i.e. Regarding to Google News, It's crucial to add Author property (Person/Organization) to each page in order to demonstrate your E-E-A-T.

— Kenichi Suzuki💫鈴木謙一 (@suzukik) August 12, 2023

Moz has a new metric: Brand Authority

Brand authority is a new score developed by Moz designed to measure a domain’s total brand strength across marketing channels (as opposed to DA which measured your ability to rank on search engines.)

New from Google: Advances in document understanding

In this recently published paper, Google shares about a new dataset called VRDU that will help train their systems to better understand real-world documents like forms and receipts. Other datasets researchers use don’t look enough like real documents. The VRDU dataset uses forms and receipts with complex designs and languages so computers learn to deal with real documents.

Semrush was reporting drops in PAA’s but it was a bug

Glenn Gabe first noticed this.

Hi Glen! We confirmed with our team that it was an issue on our side. It's now fixed, but it will only affect new data. The current drop in data will remain, as we won't be able to restore historical data in this case. Thanks so much for bringing this up to us. – Sasha

— Semrush (@semrush) August 11, 2023

GA4 checklist

Brie Anderson put this GA4 setup checklist together.

MozCon decks

It looks like MozCon was amazing. You can download several of the presentations here. I’d recommend:

Google’s Just Not That Into You: Intent Switches During Core Updates by Lily Ray

Lower Your Shields: The Borg Are Here by Dr. Pete Myers

The Great Reset by Wil Reynolds

AI News

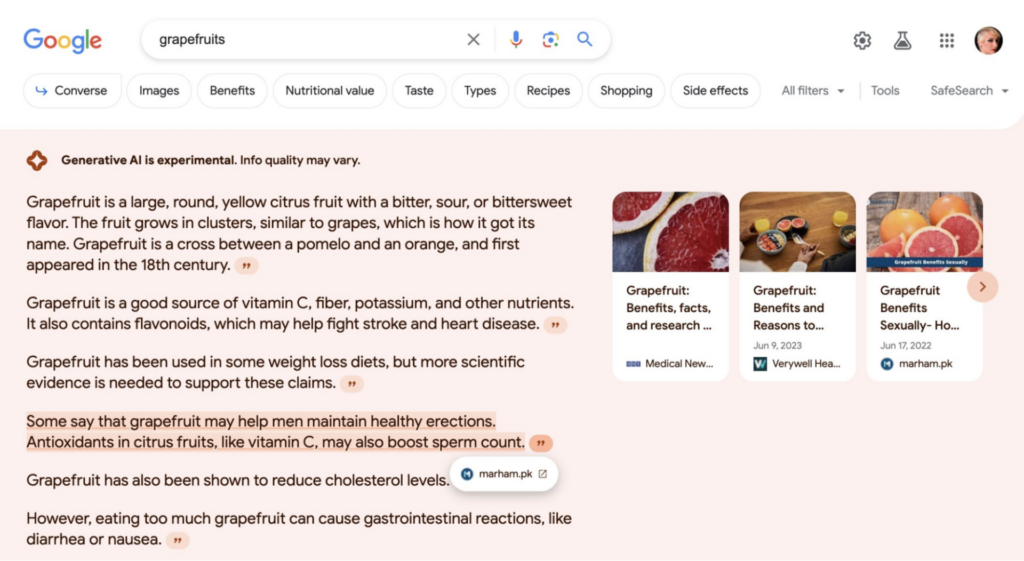

SGE

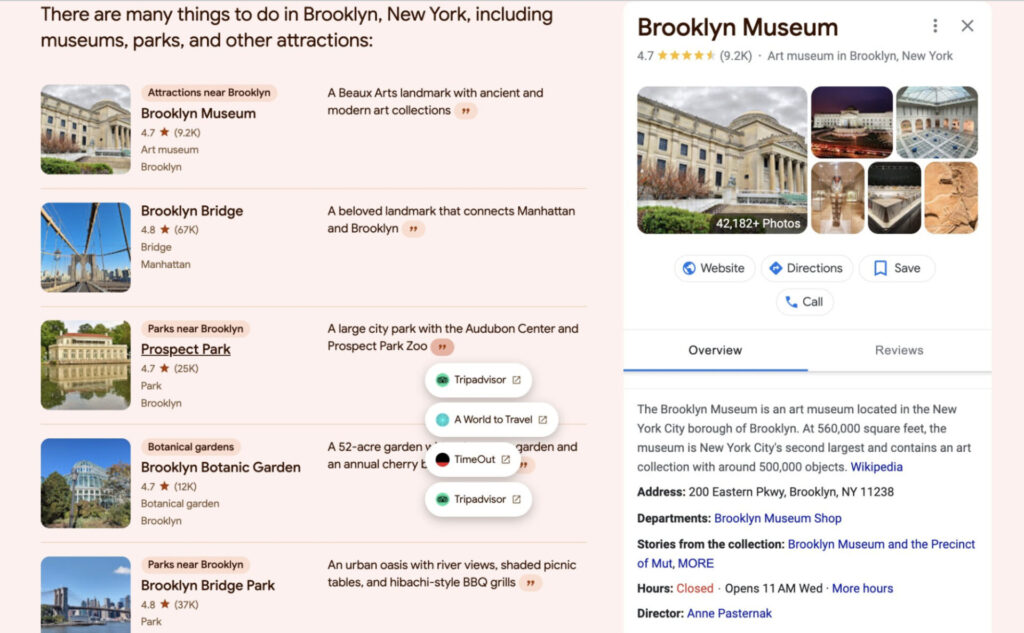

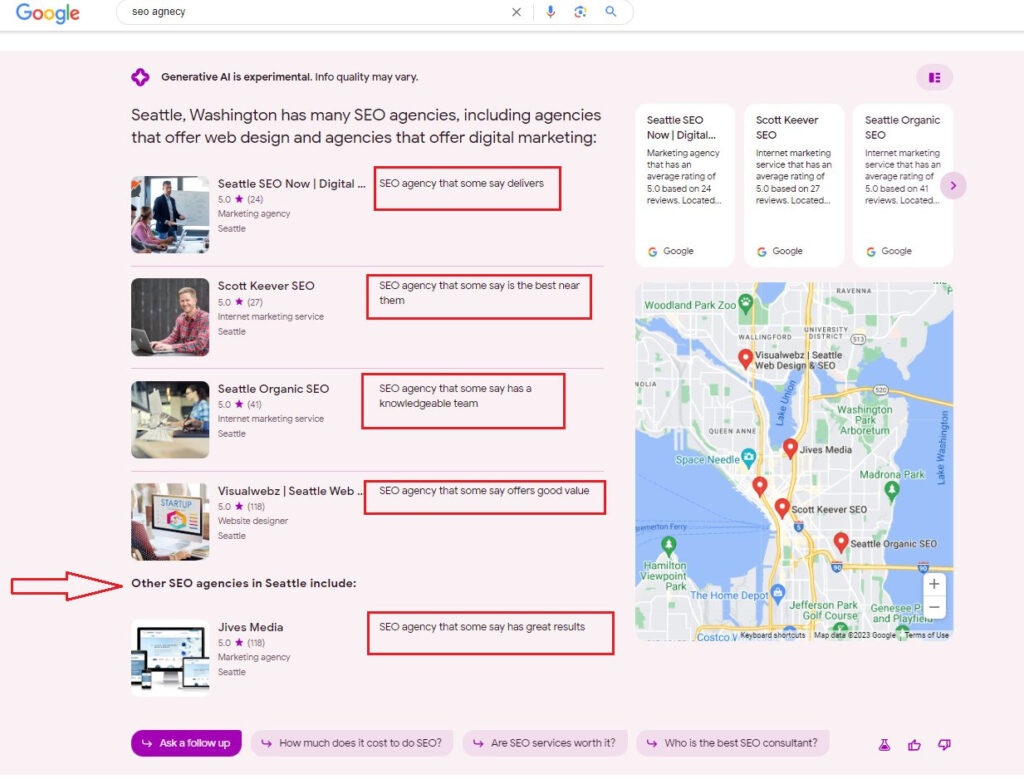

Here are the latest views shared of SGE this week.

Local results – reviews are so important

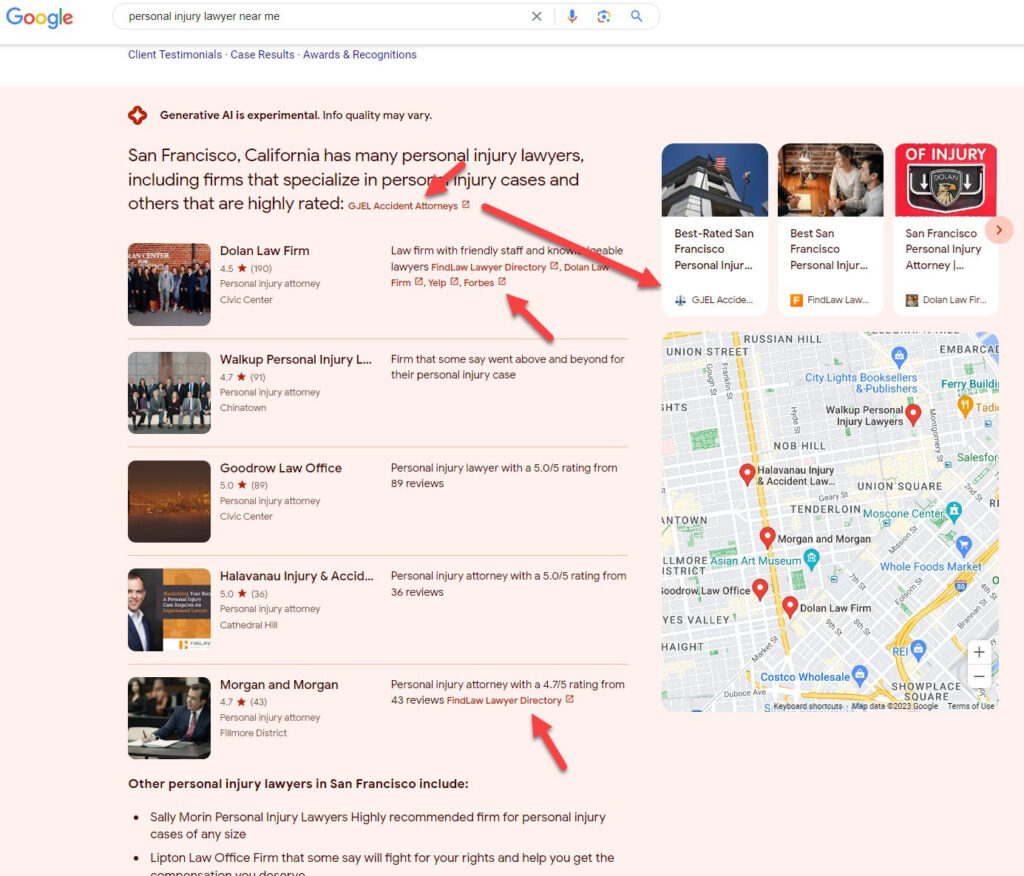

Andy Simpson shared a local search. The query was “personal injury lawyer near me.” The summary of the result came from a lawyer’s site but that firm was not actually mentioned in the SGE recommendations.

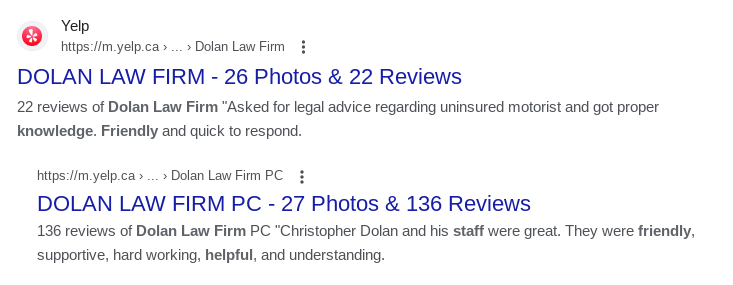

Several of the firms had notes next to them about their reviews. For example, Dolan Law Firm was described as “Law firm with friendly staff and knowledgeable lawyers”, citing FindLaw Lawyer Directory, their own website, Yelp and Forbes.

Where does “Law firm with friendly staff and knowledgeable lawyers” come from? Those exact words do not exist on the internet. I believe that this summary is synthesized from the information in Google’s knowledge graph…which is created from the body of information that exists on the internet.

Lily Ray also shared an SGE result where instead of linking to GBP profiles or the business itself, Google links to places that have reviewed the business.

Here is a screenshot from Khushal Bherwani showing once again, how user reviews are summarized.

I think that cultivating reviews is so important. I do a horrible job myself. If my info, consulting or my newsletter has helped you over the years, can you leave me a review sharing how I have helped? Who knows, perhaps Google will use the words you leave to summarize why people find my content helpful.

Random information synthesized

Here’s another example shared by Lily Ray. It’s interesting that this factoid was surfaced from a site that does not rank organically at all for the term.

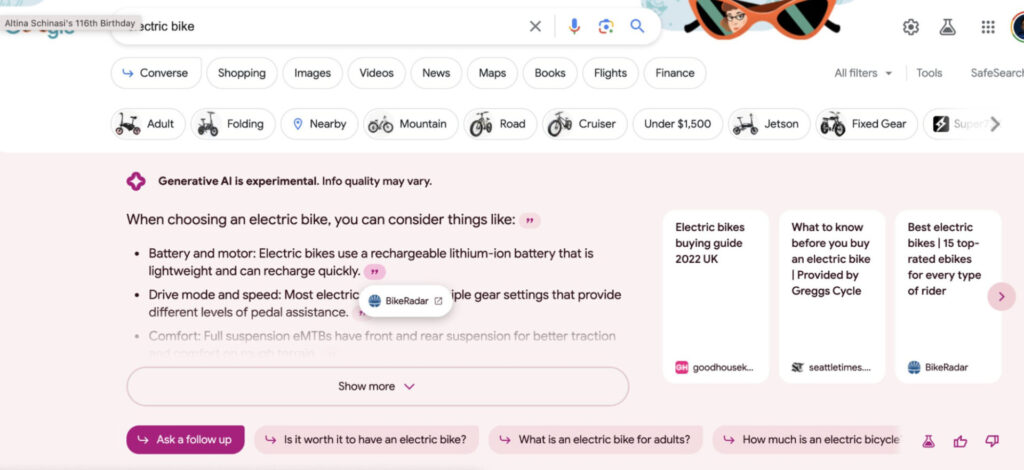

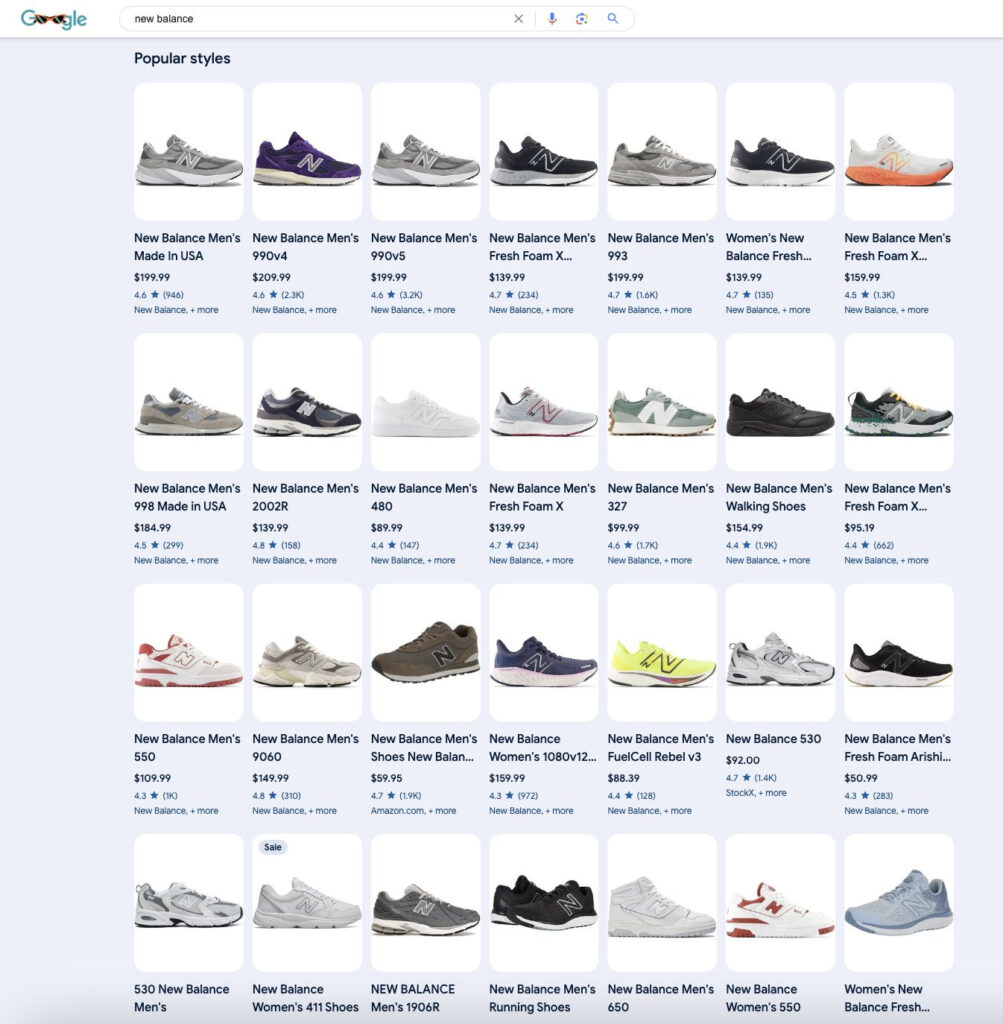

Juan Gonzalez shared these commercial SGE results:

Interesting topic filter bubbles:

Reference links:

Popular styles:

Links in SGE are often not helpful

Narattor: the links were not, in fact, the places where you can download the Monsterrat font. pic.twitter.com/h7pY7G0eTj

— Lily Ray 😏 (@lilyraynyc) August 6, 2023

SGE visits show up as Google/organic in GA

SGE shows up in Google Analytics as google/organic.

– @jes_scholz #mozcon(been wondering about this, thank you Jes!)

— Lily Ray 😏 (@lilyraynyc) August 8, 2023

ChatGPT

Custom instructions available to all

Custom instructions are now available to ChatGPT users on the free plan, except for in the EU & UK where we will be rolling it out soon! https://t.co/Y1IIjgDDPV

— OpenAI (@OpenAI) August 9, 2023

Now that ChatGPT has rolled out custom instructions to most users, try out this instruction — it makes GPT 4 far more accurate for me: (Concat the rest of this 🧵 together and put in your custom instruction section) pic.twitter.com/OD05ZqJlWq

— Jeremy Howard (@jeremyphoward) August 10, 2023

I’ve shared more on custom instructions in the section on great articles published this week. Also, this thread has some more examples of how others are using custom instructions. In the subscriber content, I’ve shared what mine are.

OpenAI has a new bot: GPTBot. You can block it.

Here’s is OpenAI’s new documentation on it’s user agent, GPTBot. If you don’t want OpenAI crawling your site, you can add this to your robots.txt:

User-agent: GPTBot

Disallow: /

What happened to GPT-4’s multimodal capabilities?

GPT-4 was announced with the ability to accept images and text inputs, but these features are not yet accessible. This article suggests that one of the reasons is that OpenAI is facing a shortage of GPU’s, the computers needed.

Web browsing will return to ChatGPT

This was posted by an OpenAI employee.

Please hang tight, it’s coming back. Nothing’s changed from the earlier communication. Browsing (with Bing) is an important capability for ChatGPT. https://t.co/7mfTZIvHyL pic.twitter.com/1MIlwILwWJ

— Adam.GPT (@TheRealAdamG) August 6, 2023

More AI Info

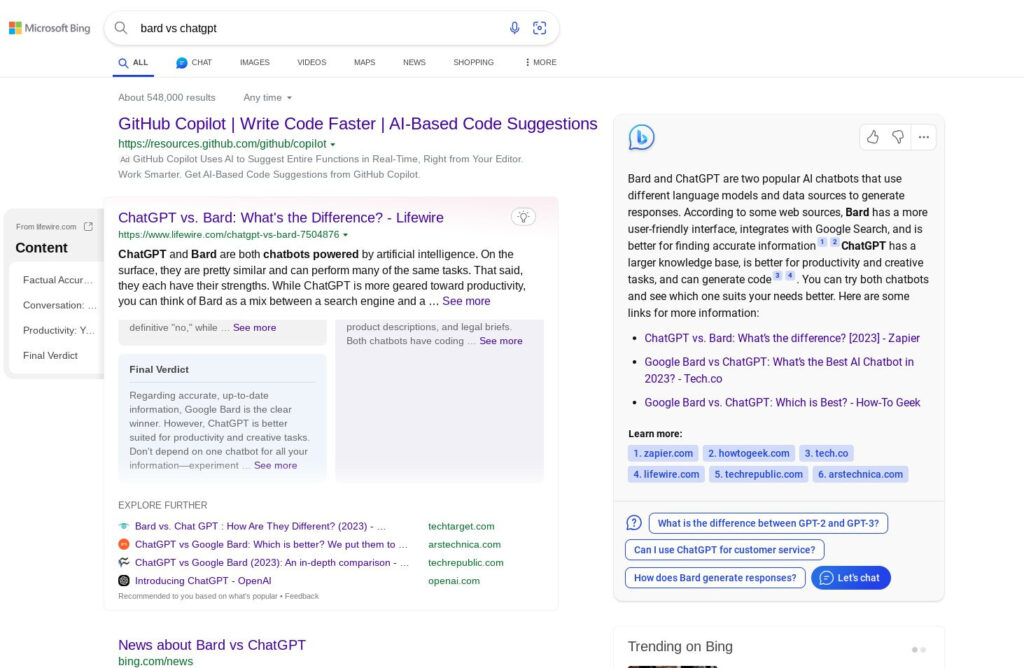

Have you seen Bing’s AI generated answers in Search? I was investigating a client with drops in Bing traffic and was shocked to see how much of the client’s site was included in Bing’s featured snippet collection alongside a ChatGPT generated answer. This is not my client’s keyword, but a similar SERP:

Bing announced AI enhanced SERPS in Feb 2023. There were reports of changes related to this being seen in mid-April 2023.

Google has told the Australian government that copyright law should be altered to allow for generative AI systems to scrape the internet. They want everything on the internet to be fair game for AI unless you’ve opted out.

Google and Universal Music Group are negotiating a deal to license artists’ vocals and melodies to users to create AI-generated music legally, amid debates over protecting copyrights versus allowing innovation with proper compensation.

The NYT changed its TOS to prevent AI companies from using its content to train their systems. A few months ago we learned that Google and the NYT entered into a deal for $100 million that allows Google to feature Times content on some of its platforms. I wonder if perhaps part of that deal was related to whether or not Google can use Times content for AI.

IBM has developed a prototype “brain-like” AI chip using analog components called memristors that mimic neural connections, promising more energy-efficient and less battery-draining AI for applications like smartphones.

Disney formed a task force to investigate using AI across its business units. They hope that AI can help control the soaring costs of movie and TV production.

New research from BlackBerry suggests many organizations are considering banning or restricting generative AI tools like ChatGPT in the workplace due to concerns about data security, privacy, and reputational risks.

Google launched Project IDX, an AI enabled browser based development.

Claude instant, version 1.2 is now available through their API. The latest model has less propensity to hallucinate and is more resistant to jailbreaks.

UK retailer John Lewis has partnered with Google Cloud in a 100 million pound agreement. They plan to use Google’s AI and machine learning tools to make its workforce more efficient, make better use of data insights and help curate products and services. For example, customers may be able to use an image scanning feature in the John Lewis App to show its home design stylists a room they’re looking to furnish.

Rupert Murdoch’s News Corporation reported a 75% drop in full-year profit due to lower print and digital advertising revenue, but sees opportunities with AI to reduce costs and create new revenue streams. “We are already in active negotiations to establish a value for our unique content sets and (intellectual property) that will play a crucial role in the future of AI.”

Here’s an interesting article I was honoured to be a part of on Time.com. A reporter contacted me to ask about a site that appeared to be using AI to create copious amounts of content. My suspicion is that they were trying to create topical authority by producing many, many pages and topical hubs. Because this business is legitimately a medical practice with many locations, I think they were able to get away with this spam.

This article was probably good for my EEAT. Not only am I mentioned in an authoritative place, but in conjunction with topics such as the helpful content system, Google’s algorithms, AI and EEAT itself. 😀

The company that was outed by this Time article published a blog post in response.

Other interesting industry info

Experts are predicting that Google and Meta eventually banning Canadian News (because of Bill C-18) will be a disastrous move which will promote the spread of misinformation and fake news. Meta has already begun making it so that users cannot share links from Canadian News outlets on Facebook and Instagram. I am still seeing links to Canadian News on Google searches, but this will likely end soon too.

To reduce spam and fraud, YouTube is making Shorts links unclickable, removing banner links completely, and implementing new profile links so creators can highlight important content. Starting August 31st, links that appear in the Shorts comments section, Shorts descriptions, and the vertical live feed will no longer be clickable.

YouTube is killing your ability to link out from shorts…

Expanding the walled garden pic.twitter.com/rBMRHHypu1

— Miles Beckler (@milesbeckler) August 10, 2023

However, Google is doing some new things here:

Say hello to content links!

🩳 Edit any Short in Studio Desktop

🔗 Add a link to any related video, long, Short, or Live, public or private

🤳 Get a one-click, Remix-style button right on the Short

🤔 Use it to bridge Shorts to long-form, link multi-part Shorts, drive to live,… pic.twitter.com/tjmYqEWEdZ— YouTube Liaison (@YouTubeLiaison) August 10, 2023

Google slides is introducing a new feature that lets you draw on your slides while presenting.

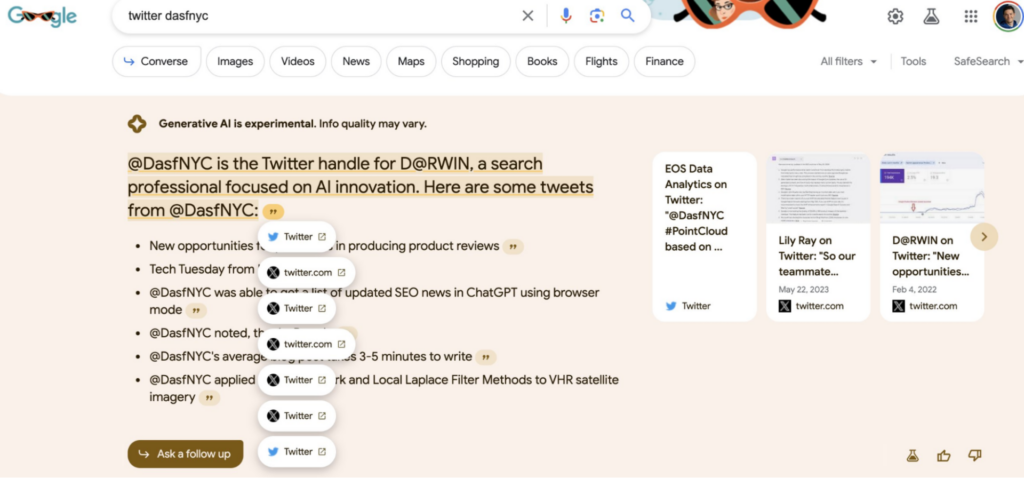

This chrome extension looks helpful! I have installed and expect it should help me.

The thing about the Tools QuickView Chrome extension is that it is so helpful and useful for SEOs.

While there have been other implementations a little similar to it, Tools QuickView expands on the concept of deep linking and makes available two new powerful features:

1/ https://t.co/MygWqgv7PW pic.twitter.com/7faXpyEgEw

— D@RWIN (@DarwinSantosNYC) August 9, 2023

Great articles published this week

In the subscriber content, I’ve shared Claude assisted summaries of these articles to save you time.

Why You Need a Knowledge Graph, And How to Build It – A Guide to Migrating from a Relational Database to a Graph Database by Stan Pugsley

Here’s why you should be using Custom Instructions in ChatGPT – Everything you need to know about ChatGPT’s custom instructions by Chandler Kilpatrick (I shared my custom instructions in the subscriber content.)

Over 67% of Domains Using Hreflang Have Issues (Study of 374,756 Domains) by Patrick Stox and Joshua Hardwick on the Ahrefs blog

Tracking brand rankings in ChatGPT? Here’s one way [Free Template] – Wil Reynolds

Local SEO

Are local marketers ready for SGE?

A survey from Brightlocal revealed that most local marketers are not fully prepared for the rollout of Google’s new Search Generative Experience (SGE). The poll of 378 users found 33% have not heard of SGE at all, and only 17% feel they have a confident understanding of what it is. Just 4% think SGE will make it easier for businesses to rank, while 73% don’t know how it will impact local search.

Reviews may be filtered in some areas due to explicit language

In France (all of Europe?) reviewer GPB reviews now include explicit language in the contribution area that specific reviews were filtered and are not showing. Previously they showed to reviewer and not to the business but carried no alert pic.twitter.com/0wMoYwvST3

— Mike Blumenthal (@mblumenthal) August 10, 2023

Do you really need to embed from YouTube?

The Sterling Sky team reported this fascinating case where a page’s traffic plummeted after removing a YouTube embed (replacing it with a Vimeo one) and returned once it was reinstated.

SEO Jobs

Looking for a new SEO job? SEOjobs.com is a job board curated by real SEOs for SEOs. Take a look at five of the hottest SEO job listing this week (below) and sign up for the weekly job listing email only available at SEOjobs.com.

SEO Specialist ~ Payhip ~ $60k-$90k ~ Remote (world wide)

Local SEO Director ~ On The Map Marketing ~ Miami, FL (Relocation Support + Bonus)

Subscriber Content

Investigating an odd May 25, 2023 traffic drop – assessing content across the helpful content criteria

I am currently investigating two different sites suffering a significant decline in traffic that starts on May 25, 2023.

I initially thought that perhaps this was another day where the helpful content system appears to have impacted sites. It was a good assumption considering almost every site I have analyzed in the last few months has had a drop that I feel I can attribute to an (un)helpful content classification.

However, neither of these sites have the typical type of content that I have seen impacted so far.

Read more in the Subscriber Content. I’m experimenting with using Claude to help me assess content quality across Google’s helpful content criteria. This was a fascinating case. I think that May 25 marked an important change to Google’s ability to understand user intent and match it with relevant content!

We also discuss whether having a “featured on” section on your site is a good idea.

The Search Bar is gearing up

I’ve been hinting about my new membership group where we discuss SEO and also AI. So far it’s going so well. I’m inviting people in slowly as I don’t want to be overwhelmed as the community grows. There’s a link in the subscriber content for those who want to join now.

This week we had so many great discussions. Here’s one where we discussed the FAQ changes.