How Google’s Helpful Content System Has Radically Changed Search

In this long-awaited return episode of Search News, Dr. Marie Haynes explains how Google’s helpful content system has radically changed search rankings.

She provides background on her experience studying Google’s algorithms for over 16 years, and on how the company has shifted to relying more on AI systems like the helpful content system rather than just links and keywords.

Marie walks through the evolution of major algorithm updates like Panda and Penguin, and what they revealed about Google’s capabilities and focus on content quality. She explains how machine learning models can now predict helpful content, with training data from quality raters.

The episode provides real-world examples of sites that were impacted by recent helpful content updates, and what specific changes Google rewarded. Marie also offers advice for those struggling with helpful content suppression and wanting to better align with Google’s criteria.

This episode will completely change your understanding of modern search rankings, and what you should prioritize on your site for visibility and traffic in 2023 and beyond. Don’t miss Marie’s long-awaited insider perspective on Google’s helpful content!

Transcript

Intro

Welcome to another episode of Search News You Can Use, with me, Dr. Marie Haynes.

It’s been a while since I said that and I want to thank you for your patience, as I took some time off to reassess what’s happening with the search landscape.

Today I want to share with you how I believe Google’s Helpful Content system has radically changed Search. This is a really big deal for SEOs, and it has taken me months to be able to formulate my thoughts on this. In this episode I’m going to take you through some history, going back to the days when the Penguin algorithm devastated sites and ending with how the Helpful Content system is doing the same today.

My Background

If you’ve been a regular listener, you know that I’ve been studying Google’s algorithms for a long time.

When I started, I was a veterinarian. In 2008, I made a website to help my clients and other people on the internet get good veterinary advice online, and then I got sucked into this thing called SEO, trying to figure out how to get more people to come to my website.

And then, for the last 16 years, I’ve been obsessively trying to understand how Google works…honestly, I don’t even know why! Eventually, that turned into consulting with others. I ran an agency for several years and have spoken all around the world on what Google’s algorithms are built to reward. And let me tell you: I think so many SEOs are focusing on Google’s algorithms as they existed before AI became an integral part of how Google ranks websites.

So in today’s episode, what I’m going to do is share with you how AI systems like the Helpful Content system have radically changed how Google ranks search results.

I am betting that many of the tasks you do for your website on a regular basis…things you do because they’re seen as good standard SEO practice…do very little to actually improve your Google rankings, because they don’t do much to make your content more helpful than your competitors’ content.

I’ve been doing site audits and assessing traffic drops since…gosh, my first site review was in 2012. So that’s 11 years of thoroughly analyzing sites that are struggling to rank. I started by trying to unravel the Penguin algorithm. Then, many of the sites coming to me for advice were hit by Panda (I’m going to talk more about Panda soon). Then came the days of what Glenn Gabe called Phantom updates. Google later told us these were what we should call Core Updates.

And then, let’s talk about the end of 2022. This is when I stopped doing the podcast, because there was just too much to understand and so many changes happening with how Google ranked results. Every site I analyzed appeared to be impacted by the Helpful Content system, but I was having trouble not only explaining that, but also coming up with practical strategies for recovery.

Discussing Google’s Helpful Content System…with Google

The very first Helpful Content update rolled out on August 25th, 2022. Just a few weeks before that, I got an email from Google saying, “Would you like to talk to us about this new algorithm change that we’re going to have?”

I mean, yes?

I got on a video call with Danny Sullivan from Google and Alex Youn, Head of Communications and Public Affairs, and they explained to me as much as they could about this new Helpful Content system. It was a vague discussion.

I felt they wanted to get the message out that this update targets (and I want to get this right) content that exists just for SEO purposes. They originally had a different name for it: rather than the Helpful Content update, they were going to call it the People First update, because it’s meant to reward content that’s created for people, for existing audiences…and it punishes content that is created primarily for search engines.

It’s a little bit hard to grasp what that means…content created for search engines. Hopefully by the end of this episode you’ll have a better understanding of whether this is the type of content you are producing. I’ve spoken with a few site owners this week alone who had an eye-opening moment when I pointed out the parts of their content that they wrote for search engines, not people.

So…Google wanted to ensure that people still understood that much of what we do in the name of SEO is good and helpful…Google is not against SEO…They have a whole guide to encourage us with good SEO practices. But also, Google is aware that a lot of content that does well on the web does so more because of a knowledge of SEO and what it is that Google’s algorithms reward rather than actually being the best option to put in front of searchers.

Which type of SEO do you do?

I want to talk about two types of SEO. I want you to think about which camp you’re in.

One type of SEO is getting businesses found. If I’m a plumber and there are a hundred other plumbers in my city, I’m gonna need the help of an SEO, or somebody who understands search engines (particularly Google), to figure out how to get seen on that first page. Or even, how do the customers I currently have find me online?

Or maybe I have a store that exists in real life, with customers who buy my products, and I would like more customers to find me online. In most cases, that business can benefit from the help of an SEO to get found.

No matter what happens in the future with AI and large language model tools like ChatGPT or Bard changing search, businesses will still need to consult with those of us who understand the online landscape. SEO is not dead.

However, there’s a second type of SEO. And I am concerned for this group.

A lot of you make money mostly from creating content online or from driving people to content online. But that content was not really created to serve your existing audience. Rather, you create this content because of the opportunity that Google gave us.

The more you can get people to use Google to come to your content, the more money you make. And the more money Google makes. It’s a fantastic system. I’ve heard some people say there’s no way Google would use AI to answer search queries rather than send people to websites, because they’d be cutting off their own ad revenue…but that’s a whole other topic. I think AI will completely reshape how money is made on the internet, especially when we talk about Project Tailwind, or Google’s NotebookLM, which will let us use AI that is grounded in our own Google Docs. I wrote about that in episode 295 of my newsletter this week. Hopefully I’ll get more podcast episodes in where I can talk about these opportunities as they unfold.

So, many of you do SEO because we have this opportunity to create content and then use our SEO skills to drive traffic to that content, which allows us or our clients to make money. That’s different from doing SEO for a business with an existing audience. This is important. One of the questions Google tells us to ask ourselves to determine whether we are producing helpful content is:

- Do you have an existing or intended audience for your business or site that would find the content useful if they came directly to you?

Last year, Barry Schwartz from Search Engine Roundtable did an interview with HJ Kim from Google, and they talked about the Panda algorithm filter.

Why Google Panda was created

Panda was a change Google made to its search engine, starting in 2011.

And it’s very important: it was a change to improve the quality of the sites in the search results.

Google gave us a list of questions to consider that are still important today. You can find a link to those questions in the notes for this episode. They’ve been modified somewhat over the years. Eventually Google moved them to a new page on core updates…and now they are on Google’s page on creating helpful content.

These questions are incredibly important.

Does your content clearly demonstrate first-hand expertise and a depth of knowledge?

or

If someone researched the site producing the content, would they come away with an impression that it is well-trusted or widely-recognized as an authority on its topic?

Does the content provide original information, reporting, research, or analysis? This goes along with the question, “Does the content provide insightful analysis or interesting information that is beyond the obvious?”

When Google gave us the original version of these questions with the introduction of Panda, they actually said in their blog post that they write algorithms to try and replicate the types of things that a human would find useful.

Here’s what they say:

“Below are some questions that one could use to assess the “quality” of a page or an article. These are the kinds of questions we ask ourselves as we write algorithms that attempt to assess site quality. Think of it as our take at encoding what we think our users want.”

So, back to the interview Barry Schwartz did with HJ Kim. He was talking about Panda, and he said that Panda was launched because Google was worried the web was going in a direction they didn’t want, with people creating copious amounts of content just for the sake of making content.

So even back in 2011, Google recognized that it had created a monster: many websites figured out that if they could create content, and if they could understand how Google’s algorithms work, they could get a lot of visitors to that content and make a lot of money. And so massive corporations formed to create content.

I can’t imagine how many people are employed overall by these corporations.

So in 2017 I had a realization as I was analyzing a client with a traffic drop. I was seeing that the pages outranking them were ones that better aligned with Google’s criteria. They had expert authors with really good author bios and a number of other things that Google laid out in their criteria. And that’s when I realized that it may just be possible for Google to build algorithms that actually reward what they say they want to…and if that’s true, then holy cow, that’s something we should be paying attention to, isn’t it? Yet there really wasn’t any logical explanation as to how they would do this.

Now that I understand more about Google’s use of machine learning systems, it makes perfect sense to me how they can identify which content is likely to be helpful.

For many SEOs, especially those who have been in SEO longer than I have, or who have done more practical SEO work than I have, the things they focus on most are technical improvements and links.

Did Google learn about spam via manual actions?

I want to talk about links…and then I’ll get back to explaining more on how the Helpful Content system works and why it’s so important.

The link building industry is massive. So much money has been spent on links over the last decade or so.

My start in actually charging money for SEO work came from understanding what Google values in terms of links.

I was in the SEO chat forums, and there was a lot of talk about Google penalties…manual actions, where Google would individually decide that your site should be penalized because it’s breaking their guidelines. I found it an interesting topic that a search engine can just manually decide to penalize a website and take a business out of its search results or suppress it severely.

And very few people understood how to get back into the search results and rank well again.

What it took to remove these penalties was brutal. And so this guy approached me in the forum…he sold eyeglasses. And he had built a lot of links, because links worked.

And when I say built links, this is back just before Penguin came out. He built links by finding every directory he could and putting a link to his website anchored with a keyword like eyewear or Ray-Ban sunglasses, and he would go to sites that would allow him to publish articles. There was EzineArticles, ArticlesBase was another, and there were so many other places where you could write your own article and link back to your own site. And there were services where you could specify the anchor text you wanted and then purchase mentions that would be inserted in blog posts that got paid to link out.

So he would write an article about Ray-Ban sunglasses and link back to his website. And that type of thing worked so well, until he got penalized. In a lot of cases you could predict that if you just got a certain number of links, you could rank number one for a topic. That worked for SEO for a while, and that guy made so much money on that website until he got a Google penalty for unnatural links. This was a manual penalty, not Penguin: Google manually decided to penalize the site, so it ranked for almost nothing.

And so he asked me, “Can I pay you to remove the penalty on my website?” And I said, “I’m a veterinarian…I’m just interested in SEO, but I don’t do it professionally or anything.” And he said, “Well, why don’t we try?

And if you succeed, I’ll pay you three hundred dollars.” I was like, oh, that’s a challenge! So I read every forum post I could find…every Google blog post, every Matt Cutts video…anything at all I could find, and put together all of Google’s advice and all of the collective advice of SEOs discussing penalties on the internet.

This was before we had the disavow tool…and I kind of figured out what we had to do. And it took forever. We had to identify which links Google considered unnatural, reach out to each of the site owners publishing those links and ask for them to be taken down or nofollowed, and then keep track of our progress. It was way more than $300 worth of work. But I figured it out and we got that penalty lifted. And for the next client I took, now that I knew I could do it, I charged 10x that amount. I really believe that when you’re learning something, there’s great value in doing some work for free or at low cost to get your feet wet and prove to yourself that your work is worth charging for.

Now, even though that site got the manual action lifted, it never recovered. Some sites have. I’ve removed many, many penalties since then and have seen some fantastic recoveries, but that site never recovered. A lot of sites don’t. The reason is that those links worked until Google figured out how to stop them from working.

They worked to convince Google’s algorithms that, hey, this is a site about eyewear, and lots of people are talking about it and linking to it…a bunch of websites are saying this is the place where you have to buy Ray-Bans. Google’s algorithms had trouble determining that a link from EzineArticles was not actually a vote from someone else about this site. And, at the time, Google could not figure out how to stop those links from making this site look better than it was, other than manually neutralizing those links and, in some cases, completely taking the site out of the search results. A manual action meant that an actual human being had looked at the website, looked at the backlink profile, and decided you were breaking Google’s guidelines.

Now, if that website were created today, nobody would build links that way, on low-quality directories like bestPRdirectory dot com or high PageRank directory dot com.

But at the time, Google couldn’t figure out how to stop those links from working. That kind of link was actually quite helpful before Penguin. So they started giving out these manual actions.

And then in April of 2012, they ran an algorithmic update that they called the Penguin update.

And because I was now involved in forum conversations on manual link actions, how Google values links and how penalties work, people were looking to me for advice on this Penguin update. If you were not around in SEO in 2012, the Penguin update was catastrophic. There were sites that had 90% or more of their traffic completely disappear with this update.

There were empires built on understanding how Google valued links in its algorithms and how to manipulate that, and they were dead overnight. I know so many people whose story is that they were making thousands, sometimes millions, of dollars building links…and then Penguin happened and all of their rankings, or worse, their clients’ rankings, were gone.

With the Penguin algorithm, Google figured out how to algorithmically deal with the worst of the worst problems in terms of people manipulating rankings with links.

I’m just realizing something now. Let’s go back to those manual actions. I’ve often wondered, why would Google spend that much time and resources on manual actions…the number of people they must have paid to review reconsideration requests. There was a time where I was submitting multiple requests per day…with so many sites reaching out to me with new manual actions. I hired people, I trained my neighbours and friends to remove penalties…there were so many sites manually penalized by Google.

Why did they invest so much time into the process of manual review?

I think that whole time Google was learning. As we gave them spreadsheets of links we admittedly had made and were trying to remove, or eventually disavow, those were examples they could use to train their machine-learned systems about spam.

I know I was learning. Every time I removed a penalty, I saw another link scheme. I learned more about what types of manipulation worked, and which types Google was targeting with manual actions.

Links.

Links were the core of Google’s algorithm for a long time. Links are the thing that made Google better than all the other search engines. I used Excite for many years.

Lycos. Yahoo! They all existed before Google. And it was Google’s use of links, the idea that if lots of people link to a piece of content, then it must be a pretty good piece of content, that was revolutionary.

Now, the Penguin algorithm is a strange thing.

Each time Google ran a Penguin update, I would say, “See, Google is getting better at ignoring unnatural links, and you should all stop building links just to prop up your rankings.”

Yet, people who built links would continually tell me that many types of links were still clearly being rewarded by Google’s algorithms. I think many still are today.

Google’s documentation on their ranking systems tells us PageRank (PageRank is why we build links) was the core of Google’s ranking algorithm, and it’s still important today, but how they use it has changed significantly over the years.

When you can truly get people to link to you and recommend you, not because of your marketing efforts (although you can do some marketing; you want to get the word out), but because you do stuff that earns links and earns recommendations from those who matter in your industry, I do think those links are still very valuable. They speak to your E-A-T, and they are one of the many criteria Google can use to evaluate whether content is likely to be helpful.

Links have always been important. But I think they’re way, way less important than most SEOs think.

With all of this said, if you are struggling to get good links, you can reach out to me; I have some connections with companies that I feel do good work in this area. But a good link builder, someone who can truly help you attract the kind of link Google wants to recognize and reward, is quite expensive, because it’s not easy getting the kind of link that Google wants to consider in its algorithms.

Manual actions evolved

So, let’s go back to Penguin.

When Google first announced Penguin, the announcement didn’t really talk about links. It talked about manipulation and keyword stuffing, and it showed screenshots of a whole bunch of stuffed keywords. It talked about webspam in general, but it took a little while before the SEO community unanimously agreed that Penguin was primarily about links. Then Matt Cutts, who was Head of Webspam at Google, confirmed on Twitter that, yes, links were the primary area of concern for Penguin-hit sites.

I spent many years trying to understand Penguin.

Lots of legitimate businesses had paid for what ended up being low-quality SEO, kind of like shortcut SEO, where they just built links because links worked…and these real businesses were hurt by Penguin. There was very little guidance from Google on what a business could do if it was affected. I had many businesses turn to me for help.

And here I was, a veterinarian with an interest in SEO, trying to learn all I could to help these businesses. Eventually Google gave us the disavow tool, and I experimented with using it. From my experience in removing manual actions, when you have a manual action for unnatural links, it is difficult to get it removed unless you make efforts to remove or disavow almost every single link that was made for SEO purposes. You do not want to get a manual action.

It was super interesting to see how the types of links Google chose to penalize for changed over the years. Years ago, we’d see sites penalized for widespread article publishing and low quality directory links. But, gradually it got harder and harder to distinguish a website’s unnatural links from their truly earned links.

For several years, I had an entire team of people sitting around a table, going through the links pointing to a website, and deciding together whether each was a link made for SEO purposes or a link that Google’s algorithms were likely to consider valuable and worthy of counting. Our process was to be very aggressive and get rid of anything that smelled like SEO, because if we didn’t, we would fail our reconsideration requests repeatedly until we had been wildly cutthroat in identifying links made for SEO purposes.

One quick tip – if you’re dealing with a manual action and you’ve had several failed attempts…sometimes I would send a reconsideration request that said, “Look, we’ve done all we can to identify links made for SEO. We’ve removed what we can and disavowed the rest. There is nothing more we can do.” And then often, magically Google would remove the penalty.

Who knows…perhaps they were squeezing these sites that had built unnatural links to get as many examples as possible of links made just for SEO, so that they could use those to train AI spam models to identify unnatural links.

The reason I’m saying this is that in 2012, when I was removing manual penalties, the penalties were for things Google couldn’t yet handle algorithmically, like low-quality directory links with keyword anchors. By 2020, the manual actions Google was giving out were for super sophisticated link building campaigns that were extremely difficult to distinguish from naturally earned links.

The Evolution of Penguin Shows a Shift to AI

Now, let me tell you about what happened to Penguin in 2016. There’s a funny story. You may have seen people say on Twitter, “Oh, there’s an algorithm update. Marie Haynes must be on vacation.” It goes back to the years I spent trying to understand the Penguin algorithm. I had so many businesses that were just waiting on my advice, knowing that no one really knew exactly what was best for sites impacted, but I was doing my best at trying to figure it out. This is kind of where we’re at today with understanding the Helpful Content and Reviews systems.

Between 2014 and 2016 I had so many clients that had filed disavows and were waiting for Penguin to run again to see if they would recover…because back then, you could not recover from Penguin until Google ran another update. At one point, somebody asked a Google employee what was happening with Penguin (I’m not the only one who had businesses waiting to recover), and the Google employee said, well, hang in there, we will update Penguin within the next quarter.

And then almost two years went by and we still had not had a Penguin update. I had business after business where we wondered whether we should just start over with a brand new website. We had all these strategies, and I did have some businesses that started over.

We did weird things with the way we redirected via an intermediate domain blocked by robots – to stop passing signals of unnatural links…and then in some cases even with our best efforts, the penalty still followed them. We tried so many different strategies to help these legitimate businesses that were suppressed by Google and waiting forever. And some argued that the businesses deserved to suffer because they’d tried to cut corners with low quality link building, but many of these businesses just hired someone and had no clue that that person was breaking Google’s guidelines by building massive numbers of links.

When Google finally did run another Penguin update it was September of 2016 and it was also the first time in years that I had decided to travel. Here’s where the funny part about me traveling comes in.

So, after years of waiting, I was on a trip, on a train with barely any internet connection, and Penguin finally ran. Since then, Google has run an update a few other times while I was traveling. Now, I haven’t traveled in a while…Covid changed so much. But I’m speaking at a couple of events in the fall, so be warned.

Now, why did it take so long for Google to re-run Penguin? Let me tell you about Penguin 4.0. When Penguin 4.0 happened in 2016, Google was kind of vague about it. They told us that Penguin was now more granular in its ability to assess links, and also that instead of penalizing websites, it now was able to neutralize the effect of unnatural links. That’s a big deal.

The thing was though…they didn’t really officially communicate any of this. We found that information out in random social media posts, not in official Google blog posts. There was very little from Google, and the average business owner certainly had no clue what was happening.

With Penguin 4.0, Google lifted the suppression on many sites.

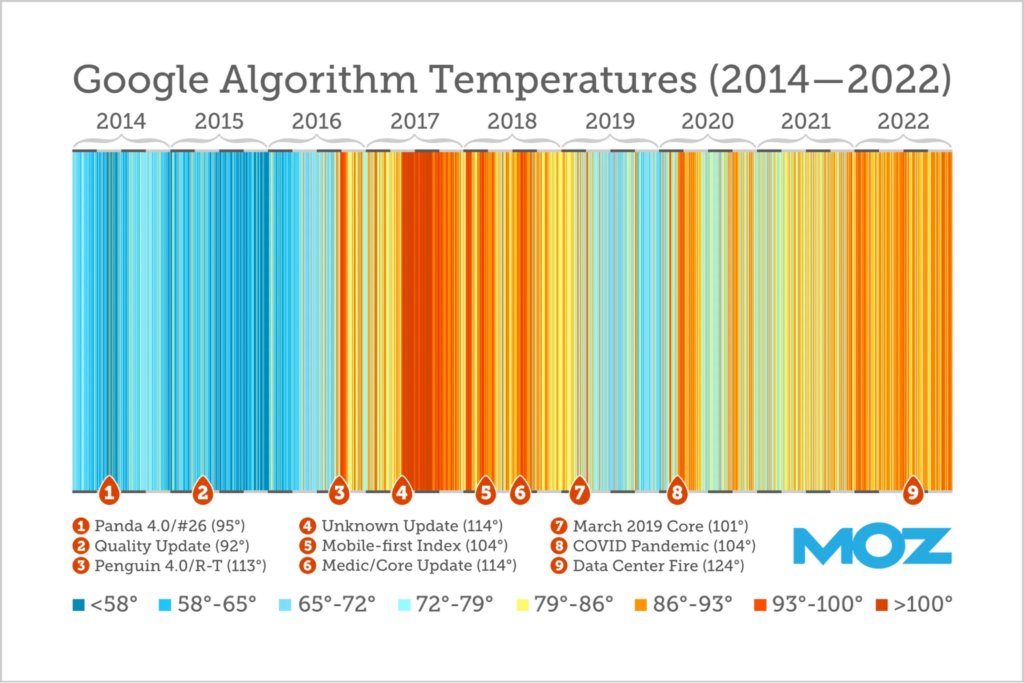

Many of them began to rank again, even sites that did not disavow. Now, I had all sorts of theories at the time on what happened with Penguin, but there was a very important piece of information that I didn’t know. I only recently found it out when Dr. Pete Meyers from Moz published an article on Google’s updates over the years.

Dr. Pete is a data scientist. He’s a really cool guy. He put together this heat map of Google algorithm updates. If you follow my tweets, you’ll see me say things like, oh, the SEMrush Sensor or MozCast (which is Dr. Pete’s tool) is high today. That means there’s a lot of shake-up in the search results, so Google is probably doing some type of update to its algorithm that’s significant enough to impact a lot of sites.

And lately, these tools have been showing wild turbulence almost every day, but that wasn’t always the case.

Well, if you look at this heat map, where red means there is a lot more algorithm turbulence, there’s a really obvious point where it starts to turn orange, and then it’s consistently orange or red from that point on. You can probably see where I’m going with this story…the date where things shifted to a state of constant turbulence was in late September of 2016, which was when Penguin 4.0 ran. So now it makes sense to me.

Lately, we’ve had a few statements from Google telling us that they’ve been what they call an AI-first company for 7 years now. 7 years ago was 2016.

So, I suspect that Penguin 4.0 was actually the introduction of new AI systems into Google’s algorithms. The more they can rely on AI systems to determine which pages are high quality and helpful, the less they need to rely on links as a proxy. Instead of saying, “This page has lots of links pointing to it, therefore it must be one that people find helpful,” they can now say, “This content has several characteristics that align with our model that is designed to determine whether content is helpful.”

Patterns Show Google Rewards More Helpful Content

Now, each time Google ran an update, whether it was an announced update like a Core Update or a Product Reviews update, or, more recently, a Helpful Content update, I analyzed sites that were struggling. I’d look at content that Google had elevated and try to find patterns in what had changed.

One of these updates…not announced as an update, but a day where many sites saw wild changes in rankings was February 7th, 2017. Someone from Google told me this actually was an unannounced Panda update, but we had no official word from Google on what happened. You might remember us talking about “Fred” in March of 2017. I think “Fred” started a month before that.

When I analyzed content impacted on this day, the pattern that I noticed is that the sites that Google promoted with this update had author bios where the authors had the type of expertise users might find helpful, as described in Google’s Panda questions.

So, I did a review in early 2018 for a site that had suffered a catastrophic drop in February 2017. I shared my thoughts on what Google’s algorithms were rewarding, and they took that advice seriously. They went out and hired expert authors, and they did a bunch of other things too, like improving how they used references, and other things that possibly improved how well they aligned with Google’s quality criteria. I gave them a document with over 100 pages of thoughts on how competitors better demonstrated E-A-T and how they could make changes to improve.

This certainly wasn’t an inexpensive decision for them, but it paid off tremendously with the August 1st, 2018 Medic update. They had incredible increases in very profitable keywords.

For the last several years with almost every single significant Google update whether it’s announced or not, there’s just one super obvious pattern. It’s not author bios. It’s not reputation (although that can be a factor). But there is one specific obvious difference when I look at pages that Google’s algorithms started to prefer and compare those to the client I am analyzing.

I’ve now built a whole system where I can determine which keywords declined in an update and find examples of competitor pages that Google clearly elevated.

It’s amazing how many times we see one page just shoot out of nowhere to start outranking the site I’m analyzing, often coinciding with the day of an update. There’s one really obvious thing, and that’s that the page Google elevated is a better page for meeting the needs of someone who searched for that query.

The page is more helpful.

So, let’s talk about how Google identifies which content is likely to be helpful.

The Helpful Content System

Let’s talk about the Helpful Content system.

I recently wrote a massive article on this system. It took me over 6 months to research and write. My hope is that this article and this podcast episode help shift your mindset away from focusing mostly on producing pages that are technically sound, load quickly and make good use of internal links…I mean, those are all good things, but I’d love to see more focus on producing content that actually is the best option for Google to put in front of someone.

Google’s Helpful Content system uses AI to reward sites with consistently helpful content, and demote sites whose content isn’t usually the best option to put in front of searchers. It’s a hard concept for us to grasp because it doesn’t fit into what we have been taught about how Google’s algorithms work. As SEOs we understand PageRank and for many years PageRank was at the core of Google’s algorithms.

Here’s what Google says in their documentation on the many systems that are used in ranking:

Link analysis systems and PageRank

We have various systems that understand how pages link to each other as a way to determine what pages are about and which might be most helpful in response to a query. Among these is PageRank, one of our core ranking systems used when Google first launched. Those curious can learn more by reading the original PageRank research paper and patent. How PageRank works has evolved a lot since then, and it continues to be part of our core ranking systems.

PageRank is a component of E-A-T. Links are still signals Google can use when assessing whether a page is likely to be helpful. For many years, links were the best signal Google had. If you write a new article and a bunch of people link to it, especially if those links are from relevant sources, or better yet, authoritative and relevant sources, then there is a really good chance that people are going to find that page helpful.

But now Google has the Helpful Content system. It generates a signal Google can use that indicates whether your content is likely to be helpful or not. If your traffic has been declining and you can’t figure out why, there’s a good chance this system is to blame.

When the Helpful Content system first launched in August of 2022, we didn’t see a massive impact. A few months later, especially in November, I was finding that every site that came to me for a traffic drop assessment was likely impacted by this system. I saw so many sites with ongoing declines in traffic that started either in the first week of November or specifically on November 15. And in every single case, when I looked at which content suffered and compared it to content that started to thrive, it was really obvious that the competitor’s content was more helpful.

Case Study Examples of Helpful Content

Let me give you some examples. In one case, the website offered online flower delivery. The landing page I was assessing started with a paragraph that said something like, “Are you looking for online flower delivery? We offer blah, blah, blah…”: a bunch of words that humans are very likely to skip over, because the user came to that page not to learn what online flower delivery is…but to order some flowers.

Why do we put words like that on our pages? It’s for search engines! Those words are part of why this site used to rank well: they made the page look relevant to the topic.

The page that started outranking my client had, at the very top of the page, a form you could use to order flowers. That was the part of the content that met the searcher’s need. It was more helpful.

In another case, a page was about the fastest WordPress themes. Again, the article started with words like, “The faster your website runs, the better…A fast theme offers this and that…” Then, a few scrolls down the page, came their recommendations for some fast themes.

The page that outranked them had a little bit of the same type of verbiage at the top, but within the first scroll of content they had a chart that listed the themes they recommended and a brief explanation as to why.

I wouldn’t say they were the absolute best option for searchers…but their page was likely more helpful than the one on the site I was reviewing. Also, this site actually sells WordPress themes…which gives them first-hand experience. One of Google’s helpful content criteria is, again, “Do you have an existing audience for your business or site that would find the content useful?”

For the site I analyzed, this will be hard to overcome. They only write about WordPress themes, so to outrank a site that actually sells them and has real-life experience with real customers, they are going to need to find ways to make their content substantially more valuable and helpful than what currently exists online, and that is no easy task.

So, how could an algorithm know that? It’s not like Google says, “OK, there are 3 points for having a table and maybe 2 points because the page loads fast…” No…instead, they use machine learning, which is a form of AI, to build a model that is really good at looking at a whole bunch of characteristics of your content and then predicting whether your site’s pages are likely to be helpful.

Machine Learning Models Predict Helpful Content

Machine learning works by training a mathematical model on many examples of helpful content and many examples of unhelpful content. The model learns to recognize the characteristics that tend to make content helpful or unhelpful, and in doing so, it can predict whether any content on the web is likely to be helpful.

And it turns out that a couple of years ago, the Quality Rater Guidelines were modified to add a line saying that raters will be providing Google with examples of helpful and unhelpful results. If you want more info on that, I wrote an article this week on how the raters are used.

There is a fantastic article by Stephen Wolfram that explains how neural networks work…He’s talking about ChatGPT in his article…I’ve linked to it in the show notes. It blew my mind when I realized it’s all just math. The very same day I read this article, I had to help my 9th grader with her math homework. She was studying linear equations and learning about the equation y=mx+b. I’m not going to explain the math now, but this equation can be used to determine whether any particular data point lies on a line that you’ve graphed out. In that sense, y=mx+b is a simple mathematical model that can predict whether a data point aligns with a specific line.

With machine learning, Google can create much more complex mathematical models with many more variables than just x and y. But the concept is the same: the Helpful Content system finds the mathematical equation that best predicts whether content is aligned with what a user is likely to find helpful.
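Written out as equations, the y=mx+b analogy looks roughly like this. This is a generic illustration, not Google’s published model: a point (x_0, y_0) lies on the line exactly when y_0 = m x_0 + b, and a model with many variables replaces the single mx term with a weighted sum over hypothetical content features x_1 through x_n, with weights w_1 through w_n learned from training examples. A classifier commonly passes that sum through the logistic sigmoid σ to turn it into a probability.

```latex
\begin{aligned}
&\text{Point on the line:} && y_0 = m x_0 + b \\
&\text{Many variables:} && z = b + \sum_{i=1}^{n} w_i x_i \\
&\text{Probability of ``helpful'':} && P(\text{helpful}) = \sigma(z) = \frac{1}{1 + e^{-z}}
\end{aligned}
```

Neural networks like the ones Wolfram describes stack many of these weighted sums, each followed by a nonlinear function, which is what lets them model far more complex patterns than a single line.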

If this model finds that content on your site is generally not aligned with what Google wants the system to reward, Google can apply a classification that labels your site as unhelpful. And that makes it more difficult for any of your content to rank well.
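Here’s a minimal sketch in Python of what a site-level classification could look like. Google has said the Helpful Content signal is site-wide and that sites with relatively high amounts of unhelpful content overall are less likely to perform well, but it hasn’t published how the classification is computed. The 50% threshold, the 0.5 page cutoff, the 0.7 dampening multiplier, the `Page` fields and the function names below are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical values: Google has not published a threshold or a penalty size.
UNHELPFUL_SHARE_THRESHOLD = 0.5  # share of unhelpful pages that triggers the site flag
PAGE_HELPFUL_CUTOFF = 0.5        # below this probability, a page counts as unhelpful
SITE_WIDE_DAMPENING = 0.7        # multiplier applied to every page on a flagged site


@dataclass
class Page:
    url: str
    relevance: float  # stand-in for all other ranking signals
    p_helpful: float  # probability from an assumed page-level model


def site_is_classified_unhelpful(pages: list[Page]) -> bool:
    """Flag the site when more than the threshold share of its pages look unhelpful."""
    if not pages:
        return False
    unhelpful = sum(1 for p in pages if p.p_helpful < PAGE_HELPFUL_CUTOFF)
    return unhelpful / len(pages) > UNHELPFUL_SHARE_THRESHOLD


def adjusted_scores(pages: list[Page]) -> dict[str, float]:
    """Apply the site-wide dampening to every page on the site, helpful or not."""
    factor = SITE_WIDE_DAMPENING if site_is_classified_unhelpful(pages) else 1.0
    return {p.url: p.relevance * factor for p in pages}


if __name__ == "__main__":
    site = [
        Page("/fast-themes", relevance=0.9, p_helpful=0.8),
        Page("/what-is-a-theme", relevance=0.6, p_helpful=0.2),
        Page("/theme-intro", relevance=0.5, p_helpful=0.3),
    ]
    print(site_is_classified_unhelpful(site))
    print(adjusted_scores(site))
```

The design choice worth noticing is that once the site is flagged, the dampening applies to every page, including the genuinely helpful one, which mirrors why a site-wide classification makes it harder for any of your content to rank.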

OK…still with me? That’s a lot, and I think I’ll be unpacking it for some time as the podcast continues.

Here’s what we need to know. Google tells us, and this is a quote from Google, “Search runs on hundreds of algorithms and machine learning models, and we’re able to improve it when our systems, new and old, can play well together. Each algorithm and model has a specialized role, and they trigger at different times and in distinct combinations to help deliver the most helpful results.”

Ultimately…we don’t need to understand the math Google is using because we already know what the model is built to reward – the helpful content criteria Google has given us!

I’m sure you have a lot of questions about this system. I’ve explained much more in my article called Google’s Helpful Content and other AI systems May be Impacting your site’s visibility. And I’ve linked to it below.

If you’ve been impacted by a Helpful Content Classification

If you think you’re impacted by this system, there’s a whole bunch more in my Helpful Content workbook. There are many exercises in the workbook to help you determine what is causing your helpful content suppression.

I want to thank you again for your patience in waiting for the podcast to resume. I put it on hold so I could focus more on understanding and communicating what’s important. And I really want to thank those of you who reached out to tell me that you missed the podcast. I’ve missed it too!

I’m not sure I’ll be back on the same regular cadence as before, but I do plan to put out more content. I also have another project I’ll be sharing more about soon: a community where we can discuss these things further.

Thanks so much for listening, and I wish you the best of luck with your rankings!

More from Marie:

Purchase my workbook to help you assess your content and improve its helpfulness:

https://www.mariehaynes.com/product/creating-helpful-content-workbook/

Have me assess your site:

https://www.mariehaynes.com/work-with-marie/

Book time on my calendar to brainstorm with me about your site:

https://calendly.com/mariehaynes/brainstorming-with-marie?

Links mentioned in this episode:

https://www.mariehaynes.com/newsletter/episode-295/ (Latest episode of newsletter where we discuss Project Tailwind/NotebookLM)

https://developers.google.com/search/docs/fundamentals/creating-helpful-content (Google’s Guidance on creating helpful content)

https://searchengineland.com/whats-now-next-with-google-search-smx-next-keynote-389628 (Barry Schwartz and HJ Kim interview)

https://developers.google.com/search/blog/2011/05/more-guidance-on-building-high-quality (Panda questions)

https://www.mariehaynes.com/google-ai-systems/ (My article on how the helpful content system works)

https://moz.com/blog/nine-years-of-google-algorithm (Dr. Pete Meyers’ heatmap showing Penguin algo turbulence)

https://writings.stephenwolfram.com/2023/02/what-is-chatgpt-doing-and-why-does-it-work/ (Understanding the math behind systems like the helpful content system)

https://www.mariehaynes.com/what-we-know-about-googles-quality-raters/ (How raters rate sites)