The inner workings of Google's search algorithm remain shrouded in secrecy, yet one important piece of the ranking puzzle involves an army of over 16,000 contractors known as quality raters. Just what do these raters evaluate when they visit websites, and how much influence do their judgements have over search rankings?

This article will demystify the role of quality raters by exploring what we know so far about who they are, what guidelines they follow, and whether their brief visits could impact your site's performance in SERPs.

In my recent article explaining Google's helpful content system, I discussed how Google uses ratings from quality raters to provide machine learning systems with examples of helpful and unhelpful search results. Since writing that article, I've received numerous questions about these mysterious quality raters. This motivated me to dig deeper into who they are and how they influence search rankings.

Who are Google’s Quality Raters?

Google contracts an army of over 16,000 quality raters around the world tasked with evaluating search results. These raters assess pages based on Google's in-depth Quality Rater Guidelines (QRG), which spell out what constitutes high-quality results.

Raters come from all around the world with a diversity of ages, genders, races, religions and political affiliations. If you want to learn even more about what raters do and how to become one, I’d recommend this fascinating read from Cyrus Shepard about how he became a quality rater. It was difficult to pass the test on the QRG!

What do the quality raters do?

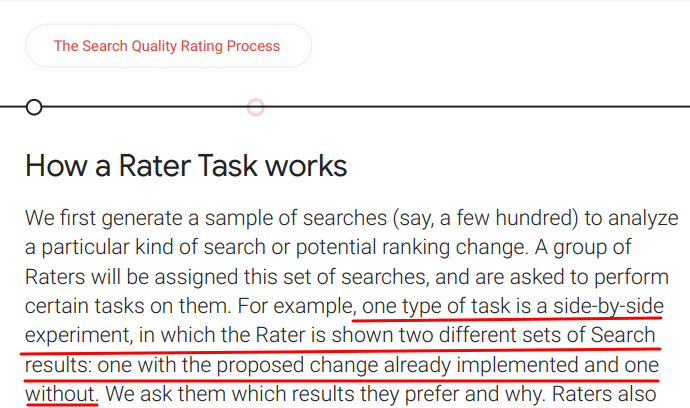

As described in Google's overview, raters are given A/B tests pitting two sets of search results against each other. This helps Google judge whether the changes it is introducing to its algorithms actually improve results.

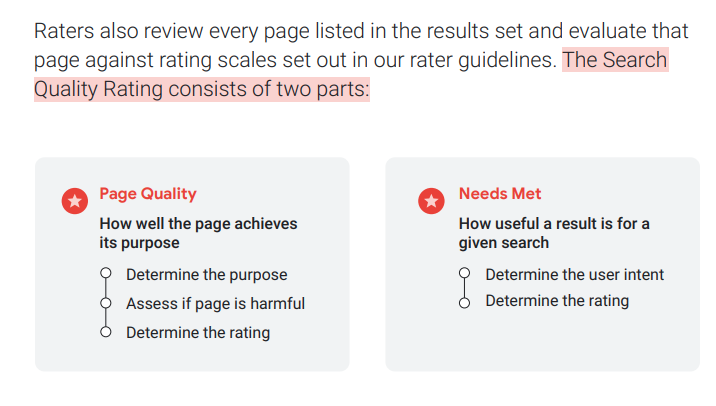

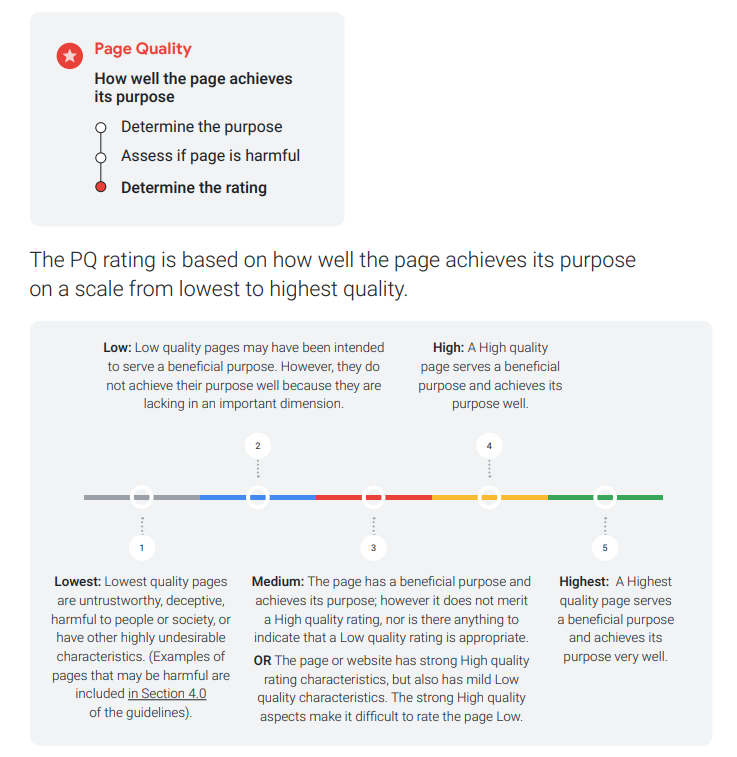

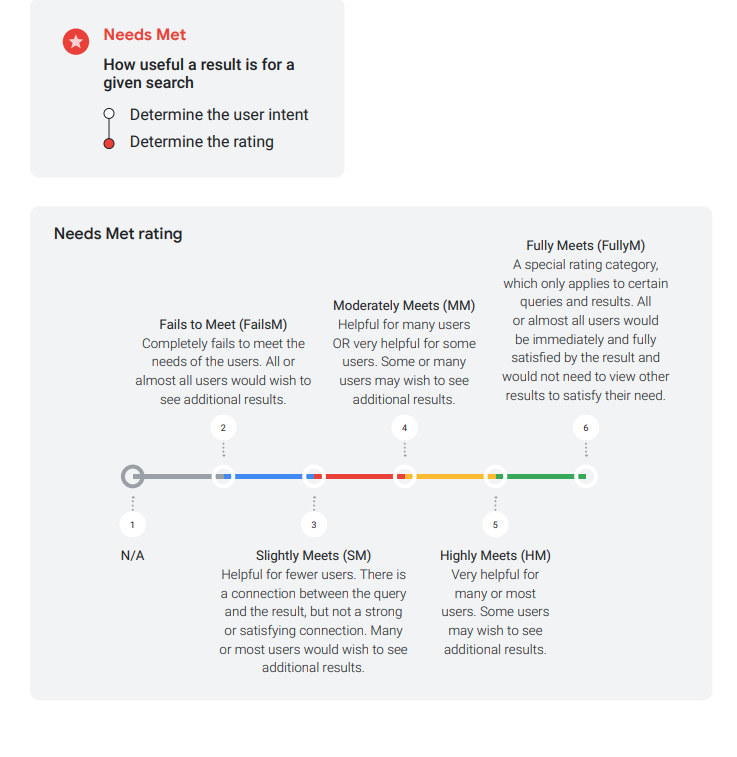

Raters also score each page in the search results on scales measuring page quality (E-E-A-T) and how fully the page meets the searcher's needs.

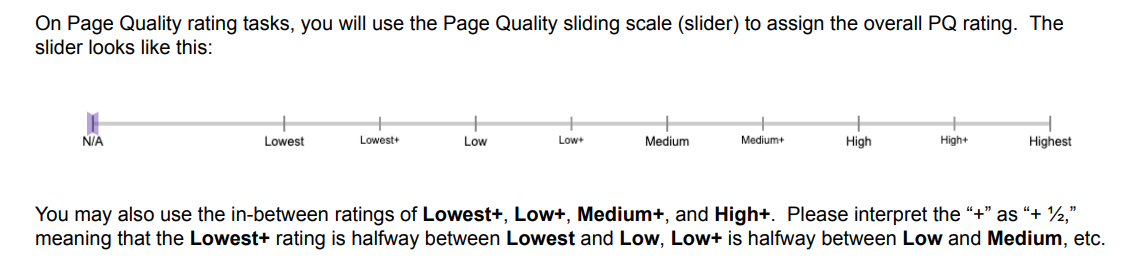

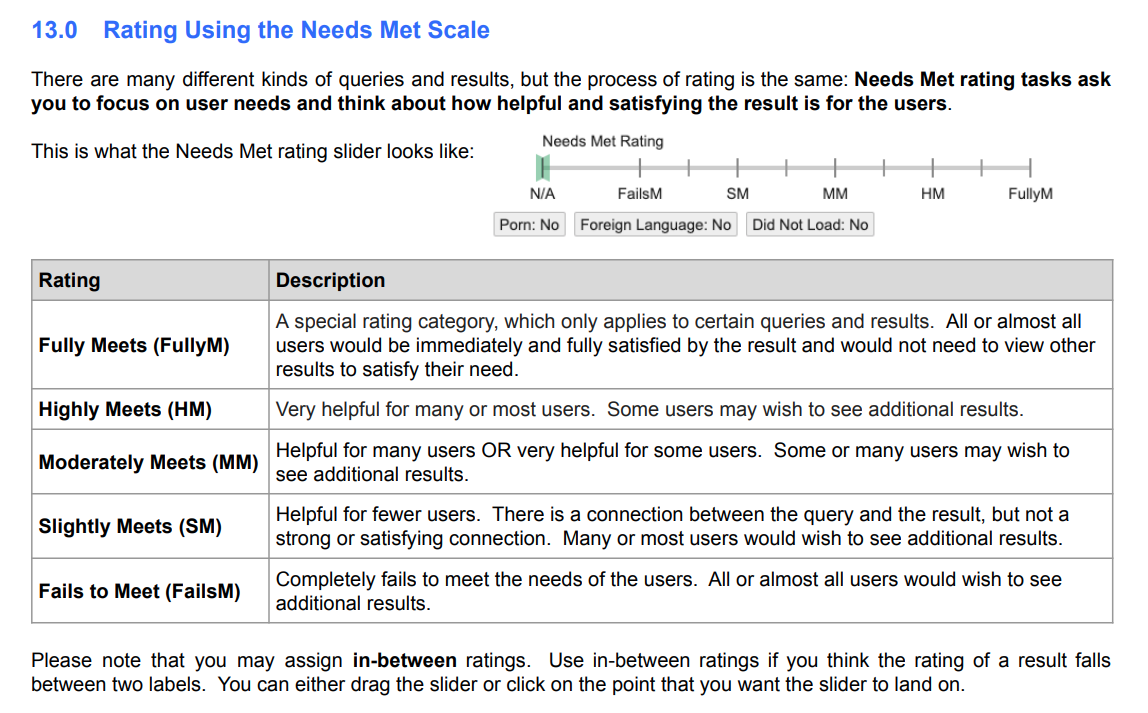

The QRG shows the sliding scale the raters use to make these determinations of Page Quality and Needs Met:

These ratings are used by Google to refine machine learning algorithms designed to surface pages that users are likely to find helpful. Those systems learn by looking at many examples of results that a searcher would find helpful and also ones that are not helpful. Again, I’ve written a whole bunch more on this in my article about the helpful content system.

Did Google Deny Using Quality Raters For Machine Learning?

In the past, Google explicitly denied that data from quality raters was used to train its machine learning ranking algorithms. However, there are signs this may have changed over the past few years.

Google's Search Liaison Danny Sullivan stated in 2018 that rater data does not directly influence rankings. He compared it to diners providing feedback cards to a restaurant. Also in 2018, Jennifer Slegg directly asked Danny whether Google uses quality rater data for machine learning.

His reply was “We don’t use it that way.”

That tweet has since been deleted.

Jump ahead a few years, and Google's statements indicate they likely do leverage rater signals as part of the data used to train ranking algorithms.

In 2021, Google’s page on how search works told us they use machine learning in their ranking systems, saying,

“Beyond looking at keywords, our systems also analyze if content is relevant to a query in other ways. We also use aggregate and anonymized interaction data to assess whether search results are relevant to queries. We transform that data into signals that help our machine-learned systems better estimate relevance.”

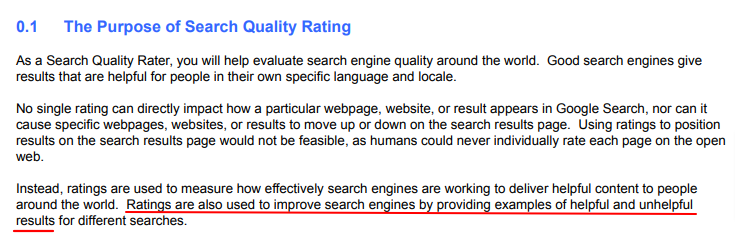

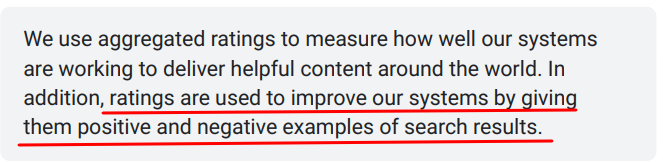

In July of 2022 the QRG changed. In addition to saying that ratings from the raters were used to measure how well search engine algorithms are performing, they added, “ratings are also used to improve search engines by providing examples of helpful and unhelpful results for different searches.”

This is reiterated in Google’s documentation on the QRG:

You can read more about Google’s systems in the following Google documents:

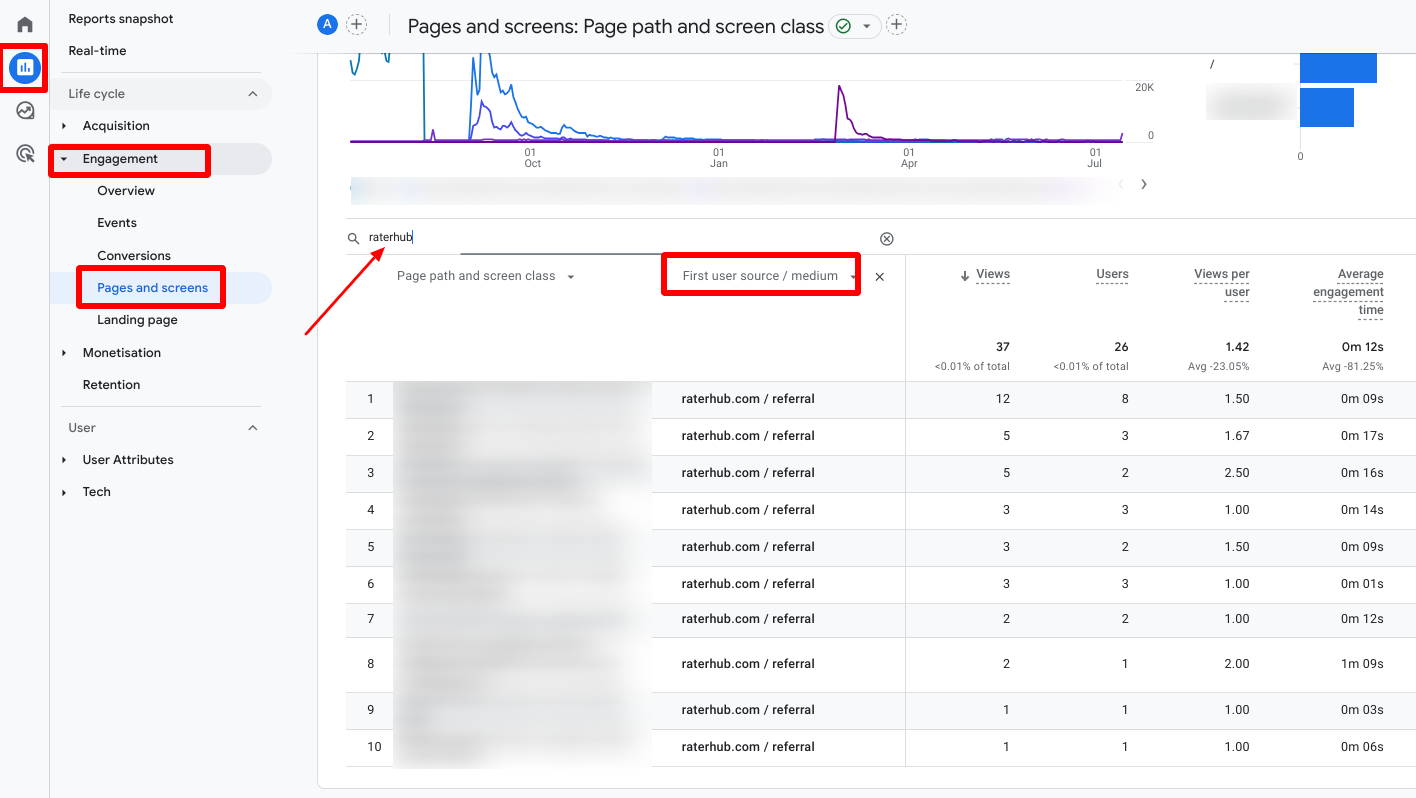

How to determine if a quality rater has visited your site

You may spot visits from quality raters in your Google Analytics data. To check for them in GA4, go to Reports → Engagement → Pages and screens, then add Session source / medium as a secondary dimension. Visits from raterhub.com are likely raters.

(Note: If you don’t know how to do this in GA4, you’re not alone. I had to ask Brie Anderson. I highly recommend her GA4 course.)
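If you'd rather check an exported report than click through the interface, the same filter can be sketched in a few lines of Python. This is only an illustration: the column names `page_path`, `source_medium` and `views` are hypothetical, and a real GA4 export will use different headers that you would need to map yourself.

```python
import csv
import io
from collections import Counter

# Referrer domain mentioned above as the likely source of rater visits.
RATER_DOMAIN = "raterhub.com"


def rater_pageviews(csv_text):
    """Sum views per page for rows whose source/medium mentions raterhub.com.

    Assumes hypothetical columns: page_path, source_medium, views.
    """
    totals = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        source = row["source_medium"].strip().lower()
        if RATER_DOMAIN in source:
            totals[row["page_path"]] += int(row["views"])
    return dict(totals)


# Made-up sample rows, only to show the expected input shape.
sample = """page_path,source_medium,views
/about,raterhub.com / referral,3
/guide,google / organic,120
/guide,raterhub.com / referral,2
/about,raterhub.com / referral,1
"""

for path, views in sorted(rater_pageviews(sample).items()):
    print(path, views)
```

The result is simply a list of pages that likely received rater visits and how many views each one got, which is the same information the GA4 report gives you.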

How does a rater assess quality and needs met?

When assessing page quality, raters try to assume the mindset of a typical searcher for that query. Within seconds, they make subjective judgments about whether the page appears helpful, useful, and likely to satisfy intent.

Raters may consider high-level signals like site design, ease of finding main content, level of distracting ads and page speed. However, they do not do an in-depth analysis of page content and accuracy.

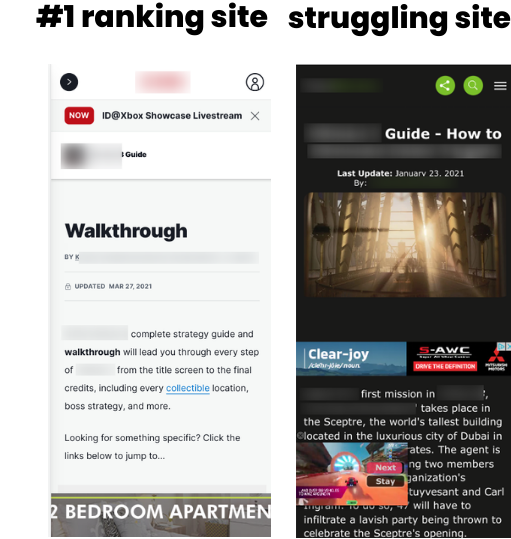

Here’s how I picture this happening. Let’s speculate on one of these queries the raters were told to evaluate. As the page pictured below contains a walkthrough for a video game, it may have been "[video game name] walkthrough" or "[video game name] guide".

The site on the left ranks #1 for both of these. The site on the right has been slipping in rankings for a while now.

If you had only a few seconds to decide, which of these would you consider to be the page that’s more likely to meet the needs of this searcher?

The site on the left certainly looks more likely to be helpful for someone who is looking for a game walkthrough. The page on the right is a good site, but Google’s guidance on quality content mentions a few things to consider. In this case, I expect the most important parts come from Google’s documentation on providing a good page experience, which is all a part of helpfulness.

- Does content display well for mobile devices when viewed on them?

- Does the content lack an excessive amount of ads that distract from or interfere with the main content?

- How easily can visitors navigate to or locate the main content of your pages?

While popover ads can be acceptable, I’m not a fan of ads that immediately cover the content searchers landed on the page to read.

More important, though, is the last question I highlighted. How easily can visitors navigate to the main content?

What the QRG says about “Main Content”

The raters are instructed to evaluate whether the main content on a page is easy to find.

According to the QRG, the main content is any part of the page that directly helps the page achieve its purpose, and also, the page title.

The main content is the part or parts of the page that answer the question that prompted the searcher to pick up their phone or open their computer and do a search.

Raters look for whether visitors can quickly navigate to the portion of the page most vital for fulfilling the user intent. Pages that bury or hide the main content will get low ratings on "Needs Met" and other QRG criteria.

How the Raters Assess Page Quality and Needs Met

Raters are asked to rate pages on sliding scales as we discussed above.

In terms of Page Quality, I would say that the page we are analyzing would be considered “medium” or “medium+” on this scale the raters are given:

In terms of needs met, I suspect this content would be considered “Moderately Meets”:

The page is helpful for many users, but some users may wish to see additional results.

The site pictured on the right has decently helpful content. But, when I reviewed this site, for most keywords it is clear that other pages on the internet are better at quickly communicating to the searcher that their needs will be met, and then fulfilling the reason why they searched.

It can be insightful to look at pages raters assessed on your site. But those individual pages are just one piece of the puzzle. For systems like the helpful content system, improving quality across all of your content is needed, not just pages raters saw.

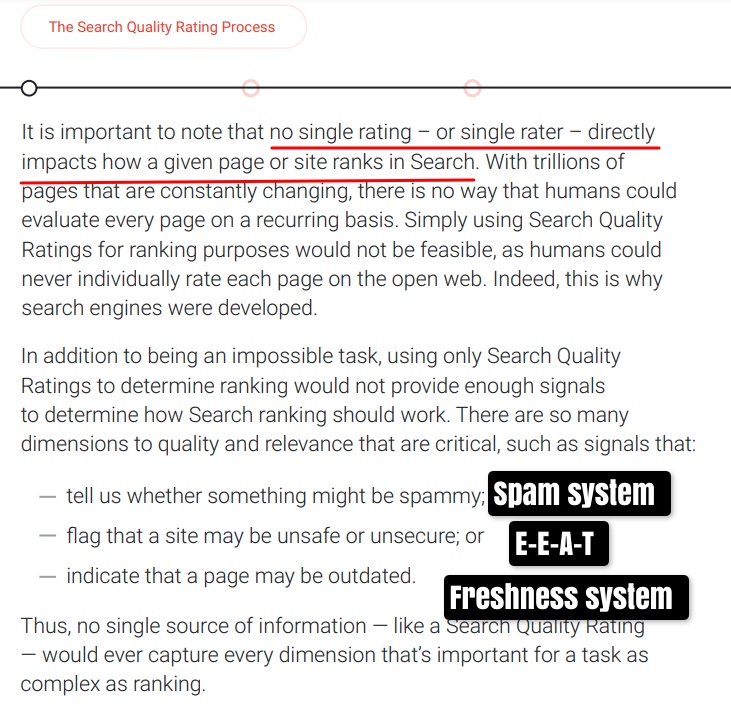

Can quality raters impact your rankings?

Google states that ratings from quality raters do not directly influence search rankings. I've added a couple of labels to Google's documentation below:

If traffic declines after a rater visit, the visit itself is not the cause. Google's algorithms are shaped by machine learning across millions of data points - not just one rater assessing one page.

Quality raters are valuable contributors who provide Google with real-world feedback to improve algorithms over time. However, their brief subjective ratings are just one of many signals that influence Google's overall ranking systems.

Focus on optimizing for overall site quality and user experience, not hyper-analyzing limited rater feedback!

Summary

Here's the tl;dr for this article:

- Google employs over 16,000 quality raters worldwide to evaluate search results based on the Quality Rater Guidelines (QRG).

- Raters conduct A/B tests on search results and provide feedback on which set better satisfies the query.

- They also rate individual pages on scales for qualities like expertise, trustworthiness, and meeting the user's needs.

- Ratings help train machine learning systems to identify high quality, helpful content.

- In 2018, Google denied using rater data for ML, but statements since suggest they now leverage it.

- Raters make quick, subjective judgments of results based on high-level factors such as the ease of finding main content.

- Focus of optimization should be overall site quality and UX, not any one page visited by raters.

- While raters shape algorithms, their ratings are one of many signals. Traffic declines are not directly caused by rater visits.

- Finding rater visits in GA can provide info on pages they assessed, but limited value otherwise.

If you’ve found this information useful, may I recommend two resources I have written:

My workbook to help you understand user intent and create helpful content published July 2023, based on understanding the QRG and Google’s guidance on content quality. $150

My guide to the Quality Rater’s Guidelines. It used to be $99 but it’s reduced to $20 as I have not updated it in a few years. The core principles discussed in this book have not changed. It still sells regularly and really should help you understand E-A-T and site quality.

Also, you may like my newsletter which I have written for many years now to keep you up to date on the most important happenings in search.

Comments

Another helpful post Marie – thanks 🙂

Very helpful post, Marie, thanks.

I received 5 visitors from India from raterhub.com totaling 27 pageviews. These visitors opened the Terms of Use, Privacy Policy, About, and Contact pages. I don’t know what their objective is, if this will somehow impact the site’s indexing.