There has been a lot of news lately about Google’s efforts to demote Russian propaganda so that it does not rank well in the search results, and more specifically, in Google News results. When Google chairman Eric Schmidt was recently asked about this issue, he said, “We’re working on detecting this kind of scenario you’re describing and deranking those kinds of sites.” He specifically called out RT (formerly Russia Today) and Sputnik News.

However, he also said that Google did not want to specifically censor these sites. “We don’t want to ban the sites. That’s not how we operate,” Schmidt said. “I am strongly not in favour of censorship. I am very strongly in favour of ranking. It’s what we do.”

What many are asking now is how Google will accomplish this task.

How does Google demote websites?

There are two ways in which Google can demote a website. One is a manual demotion. The second is an algorithmic one.

Manual demotions

A manual demotion comes in the form of a manual action from Google. If your website is registered with Google Search Console and you receive a manual action, you will receive an email from Google along with a warning in your Google Search Console dashboard.

Here is an example of a manual warning for a site that violated Google’s Webmaster Guidelines by purchasing links in order to manipulate their Google rankings:

As far as we know, there is no manual action that Google levies in terms of “fake news”.

Rather, for sites that are deemed to be publishing fake news, Google prefers to deal with these algorithmically. This means that if a site is demoted for this reason, the publisher will not receive any notification via email or in their Google Search Console about the demotion. This is known as an algorithmic demotion.

Algorithmic demotions

Google has a number of different algorithms that help determine website quality. The Panda algorithm, released in 2011, was a major step forward in helping to surface high quality websites by demoting sites that had significant quality issues. Google’s core algorithm is also continually changing in an effort to get better and better at surfacing high quality sites.

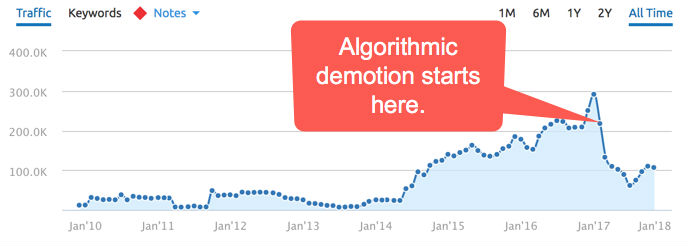

Here is an example of a site that suffered an algorithmic demotion. These traffic estimates, from SEMRush.com, show that the site’s traffic which it received from Google started to drop dramatically in February of 2017:

When a site has an algorithmic demotion, it is almost as if there is a heavy anchor holding the site down and impeding its ability to rank well for many search terms.

How does Google algorithmically determine quality?

We have seen many instances where a website has been demoted because Google’s algorithms deemed it to be a low quality site. But how does Google determine quality?

In February of 2011, Google announced a change to their algorithms which eventually became known as the Panda algorithm. In Google’s announcement they said the following:

“This update is designed to reduce rankings for low-quality sites—sites which are low-value add for users, copy content from other websites or sites that are just not very useful. At the same time, it will provide better rankings for high-quality sites—sites with original content and information such as research, in-depth reports, thoughtful analysis and so on.”

The Panda algorithm did a decent job of reducing rankings for many ultra low quality sites. But Google’s goal is to get much better at accurately determining what is high quality. They are accomplishing this by training an army of humans to assess quality using a document called the Quality Raters’ Guidelines.

What do the Quality Raters’ Guidelines have to do with Russian propaganda?

Google has contracts with over 10,000 people who are called quality raters. These people are given the Quality Raters’ Guidelines handbook to learn how to assess which websites are high or low quality sites. This handbook is approximately 160 pages long and filled with information on what Google considers to be a good website. (Note: I’ve just released a book on how we use the information in the Quality Raters’ Guidelines to help websites perform better on Google searches.)

Each of the quality raters is assigned a large number of websites to review in light of these guidelines. Once they have made their assessments, Google’s engineers take these opinions and tweak Google’s algorithms so that they do a better job at surfacing only information that is good and trustworthy. It is believed that much of this information is used by machine learning (ML) algorithms. Human beings determine quality, and then this information is used by the ML algorithms to make assumptions about every website in Google’s index.
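To make the idea of "humans label, machines generalize" a little more concrete, here is a minimal sketch in Python. It is purely illustrative: the three features (whether a site cites sources, names an author, and has an editorial policy), the ratings, and the nearest-centroid method are all my own assumptions, and nothing here reflects how Google's systems actually work.

```python
# Hypothetical illustration only: the features, labels, and method are
# invented for this example and do not describe Google's actual systems.

def centroid(rows):
    """Average each feature column across a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def train(rated_sites):
    """Build one centroid per human rater label ('high' or 'low')."""
    by_label = {}
    for features, label in rated_sites:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(rows) for label, rows in by_label.items()}

def predict(model, features):
    """Assign the label whose centroid is closest (squared distance)."""
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(features, c))
    return min(model, key=lambda label: dist(model[label]))

# Assumed features: (cites_sources, names_author, has_editorial_policy)
rated_sites = [
    ((1, 1, 1), "high"),
    ((1, 1, 0), "high"),
    ((0, 0, 0), "low"),
    ((0, 1, 0), "low"),
]

model = train(rated_sites)

# A site that no human has rated still gets a label from the model.
unrated_site = (1, 0, 1)
prediction = predict(model, unrated_site)
```

The point of the sketch is that the model's verdict on the unrated site depends entirely on the labels the human raters supplied. Change the ratings, and the prediction for every other site can change with them.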

In March of 2017, Google made changes to the Quality Raters’ Guidelines to give the raters more instructions on how to detect fake news. Here is a section that was added to the guidelines:

“High quality news articles should contain factually accurate content presented in a way that helps users achieve a better understanding of events. Established editorial policies and review processes are typically held by high quality news sources”

Google also added a section to say that news sites are to be considered YMYL (Your Money or Your Life) sites, meaning that they would be held to a higher level of quality:

“News articles or public/official information pages: webpages which are important for maintaining an informed citizenry, including information about local/state/national government processes, people, and laws; disaster response services; government programs and social services; news about important topics such as international events, business, politics, science, and technology.”

And finally, they added a section that states that a news publisher’s overall reputation can be used in order to help determine whether their work is of high quality:

“A website’s reputation can also help you understand what a website is best known for, and as a result how well it accomplishes its purpose. For example, newspapers may be known for high quality, independent investigative reporting while satire websites may be known for their humor.”

In April of 2017, Google announced that the issues the quality raters had been assessing were now factored into their algorithms. Their announcement said, “Last month, we updated our Search Quality Rater Guidelines to provide more detailed examples of low-quality webpages for raters to appropriately flag, which can include misleading information, unexpected offensive results, hoaxes and unsupported conspiracy theories.”

The SEO community named this update the “Owl Update”, saying that the algorithm was evolving to detect fake news, heavily biased content, rumors, conspiracies and myths.

As such, the algorithms are not likely to specifically censor websites like RT and Sputnik News. Rather, the goal is to accurately determine whether or not the information published by these sites (and all news sites) is likely to be true. If the algorithm determines that particular sites are more likely to produce unsubstantiated news, hoaxes, conspiracy theories, etc., then those sites can see not only demotions in rankings for those stories, but ranking drops across all pages of their site.

Examples of low quality news sites from the QRG

The main question that I have is whether Google will be teaching their quality raters to detect “fake news” that is specifically from a Russian source. Throughout the guidelines there are examples of sites that are considered low quality sites. The current copy of the guidelines that is available online has no reference at all to Russia or Russian news sites.

The guidelines do teach the raters to rate the following as low quality:

“A webpage or website looks like a news source or information page, but in fact has articles with factually inaccurate information to manipulate users in order to benefit a person, business, government, or other organization politically, monetarily, or otherwise.”

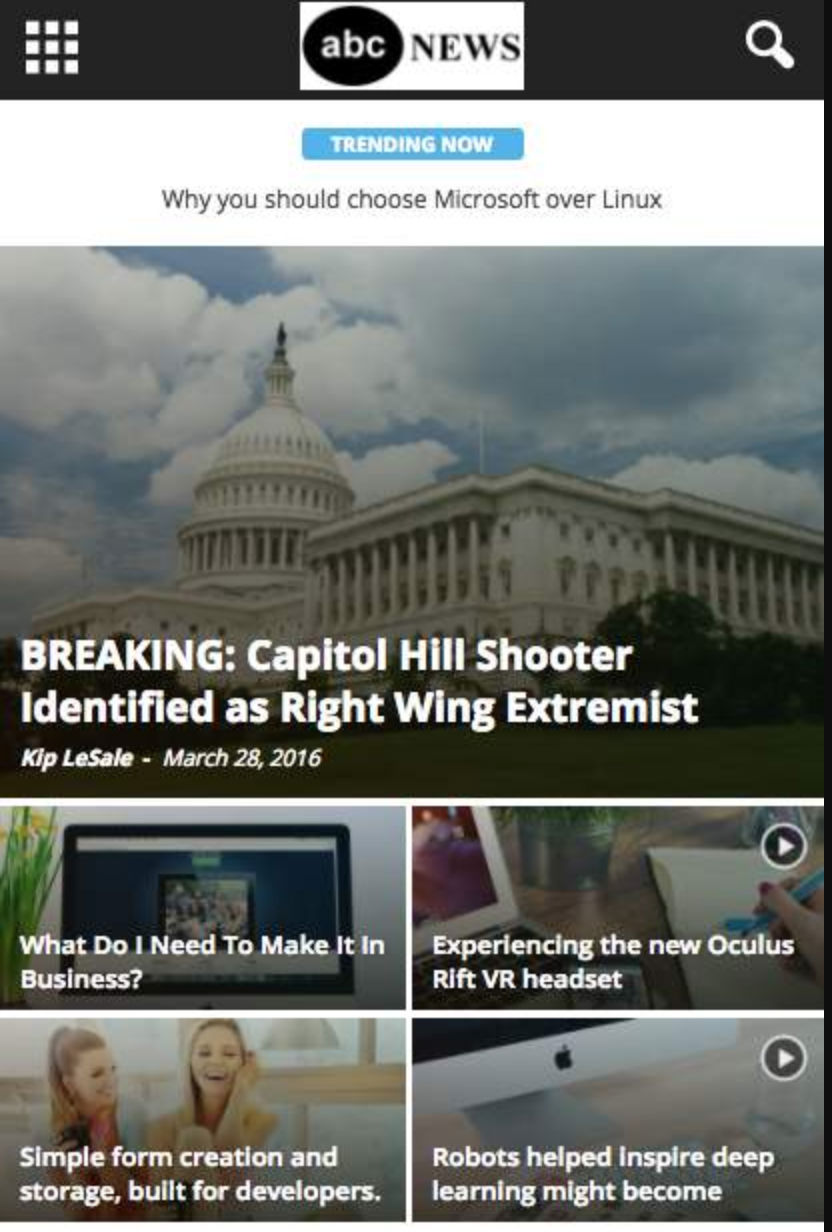

They also give specific examples of low quality news sites. Here is an example that is given of a site that is pretending to be ABC News. Quality raters are instructed to give this site the lowest possible rating:

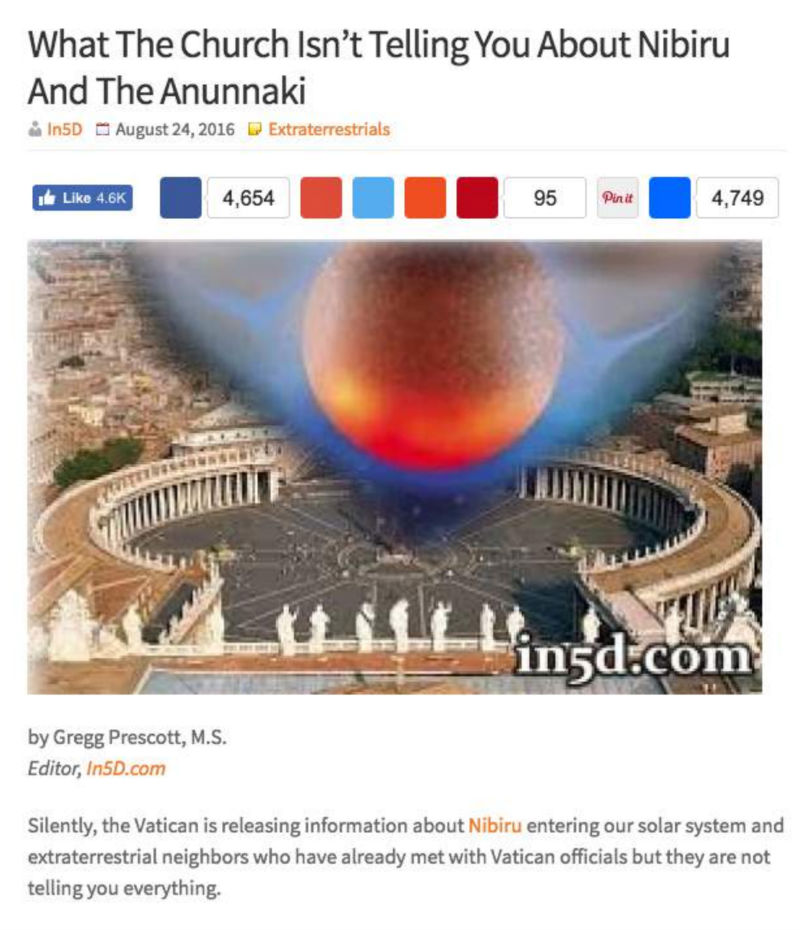

This site is also given as an example of the lowest quality type of news site because it presents an unsubstantiated fact as true news:

Google’s instructions to the quality raters tell them the following about the website mentioned above:

“The MC [main content] on this page contains factually unsupported theories related to the Vatican's knowledge of the planet Nibiru, the existence of aliens, and upcoming world events. Although various Vatican officials and scientists are quoted in the article, the quotations do not support the article's claims, and in some cases do not seem to come from the person quoted. The Nibiru cataclysm and related events have also been thoroughly debunked by authoritative sources (Reference 1, Reference 2). The demonstrably inaccurate content on this page can misinform users.”

Here is another example that Google gives for fake news:

This site is considered a low quality site because it contains information that is false. The guidelines say, “Note that no date is given, no sources are cited, and there is no author. This website is designed to look like a news source, but there is no information on the news organization that created the website or its content. The SC also features distracting pictures and outrageously titled links.”

Here is one final example. This site was considered a low quality site because it included a fictitious quotation from Barack Obama that was presented as accurate news:

While many people would probably recognize that this news is false, this example brings up several questions for me on how an algorithm can recognize fake news.

Questions that arise

In my opinion, none of these examples specifically address Russian news propaganda. If it is indeed true that Russian news outlets are producing stories that are fake, this is going to be really hard to algorithmically detect.

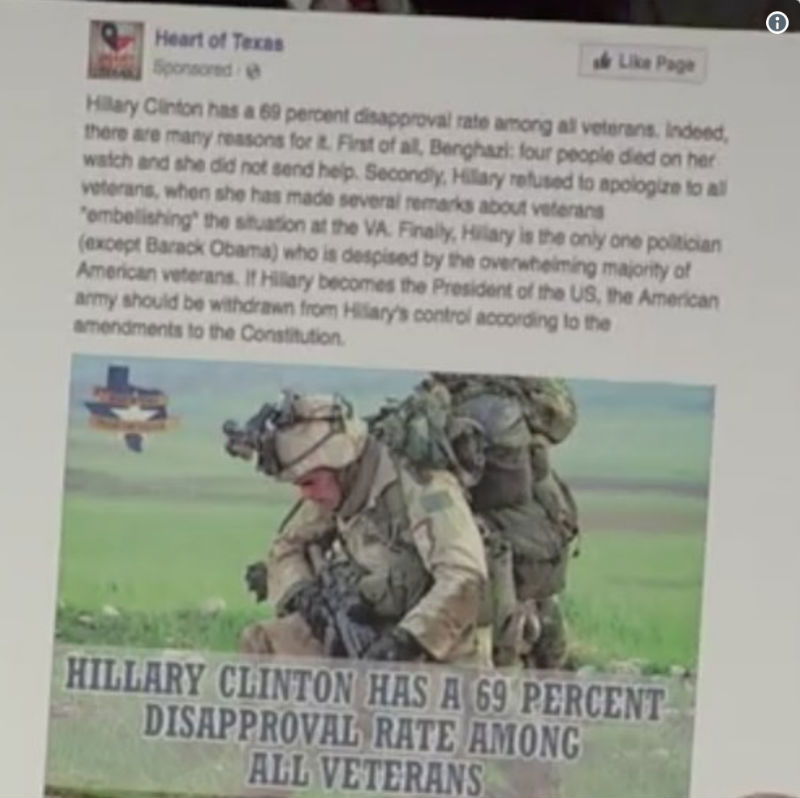

I do see how the current version of Google’s quality algorithms can help somewhat. Take, for example, this Facebook ad that was apparently purchased with Russian currency:

The story claims that 69 percent of all veterans did not support Hillary Clinton. Google’s current algorithms will likely demote this type of news article because the claims lack supporting references.

But I imagine that there are far sneakier articles, ones that are not so obviously false, that are going to be really hard to demote algorithmically.

So how will Google specifically demote RT and Sputnik News as Eric Schmidt suggests? Will they train their quality raters by giving them examples of propaganda published on these sites? If so, who determines what is propaganda and what is true? Who decides whether something is a conspiracy theory or perhaps correct? If the vast majority of people believe something, does that make it true?

How Google answers these questions will affect how the quality raters assess sites, which will in turn affect how the machine learning algorithm assesses all websites in terms of quality. If Google decides to train the quality raters by giving them specific examples from RT and Sputnik as propaganda, then I sure hope that they are correct in their assumptions. A machine only learns based on the information which it is fed. Whoever is responsible for training the quality raters to determine truth is ultimately influencing what is presented on the entire internet as truth. And that is a huge responsibility.

Added: I was approached by Sputnik News to record a live news segment on the topic of Google potentially censoring Russian propaganda. You can hear the interview here:

