You know that I love talking about vector search, and I've had good success optimizing for it. Often, though, that success does not last. I think I've realized why, and if I'm right, doing a great job of optimizing for vector search could do more harm than good.

What is vector search?

Vector search relies on embeddings: math that converts words and phrases into lists of numbers (vectors), where the numbers represent multiple aspects, or dimensions, of the word's meaning. When those vectors are placed in a multidimensional space, concepts that are similar to each other appear close to each other in that space.
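To make "close to each other" concrete, here is a minimal sketch using made-up three-dimensional vectors and cosine similarity, one common way to measure closeness in an embedding space. Real embedding models produce hundreds of dimensions learned from data; the words and numbers below are purely illustrative.

```python
import math

# Hypothetical 3-dimensional "embeddings". Real models use hundreds of
# dimensions learned from data; these values are invented for illustration.
EMBEDDINGS = {
    "puppy": [0.90, 0.80, 0.10],
    "dog": [0.85, 0.75, 0.20],
    "kitten": [0.80, 0.20, 0.15],
    "car": [0.10, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word, k=2):
    """Return the k other words whose vectors point most nearly the same way."""
    query = EMBEDDINGS[word]
    scored = [
        (other, cosine_similarity(query, vec))
        for other, vec in EMBEDDINGS.items()
        if other != word
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


print(nearest("puppy"))  # "dog" ranks first, then "kitten"; "car" is far away
```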

Here is an excellent video to help explain vector search even further.

RankBrain and RankEmbed BERT use vector embeddings

Google's AI systems, such as RankBrain and RankEmbed BERT, use vector embeddings and vector search. When someone searches, the query (and likely expansions of the query) is embedded into a vector space, and content embedded nearby in that space is treated as likely to be relevant. (For more on this, see a 2016 Search Engine Land article by Danny Sullivan, in which he reports that Google pointed to this 2013 Google blog post on converting words to vectors as a way to learn more about RankBrain.)
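To picture "content embedded nearby," here is a toy sketch that scores documents against a query and two query expansions. The vectors, the expansion queries, and the choice to keep each document's best match (the `max`) are assumptions for illustration only; nothing here describes how RankBrain or RankEmbed BERT actually combine these signals.

```python
import math


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical 4-D vectors; dimensions loosely mean
# [running shoes, flat feet, trail, history]. All values are invented.
QUERY_AND_EXPANSIONS = {
    "best running shoes": [0.9, 0.2, 0.2, 0.0],
    "running shoes for flat feet": [0.6, 0.8, 0.0, 0.0],
    "trail running shoes": [0.6, 0.0, 0.8, 0.0],
}
DOCUMENTS = {
    "flat-feet shoe guide": [0.5, 0.85, 0.05, 0.0],
    "trail shoe review": [0.55, 0.05, 0.8, 0.0],
    "marathon history": [0.1, 0.0, 0.1, 0.95],
}


def score(doc_vector):
    # Assumption: a document counts as relevant if it sits close to the query
    # OR to any of its expansions, so we keep its best match.
    return max(cosine(doc_vector, q) for q in QUERY_AND_EXPANSIONS.values())


ranking = sorted(DOCUMENTS, key=lambda name: score(DOCUMENTS[name]), reverse=True)
print(ranking)  # the two shoe pages lead; "marathon history" comes last
```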

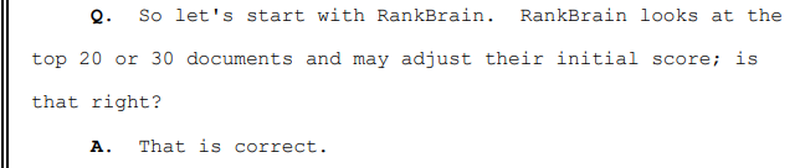

In the DOJ vs Google trial, we learned that RankBrain can be used to re-rank the top 20-30 results.
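Mechanically, "re-ranking the top 20-30 results" can be pictured with a minimal sketch like the one below, assuming the re-ranker reorders only a fixed slice at the top and leaves the rest untouched. The function name, the `relevance` scores, and the default cut-off of 20 are illustrative assumptions; the sketch does not attempt to model how RankBrain's output is combined with Google's other signals.

```python
def rerank_top_n(results, relevance, n=20):
    """Re-order only the first n results by a relevance score.

    `results` is a list of result ids in their original order, and `relevance`
    maps each id to a hypothetical embedding-based score. Results beyond the
    top n keep their original positions. Python's sort is stable (even with
    reverse=True), so ties in the head keep their original order.
    """
    head = sorted(results[:n], key=lambda r: relevance[r], reverse=True)
    return head + results[n:]


results = ["page-a", "page-b", "page-c", "page-d"]
relevance = {"page-a": 0.2, "page-b": 0.9, "page-c": 0.5, "page-d": 0.99}
print(rerank_top_n(results, relevance, n=3))  # ['page-b', 'page-c', 'page-a', 'page-d']
```

Note that "page-d" stays in fourth place despite the highest score, because it falls outside the re-ranked slice.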

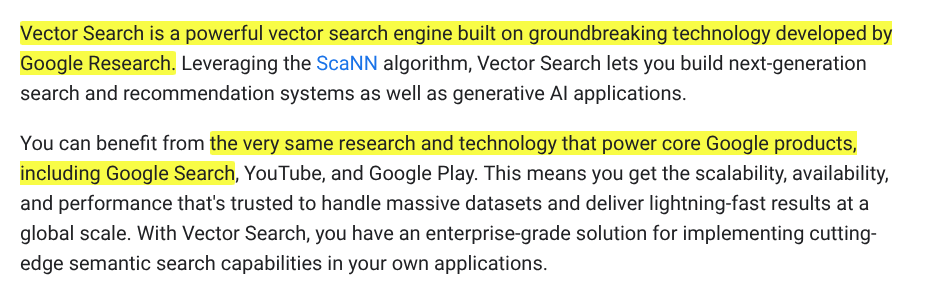

RankBrain has likely evolved tremendously since Google first told us about converting words to vectors. Still, there's no doubt that vectors and vector embeddings are important to Search. In fact, Google specifically tells us that vector search powers Google Search.

This is why we are seeing so much advice from SEOs to write in a way that looks good to vector search. Doing so will help our content look more relevant to machines. At least initially...

Google says writing for vector search won't help us rank better

A couple of years ago I had a fascinating conversation with Google's Gary Illyes about vector search. He told me it was something interesting to learn about, but knowing about vector search would not give me an advantage in Search.

I did not believe him. (Sorry Gary! I had to test things for myself before coming to the realization that you were right.)

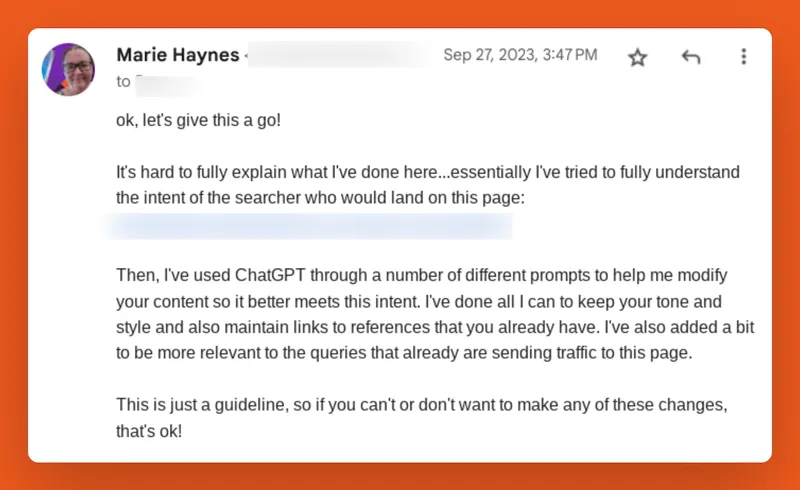

I developed a theory that I wrote about in my book. The idea was to expand a query into its related micro-intents and then write well-structured sentences that clearly and concisely answered each of those micro-intents. This technique should place the content in Google's vector space close to both the query and its expansions, so we would look more relevant in the eyes of vector search.
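Here is a toy way to picture that theory, assuming a page's position can be approximated by averaging the vectors of its sections (real systems may embed passages separately, or use models that do not average at all). The intent and section vectors are invented. A page that answers both micro-intents ends up closer, on average, to the query and its expansions than a page that answers only one.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean_vector(vectors):
    """Assumption: approximate a page's position by averaging its section vectors."""
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def coverage(page_sections, intents):
    """Average similarity between the page and the query plus each micro-intent."""
    page = mean_vector(page_sections)
    return sum(cosine(page, intent) for intent in intents) / len(intents)


# Hypothetical 3-D vectors: [running shoes, flat feet, trail].
intents = [
    [1.0, 0.3, 0.3],  # the query itself
    [0.6, 0.8, 0.0],  # micro-intent: flat feet
    [0.6, 0.0, 0.8],  # micro-intent: trail running
]
flat_feet_only = [[0.5, 0.85, 0.05]]
both_intents = [[0.5, 0.85, 0.05], [0.55, 0.05, 0.8]]

print(round(coverage(flat_feet_only, intents), 3))
print(round(coverage(both_intents, intents), 3))  # higher: covers both micro-intents
```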

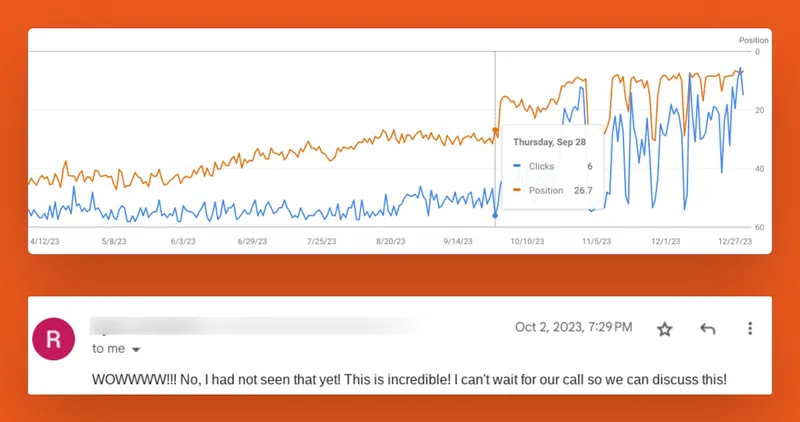

I had good success doing this.

In some cases, we retained these rankings. In quite a few cases, though, we won them for a short while and then lost all of our gains.

How Google determines relevancy is evolving

I now understand why Gary encouraged me not to focus too much on vector search. I do think we can learn a lot by understanding Google's various methods for predicting which content a user will find relevant. But I think that trying too hard to look good to Google's vector search systems can potentially do more harm than good.

Before I explain why, it's worth mentioning that Google has systems far more sophisticated than my simple explanation of vector search. For example, in late 2024 they wrote a paper describing a technique in which they gave an LLM a piece of content and asked it to create one query that was relevant to the content and another that seemed relevant but actually was not. They then used those pairs to train a system to better understand the nuances of relevancy. I've written much more here with my thoughts on how Google determines relevancy and helpfulness.
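The paper's exact training objective is not described here. One common way to train on (relevant query, plausible-but-irrelevant query) pairs is a margin-based triplet loss, and the sketch below assumes that objective with toy 2-D vectors. Training would adjust the embeddings to push this loss toward zero; no training loop is shown.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def triplet_margin_loss(doc, relevant_query, tricky_query, margin=0.2):
    """Hinge loss that is zero only when the relevant query is at least
    `margin` more similar to the document than the tricky, irrelevant one."""
    return max(0.0, margin - cosine(doc, relevant_query) + cosine(doc, tricky_query))


doc = [1.0, 0.0]
# An easy pair: the irrelevant query points somewhere else entirely.
easy = triplet_margin_loss(doc, relevant_query=[1.0, 0.0], tricky_query=[0.0, 1.0])
# A hard pair: the irrelevant query looks MORE similar than the relevant one.
hard = triplet_margin_loss(doc, relevant_query=[1.0, 1.0], tricky_query=[1.0, 0.9])
print(easy, round(hard, 3))  # 0.0 for the easy pair, about 0.236 for the hard pair
```

The hard pair is the interesting one: it produces a positive loss, which is exactly the signal a system would use to learn the nuance between "seems relevant" and "is relevant."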

But let's get back to why focusing mostly on optimizing for vector search may be the wrong path to walk down.

When we write for vector search, we look good to machines. But what if users don't agree that we are the most relevant and helpful choice?

Google predicts what searchers will click on and then refines those predictions

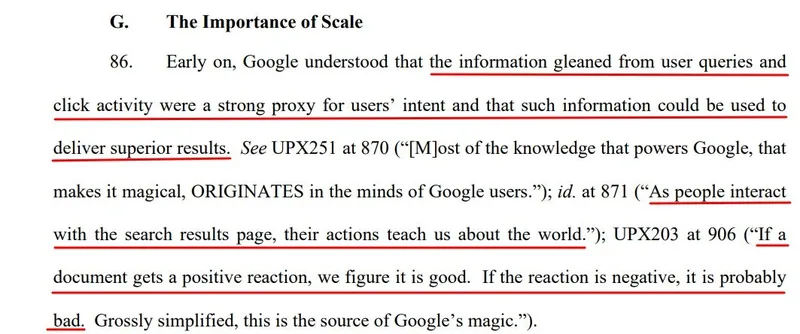

The DOJ vs Google trial shared a wealth of information on how Google learns from what people choose to engage with.

(documentation from the DOJ vs Google trial)

These capabilities improved further with the development of the helpful content system and, eventually, with the launch of the March 2024 core update, when Google learned to use a multitude of signals to determine which content truly satisfied searchers' needs.

There are many articles written on Google's click probability models, which try to predict what people will click on and be satisfied with. Here is a Google paper on incorporating clicks, attention and satisfaction into a model that learns from the actions of searchers.

Google's systems work to present users with results the systems predict they will find helpful. Then they learn from what people actually did find helpful, and machine learning systems use this information to improve their predictions.
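One simple way to model "predict, then learn from what people actually found helpful" is a Beta-Bernoulli update: the up-front prediction becomes a prior worth some number of pseudo-visits, and each observed visit nudges the estimate. This is a hypothetical illustration; `SatisfactionEstimate`, the default `strength` of 10 pseudo-visits, and the idea of a single yes/no "satisfied" signal are assumptions, not Google's documented method.

```python
from dataclasses import dataclass


@dataclass
class SatisfactionEstimate:
    """Hypothetical Beta-distribution estimate of how often searchers are satisfied."""

    alpha: float  # pseudo-count of satisfied visits
    beta: float   # pseudo-count of unsatisfied visits

    @classmethod
    def from_prediction(cls, predicted, strength=10.0):
        # Turn a model's up-front prediction into a prior worth `strength` visits.
        return cls(alpha=predicted * strength, beta=(1.0 - predicted) * strength)

    def observe(self, satisfied):
        # Each real visit adds one count to the matching side.
        if satisfied:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self):
        return self.alpha / (self.alpha + self.beta)


estimate = SatisfactionEstimate.from_prediction(0.9)  # predicted: very helpful
for _ in range(20):
    estimate.observe(satisfied=False)
for _ in range(5):
    estimate.observe(satisfied=True)
print(round(estimate.mean, 2))  # 0.4
```

The design choice worth noticing is `strength`: the smaller it is, the faster real user behavior overrides the original prediction.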

Can you see where I'm headed with this?

I think it's possible that if you consistently look great to vector search systems and Google keeps choosing you as the most likely relevant result, but user actions don't confirm that you actually were the best result, the systems will adjust and learn that your content isn't as good as they originally predicted.
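A similar hypothetical smoothing shows how that scenario could play out for two pages. The numbers are made up: one page is predicted to be highly relevant but rarely satisfies searchers, while the other starts with a lower prediction but satisfies most visitors. Once enough feedback accumulates, the order flips. `posterior_satisfaction` and its `strength` parameter are illustrative assumptions.

```python
def posterior_satisfaction(predicted, satisfied, visits, strength=10.0):
    """Blend an up-front prediction (worth `strength` pseudo-visits) with
    observed outcomes. Hypothetical smoothing, not a documented Google formula."""
    return (predicted * strength + satisfied) / (strength + visits)


pages = {
    # name: (predicted relevance, satisfied visits, total visits), all invented
    "vector-optimized page": (0.90, 30, 100),
    "experience-driven page": (0.70, 75, 100),
}

before = sorted(pages, key=lambda p: pages[p][0], reverse=True)
after = sorted(pages, key=lambda p: posterior_satisfaction(*pages[p]), reverse=True)
print("ranked by prediction:", before)
print("ranked after feedback:", after)
```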

We could be doing more harm than good.

Should we ignore vector search?

I still think it's a good idea to understand the questions your audience has on a topic and do a good job of answering them in a way that is easy for machines to digest and present concisely.

However, I think that insight should come mostly from truly knowing your audience rather than from tools. This is why experience is so important. You can draw some inspiration from tools that mine the People Also Ask results, and even more inspiration from asking an LLM what questions a user likely has on a topic. Ultimately, though, your original, helpful value comes from your unique insight and your knowledge of what your audience really will find helpful. When we write this way, we look good to searchers who are seeking original and truly insightful information, and at the same time we are optimizing for vector search. If we rely solely on the tools, then only the machines love our content.

Here are a few of Google's bullet points on creating helpful content that I think are relevant to this discussion. These are not specifically things that Google's search systems try to measure, but rather, the types of things that users tend to like:

- Does the content provide original information, reporting, research, or analysis?

- Does the content provide insightful analysis or interesting information that is beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources, and instead provide substantial additional value and originality?

- Does the content provide substantial value when compared to other pages in search results?

- Is this content written or reviewed by an expert or enthusiast who demonstrably knows the topic well?

- Do you have an existing or intended audience for your business or site that would find the content useful if they came directly to you?

- Does your content clearly demonstrate first-hand expertise and a depth of knowledge (for example, expertise that comes from having actually used a product or service, or visiting a place)?

- Will someone reading your content leave feeling like they've had a satisfying experience?

- Is the content primarily made to attract visits from search engines?

- Are you mainly summarizing what others have to say without adding much value?

Google's recent advice on how to do well in their AI search systems tells us to focus on unique, valuable content for people. I think it's ok to think of vector search a little as we write that content. If we understand our audience's needs and write to answer those needs in a concise way, we'll be crafting content that's easier for machines to digest and for AI Mode and AIOs to quote. This may give us some initial advantage in ranking. But ultimately it is people who decide which pages are the most deserving of ranking well.

I think it's interesting to experiment with cosine similarity, which can be used to gauge whether content is relevant to a query through the lens of vector search. But if we focus too much on optimizing for machines rather than humans, we can teach Google that our content really is not as good as its vector search systems predict. In doing so, we may be teaching Google's systems to stop preferring our content.
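Cosine similarity divides the dot product of two vectors by the product of their lengths, so it measures whether they point in the same direction. The toy sketch below shows why chasing that score can drift into keyword stuffing. It assumes a crude embedding that simply averages word vectors (models like BERT build contextual representations instead, so real behavior may differ), and the word vectors are invented. Repeating the query terms raises the similarity, even though the honest sentence is the more useful one for a reader.

```python
import math

# Hypothetical 2-D word vectors: dimension 0 ~ "running shoes",
# dimension 1 ~ "personal experience". Values are invented.
WORD_VECTORS = {
    "running": [1.0, 0.0],
    "shoes": [0.9, 0.1],
    "my": [0.0, 1.0],
    "blisters": [0.2, 0.9],
    "after": [0.1, 0.5],
    "miles": [0.5, 0.5],
}


def embed(text):
    """Average the vectors of known words (a crude stand-in for a real model)."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        raise ValueError("no known words in text")
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


query = embed("running shoes")
honest = embed("my blisters after miles in running shoes")
stuffed = embed("running shoes running shoes running shoes my blisters")
print(round(cosine(query, honest), 3), round(cosine(query, stuffed), 3))
```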

Marie

p.s. A day after I initially published the preview of this article in the Search Bar, Google's John Mueller wrote, "Optimizing sites for embeddings is *literally* keywords stuffing."

(This was originally written for my paid members of the Search Bar Pro Community. If you are serious about learning about AI as it changes not only Search, but our world, we'd love to have you join.)
