Google’s MadeByGoogle event confirmed what I’ve been saying for a while now: Bard is going to become our personal assistant. Not only that, all of Pixel’s hardware – the Pixel Phone, Watch, Earbuds and more – will have Google’s latest AI hardware on board.

We are in for some wild changes in how we get our information and interact with AI.

Google Assistant was introduced seven years ago. (Google has previously told us they have been AI-first for seven years.)

Google said, “While Assistant is great at handling quick tasks like setting timers, giving weather updates and making quick calls, there is so much more that we’ve always envisioned a deeply capable personal assistant should be able to do. But the technology to deliver it didn’t exist until now.”

Google is aiming to make Bard our personal assistant, running on devices with upgraded GPUs and their own AI engine. Machine learning models enhance every aspect of a Pixel user’s experience.

In this article, we’ll discuss the following:

- Google’s broader focus on AI as it integrates AI hardware and chips into new Pixel phones, watches and earbuds.

- Google Assistant to be powered by Bard. (I speculate at the end about how this could radically change Search.)

- Google’s integration of AI into Fitbit for health monitoring and coaching, with new sensors for body temperature and electrodermal activity (EDA).

- Wild improvements in photos, video and more.

How is Google prioritizing AI in its new hardware?

Google is making a strong push to integrate AI into its latest hardware. The new Pixel 8 phones come with custom Google silicon designed to pair advanced software with personalized AI capabilities.

What is personal AI?

Personal AI is likely what the New York Times foreshadowed when it reported that Google was working internally on a whole new search engine, one that would offer a far “more personalized experience than the company’s current service, attempting to anticipate users’ needs.”

The most exciting part of this event for me was when they talked about Bard. They left that to the end, but I’m going to start by talking about Bard coming to Google Assistant.

Bard is becoming our personal assistant

Bard burst onto the scene months ago with a showing that disappointed me. I had hoped the chatbot would be significantly better than OpenAI’s ChatGPT. Yet, at the start, Bard was more prone to hallucination (generating plausible-sounding words and phrases that aren’t actually correct) and far less helpful.

This is changing. Over the last few weeks especially, I have found Bard to be extremely helpful. It is getting more accurate and much less prone to making things up. Its new extensions allow it to call on and use Google Maps, YouTube, and even connect with your Gmail. I’m finding myself turning to Bard because it’s often more helpful than doing a traditional Google Search.

Bard is becoming my personal assistant. I expect that Google’s new hardware advancements will make this true for many of us.

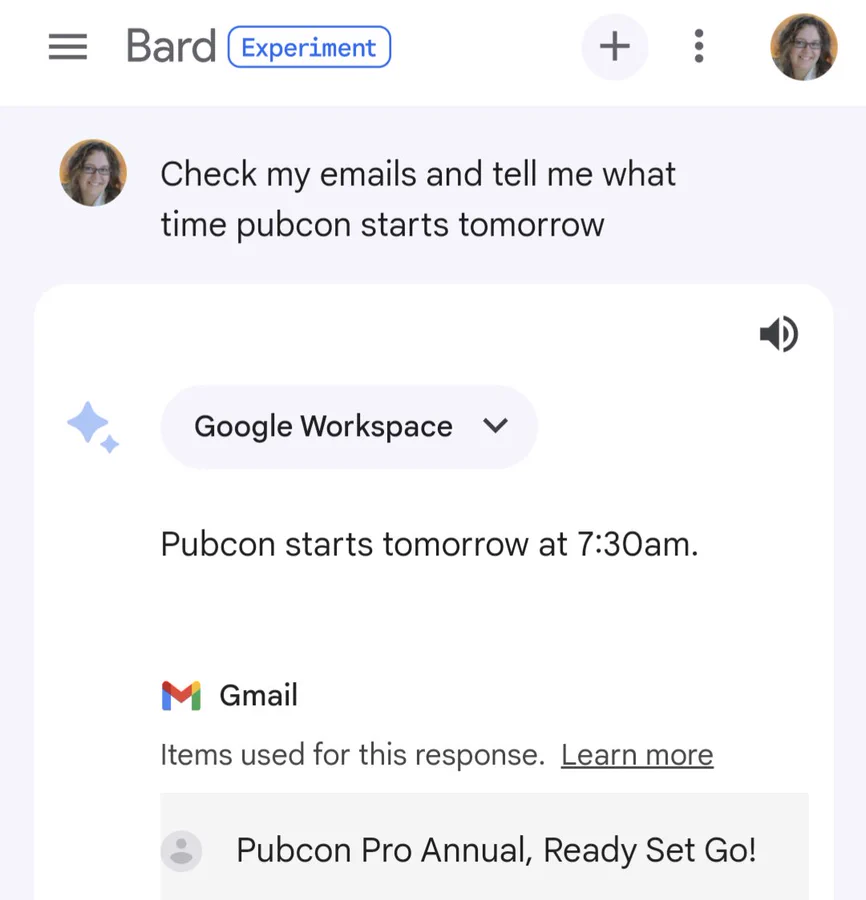

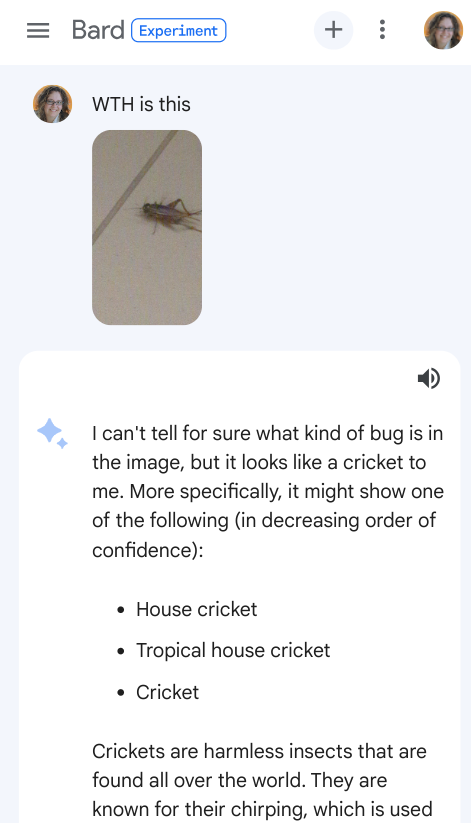

I was at a conference when Bard extensions were announced. I was able to ask Bard to check my Gmail for the time the conference started. I also used Google Lens within Bard to find out more about a big bug on the bathroom floor. When I wanted more information, it sent me to a website with recent news.

In my next podcast episode I’m going to share why Google’s Helpful Content System is so important for website owners to understand, especially if you want a chance at being a website that gets suggested by Bard. I was in the middle of working on this episode, which talks about how I think Bard will become our personal assistant, when today’s announcements came out!

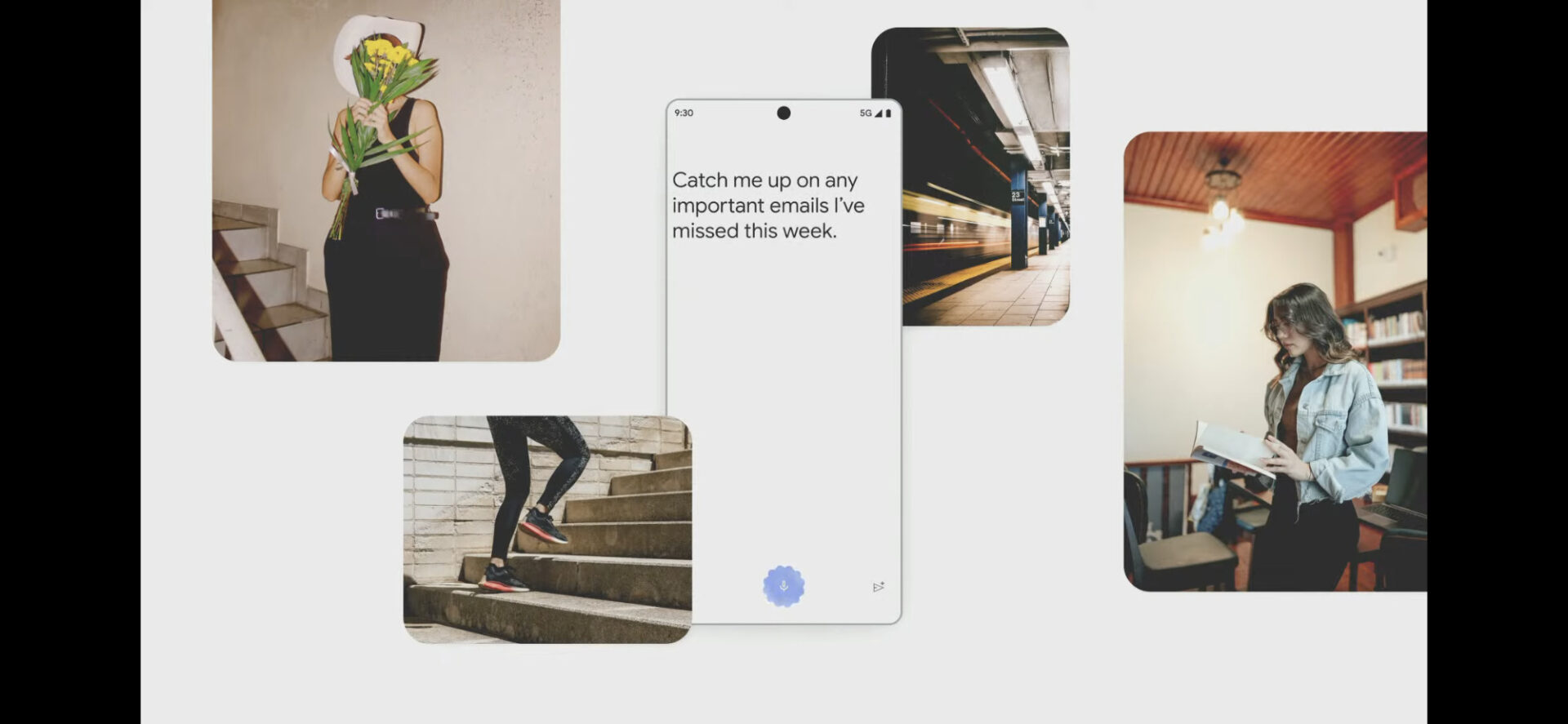

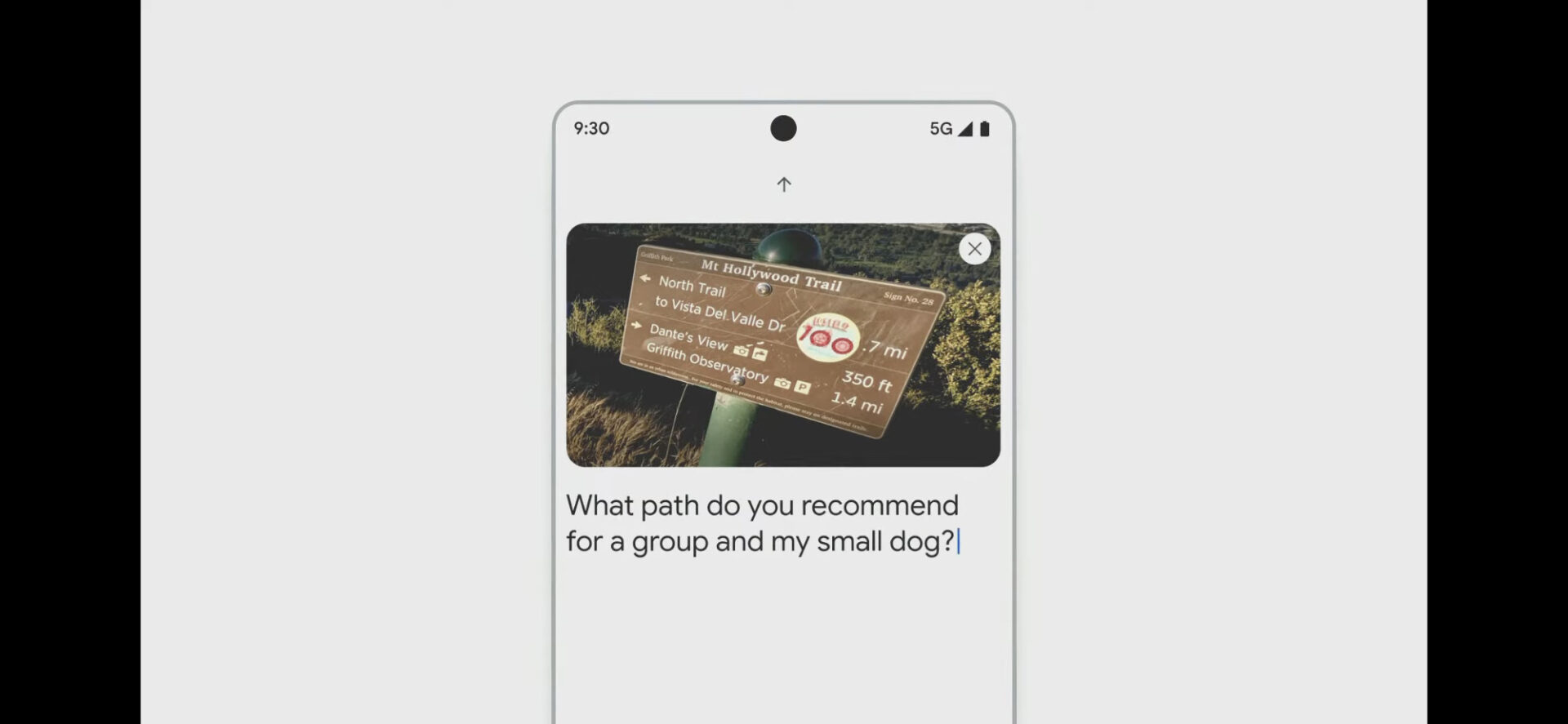

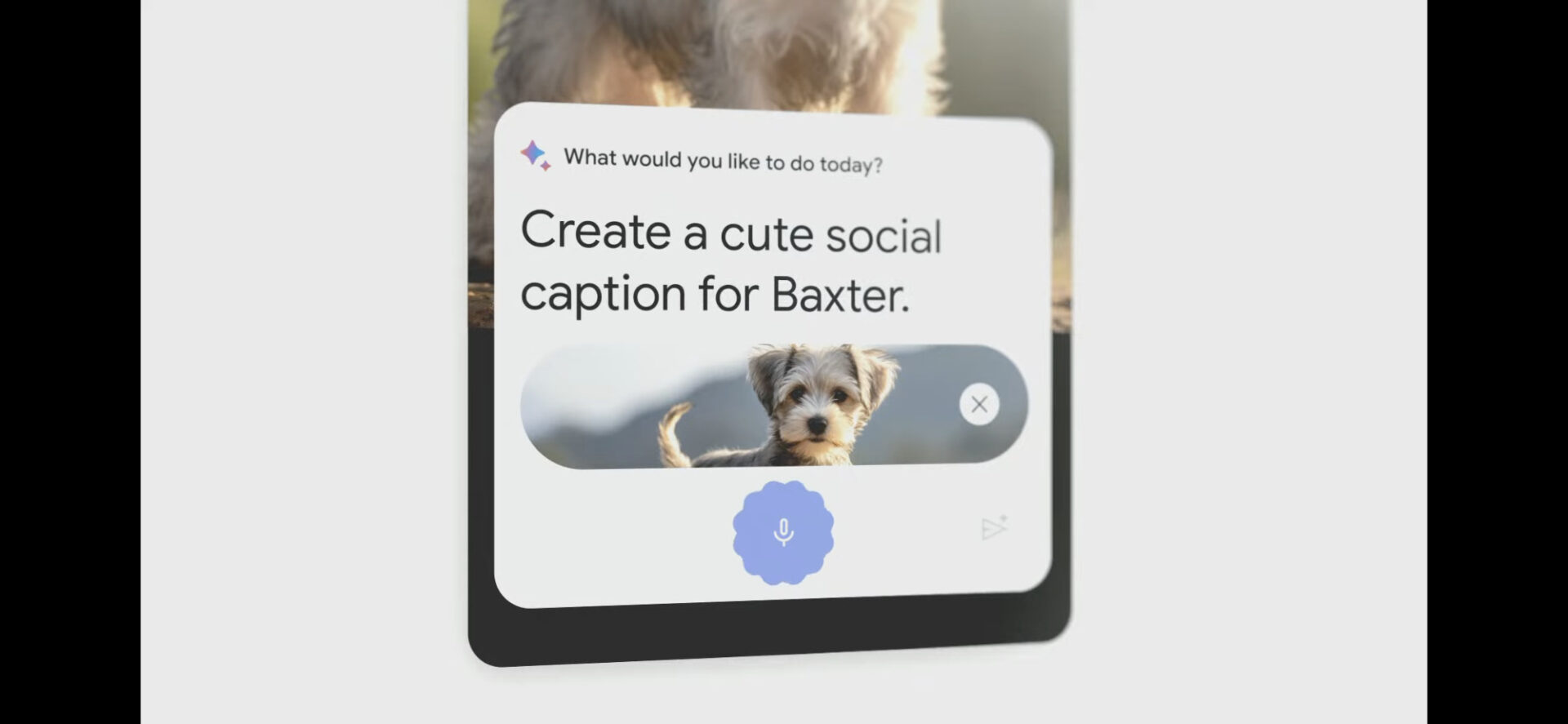

At Google’s MadeByGoogle event, they told us Bard can hear, speak and see and, in many cases, take action for you.

Here are some screenshots from the video showing Assistant with Bard in action.

Converse with Bard about your emails:

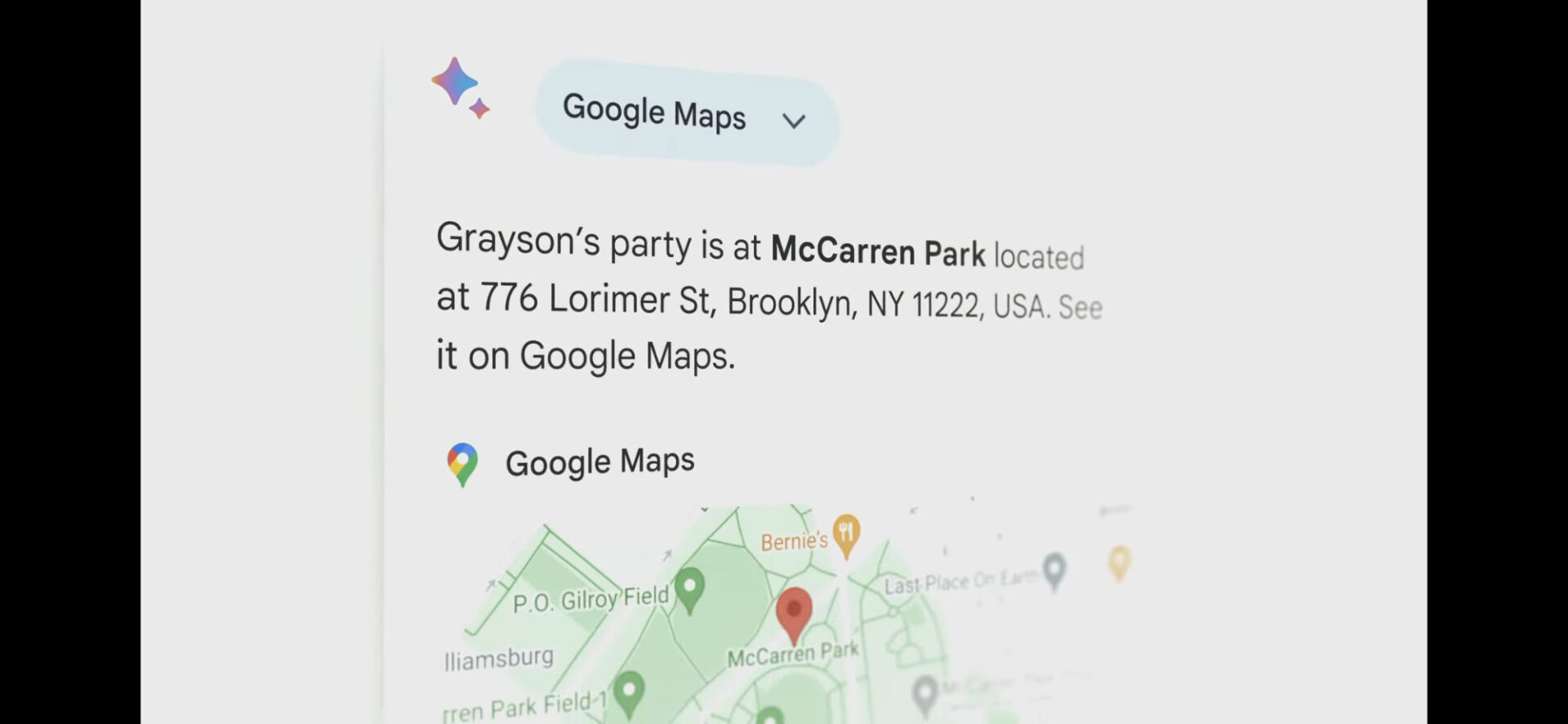

Call on Google Maps when helpful.

Or Messages.

Use your camera to ask Bard questions about things you can see around you.

Ask questions about what is on your screen.

Google says, “This conversation overlay is a completely new way to interact with your phone.”

Assistant with Bard is rolling out to select testers shortly and will expand as an opt-in experience over the next few months. I am not sure, but it sounds to me like this is rolling out only to Pixel 8 users. It sounds like the AI hardware (Tensor Processing Units) in the new phones is what’s needed to make this all happen.

Google says, “It’s a paradigm shift for computing and leads to more textual, more personalized and more powerful help.”

The new hardware in Pixel 8, earbuds and watch is a game changer for AI

This event really was a commercial to sell the Pixel 8 phone and other hardware. And it was effective. I’m about to hit purchase…but it’s not cheap!

The new Pixel Watch has additional sensors to measure skin temperature and electrodermal activity (EDA). I’ll talk in a minute about how this connects with Fitbit plus AI to do all sorts of things to help us get healthier.

Pixel Buds Pro use AI for real-time noise cancellation. If you’re playing music and you start talking, the music stops.

The Pixel 8 and 8 Pro phones have the new Google Tensor G3 chip, designed specifically for on-device AI processing. Thanks to the more powerful Tensor G3, the Pro is the first phone able to run complex generative AI models directly on the device.

There’s also a seriously upgraded camera system and a new temperature sensor in the Pro phone. Google says it has submitted the sensor to the FDA for approval to take your body temperature, and you can apparently also use it to measure things like the temperature of the water while you boil baby bottles.

The camera upgrades are unreal.

Night Sight video enhancement uses both the Pixel’s camera and Google Cloud servers to process low-light footage in real time. Check out these examples of night photos where AI has enhanced them.

There are also a bunch of professional level photography features powered by AI.

On top of those:

“Best Take” looks really cool. It captures a series of photos, lets you select the best expression for each person in them, and combines them into the ideal group photo for you.

“Guided Frame” helps visually impaired users compose shots by recognizing faces and subjects.

“Magic Eraser” now works on larger objects and can intelligently fill the background after removing distractions.

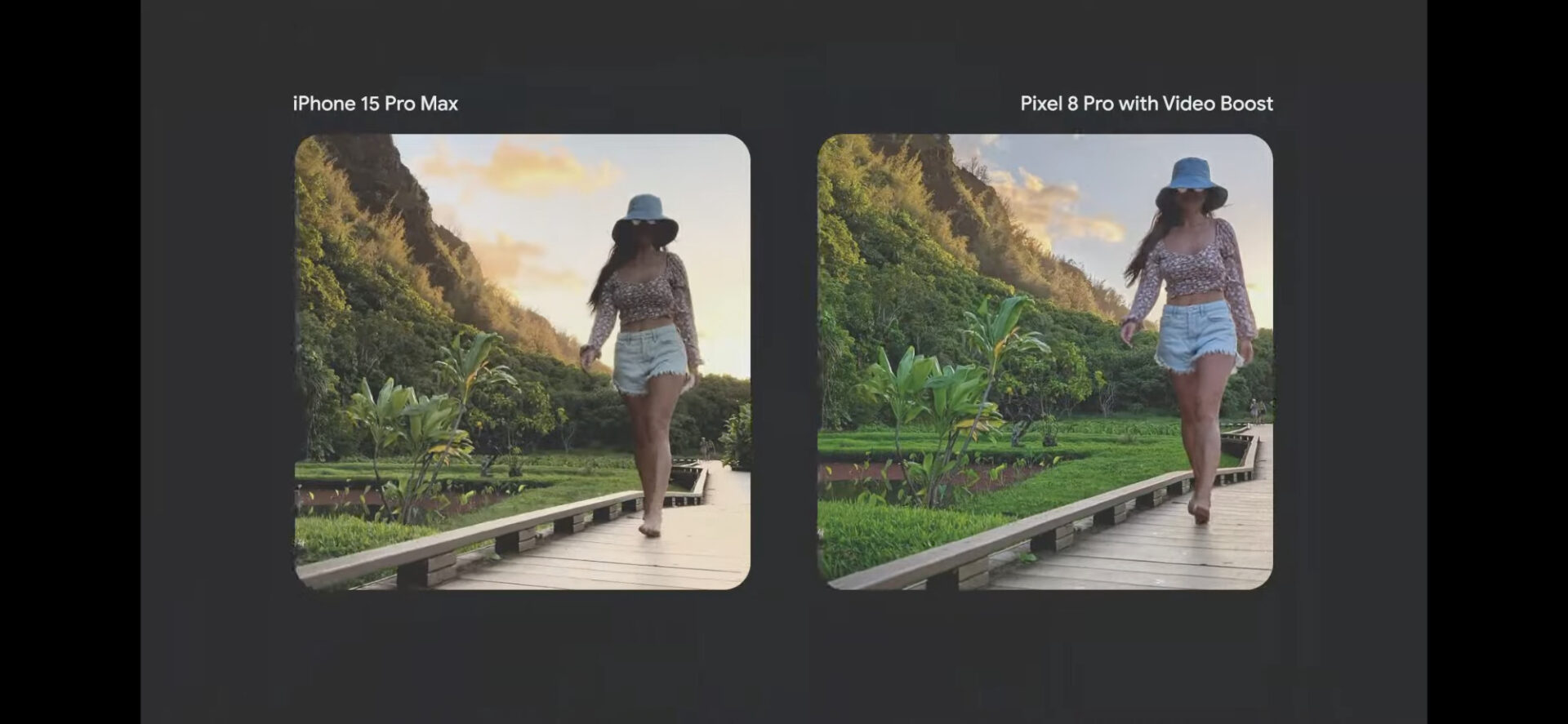

For video, “Video Boost” sends every single frame of your video to Google Cloud, which uses HDR processing to deliver vivid, true-to-life colours and details. Google described it as pairing the Pixel hardware with its AI data centers to enhance each video in real time.

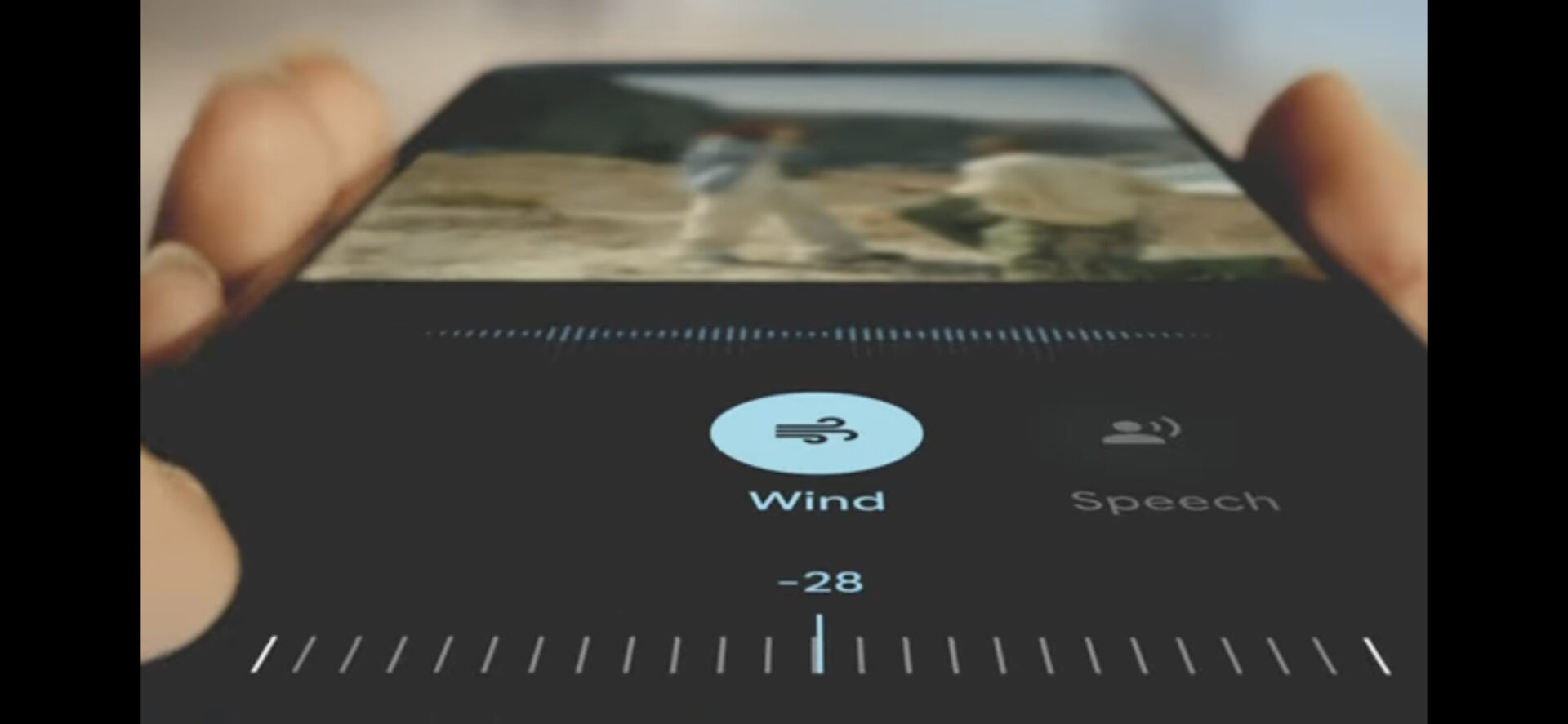

“Audio Magic Eraser” uses Tensor G3 and machine learning to sort different sounds into distinct layers you can adjust. They showed an example of a video with a baby babbling and a dog barking in the background. Audio Magic Eraser completely removed the dog barking.

Fitbit

I am so incredibly excited about the things they announced for Fitbit. If you’re comfortable sharing your data with Google’s AI, Google aims to help you manage stress and guide you toward better health.

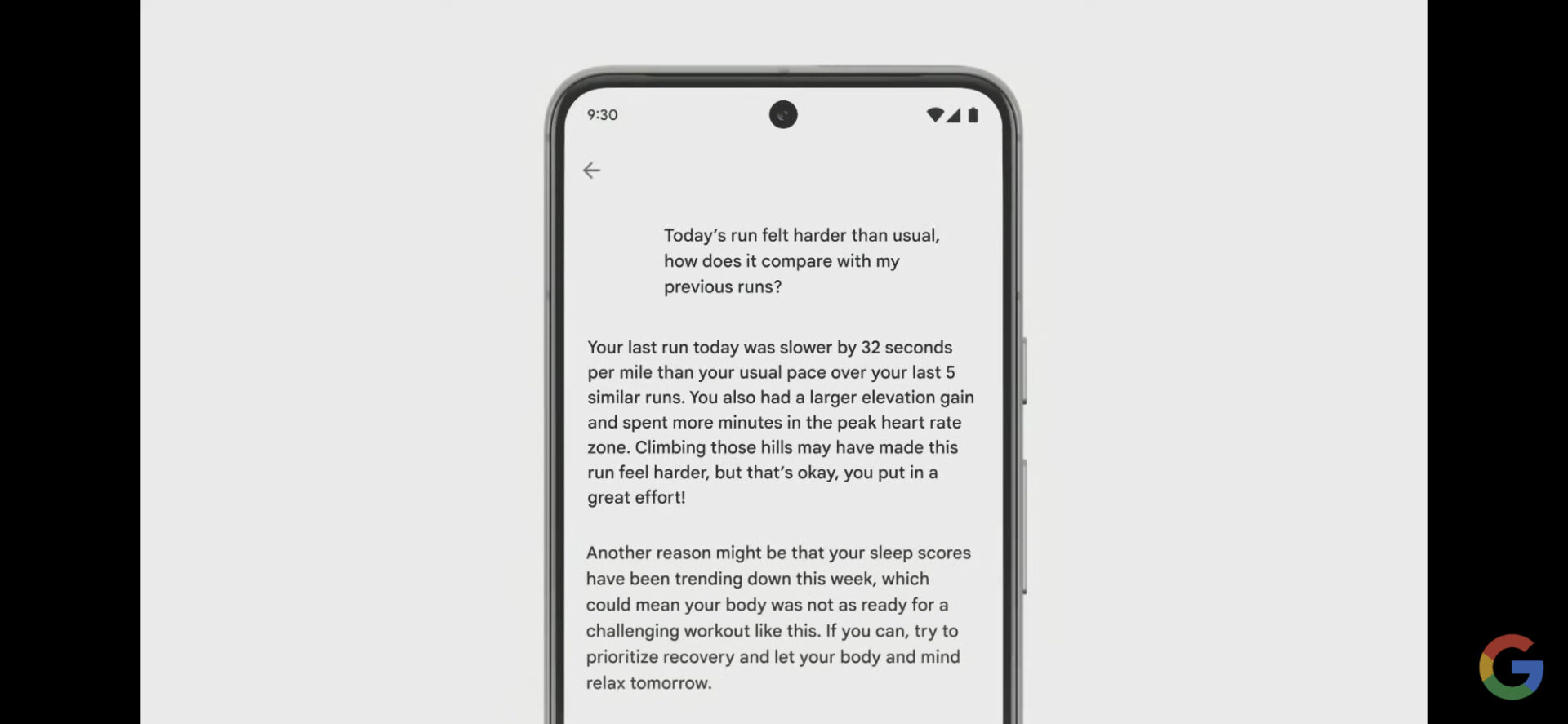

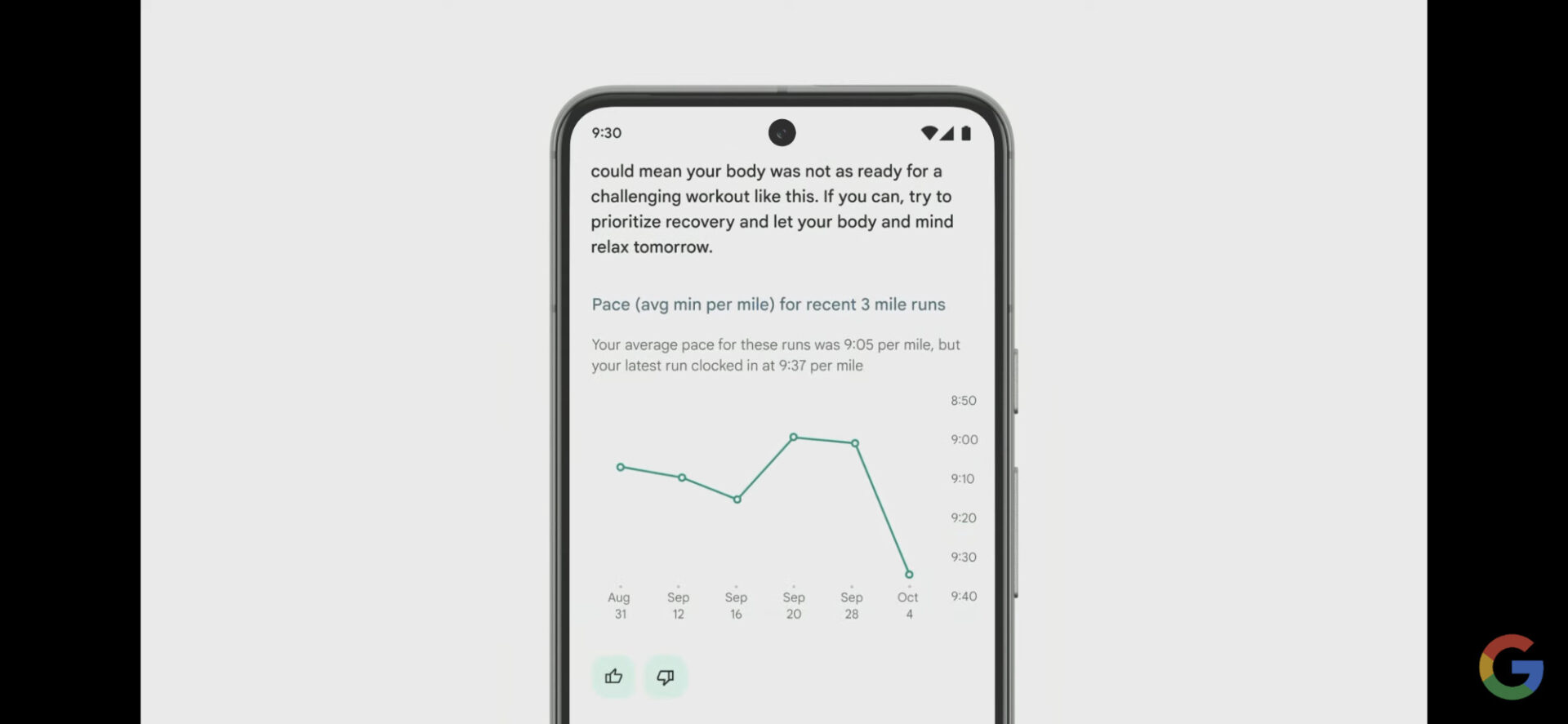

Fitbit was highlighted as an important part of Google’s focus on helpful AI experiences. The Pixel Watch includes advanced Fitbit sensors to measure EDA (used for stress detection), monitor heart health and track other biometrics. Google announced they will roll out generative AI capabilities in Fitbit Labs next year, giving Pixel users early access.

They previewed using AI to surface patterns and provide tailored coaching recommendations.

I have been building something like this myself, combining HRV (heart rate variability) readings from my Pixel Watch with Bard and ChatGPT to converse about what is causing my stress and what to do to remedy it. I knew this was where Fitbit was headed!

When stress is detected, you can converse about what you’re feeling.

Then, the Fitbit app can make suggestions to help.

Google says that, looking ahead, they see many ways to use AI in Fitbit to act as a personal health coach and to surface connections and correlations in your Fitbit data.

What about Search?

They did not discuss specifically how Search will change with the new Pixel phones. Here are some things they mentioned.

The AI can read websites to you, even if they are in a language you do not speak, or summarize webpages.

Google’s phone keyboard, Gboard, will have Google’s Large Language Models (LLMs) built in, which will improve smart replies.

It makes sense to me that with an LLM on board, the Pixel phone is all set to be our personal assistant via Bard.

In my next podcast episode I will share more of my thoughts on this. Search is not going away. Google’s CEO Sundar Pichai told us that SGE (Search Generative Experience) is the future of Search. He said, “Over time this will just be how search works, and so, while we are taking deliberate steps, we are building the next major evolution of search.”

Whatever happens, Search on Google phones will be changing. You’ll get an AI generated answer either from SGE or from Bard, and when it makes sense, you’ll also be shown websites.

If your website mostly tells people information that is already known online, it is likely going to get harder to be found. But if your website offers information gain (new, interesting, original information that people will find helpful and that Bard and SGE do not already know), that is what Google wants to show people. Again, my next podcast episode will cover my thoughts on this more.

Summary

This event got me excited! I can see though how some might not be happy about AI being more and more a part of our lives. It’s a scary prospect. We’re becoming cyborgs. Our phones are already an integral part of most of our lives and now we’re getting more and more dependent and integrated as AI powers phones, watches and earbuds.

I expect some will revolt against having AI so pervasive in our lives. The way I see it, there’s really no way to stop it. Those who understand how to use AI and push the limits of how it can help us will have a huge advantage over those who ignore it or criticize it.

If you’ve been unimpressed with Bard, I’d encourage you to give it another go. It’s not perfect, but it is getting better and better.

Here is a summary of this event and my thoughts:

- Personalized AI Assistance: Google Assistant is integrating Bard to offer a more conversational and personalized user experience. This could change the way we perform tasks and access information.

- Advanced Hardware: The new Tensor G3 chip in Pixel 8 and 8 Pro phones enables powerful on-device AI processing, taking mobile computing to a new level.

- Health Monitoring: With AI-integrated Fitbit sensors, Pixel Watch aims to become your digital health coach, offering insights into stress detection, heart health, and more.

- Photography and Video: AI-powered features like Magic Eraser, Best Take and Video Boost in the Pixel 8 phones could revolutionize how we capture and edit photos and videos.

- The Future of Search: Although not explicitly stated, the integration of Large Language Models (LLMs) and Bard into Google’s ecosystem suggests a radical shift in how search may function in the future.

I’d recommend watching the whole video, but if you want to skip to the bit about Bard becoming a part of Google Assistant, start at 1:02:20.

If you found this interesting, you’ll love my newsletter.

If you want to learn how to create content that Google’s AI systems are likely to find helpful, you’ll get value out of my Creating Helpful Content Workbook.

