Let me see if I can convince you!

I’ve shared a lot in this video and summarized my thoughts in the article below. This is also the second blog post I've written on this topic in the last week; my previous post has much more information on user data and how Google uses it.

Ranking has 3 components

We learned in the DOJ vs Google trial that Google’s ranking process involves 3 main components:

- Traditional systems are used for initial ranking

- AI Systems (such as RankBrain, DeepRank and RankEmbed BERT) re-rank the top 20-30 documents

- These AI systems are fine-tuned using Quality Rater scores and, more importantly in my opinion, results from live user tests.
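To make the first two stages concrete, here is a toy retrieve-then-re-rank sketch in Python. It is an illustration under loud assumptions, not Google's implementation: `traditional_score` is a hypothetical stand-in (simple query-term overlap) for the traditional systems, `rerank_fn` is a placeholder for an AI re-ranker, and the only detail taken from the trial is that the expensive re-ranking is applied to a small head of results (the `top_k=25` default simply sits in the 20-30 range mentioned above).

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Doc:
    url: str
    text: str


def traditional_score(query: str, doc: Doc) -> float:
    """Hypothetical first-stage score: fraction of query terms that appear in the doc."""
    terms = query.lower().split()
    words = set(doc.text.lower().split())
    return sum(t in words for t in terms) / max(len(terms), 1)


def rank(
    query: str,
    docs: List[Doc],
    rerank_fn: Callable[[str, Doc], float],  # hypothetical stand-in for an AI re-ranker
    top_k: int = 25,
) -> List[Doc]:
    """Stage 1: score every doc cheaply. Stage 2: re-rank only the top_k with a costlier model."""
    first_pass = sorted(docs, key=lambda d: traditional_score(query, d), reverse=True)
    head, tail = first_pass[:top_k], first_pass[top_k:]
    reranked_head = sorted(head, key=lambda d: rerank_fn(query, d), reverse=True)
    # Everything below the cutoff keeps its first-pass order.
    return reranked_head + tail
```

For example, with a deliberately silly re-ranker that prefers longer text, `rank("red shoes", docs, lambda q, d: len(d.text), top_k=2)` reorders only the first two results and leaves the rest in first-pass order. The design point is cost: the cheap scorer sees every document, while the costly one only sees the head.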

The DOJ vs Google lawsuit discussed at length how Google’s massive advantage stems from the large amount of user data it uses. In its appeal, Google said it does not want to comply with the judge’s mandate to hand over user data to competitors. It listed two ways it uses user data: in a system called Glue (which incorporates Navboost) that looks at what users click on and engage with, and in the RankEmbed model.

RankEmbed is fascinating. It embeds the user’s query into a vector space, where content likely to be relevant to that query sits nearby. RankEmbed is fine-tuned by two things:

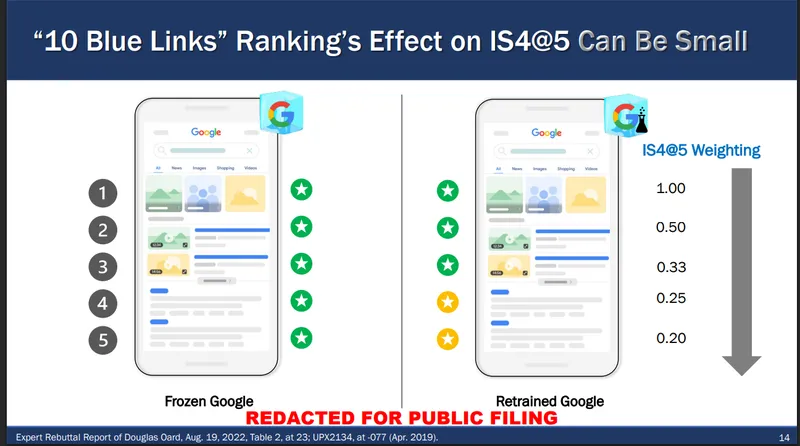

1) Ratings from Quality Raters. Raters are shown two sets of results: “Frozen” Google results and “Retrained” results, that is, results from the newly trained and refined AI-driven search algorithms. Their scores help Google’s systems determine whether the retrained algorithms are producing higher-quality search results.

(From Douglas Oard's testimony regarding Frozen and Retrained Google.)

2) Real-world live experiments in which a small percentage of real searchers are shown results from either the old or the retrained algorithms. Their clicks and actions help fine-tune the system.
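Here is a minimal sketch of the kind of live experiment described in item 2, assuming a plain A/B split. The bucketing scheme, the 1% `treatment_share`, and the use of "clicked a result" as the only signal are all assumptions for illustration; the testimony does not say which metrics Google uses, and real experiments track far richer behaviour than a single click rate.

```python
import random
from collections import Counter


def assign_arm(user_id: str, treatment_share: float = 0.01, seed: int = 42) -> str:
    """Deterministically bucket a user: a small (assumed) share sees the retrained
    ranker, everyone else sees the frozen one. Seeding by user id keeps a user
    in the same arm on every search."""
    rng = random.Random(f"{seed}:{user_id}")
    return "retrained" if rng.random() < treatment_share else "frozen"


def click_through_rate(sessions):
    """sessions: iterable of (arm, clicked) pairs.
    Returns {arm: fraction of that arm's sessions that had a click}.
    A single click rate is a deliberately simplified, hypothetical metric."""
    totals, clicks = Counter(), Counter()
    for arm, clicked in sessions:
        totals[arm] += 1
        clicks[arm] += int(clicked)
    return {arm: clicks[arm] / totals[arm] for arm in totals}
```

For example, `click_through_rate([("frozen", True), ("frozen", False), ("retrained", True), ("retrained", True)])` returns `{"frozen": 0.5, "retrained": 1.0}`. In a real comparison you would also need enough sessions in each arm for the difference to be statistically meaningful.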

The ultimate goal of these systems is to continually improve on producing rankings that satisfy the searcher.

More thinking on live tests: users tell Google which types of pages are helpful, not which specific pages

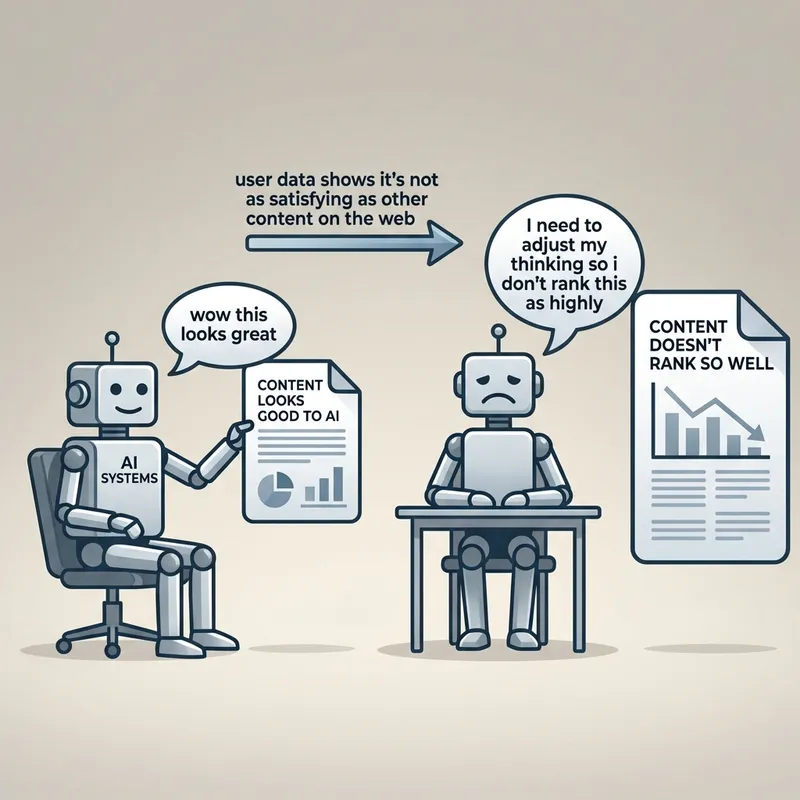

I’ve realized that Google’s live user tests aren't just about gathering data on specific pages; they are about training the system to recognize patterns. Google isn't necessarily tracking every single user interaction to rank one specific URL. Instead, it uses that data to teach its AI what 'helpful' looks like. The system learns to identify the types of content that satisfy user intent, then predicts whether your site fits that successful mold.

Google will continue to evolve how it predicts which content is likely to be helpful, and that process already extends far beyond simple vector search. Google is continually finding new ways to understand user intent and how to meet it.

What this means for SEO

If you’re ranking in the top few pages of search, you have convinced the traditional ranking systems to put you in the ranking auction.

Once there, a multitude of AI systems work to predict which of the top results is truly the best for the searcher. This matters even more now that Google is starting to use “Personal Intelligence” in Gemini and AI Mode. My top search results will be tailored to what Google’s systems think I, specifically, will find helpful.

Once you start to understand how AI systems search (primarily through vector search), it can be tempting to try to reverse-engineer them. If you optimize based on a deep understanding of what vector search rewards (for example, by measuring cosine similarity), you are working to look good to the AI systems. I'd caution against diving in too deeply here.
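For readers who haven't seen it, here is what cosine similarity actually computes: the dot product of two vectors divided by the product of their lengths. The sketch below is in Python, and the vectors in it are made-up three-dimensional toys chosen only to show the arithmetic. Real embedding models produce vectors with hundreds of dimensions, and nothing here reflects how RankEmbed itself scores pages; `nearest` is a hypothetical helper showing a brute-force nearest-neighbour lookup.

```python
import math


def cosine_similarity(a, b):
    """cos(theta) = (a . b) / (|a| * |b|). 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def nearest(query_vec, page_vecs, k=2):
    """Hypothetical helper: return the k page ids whose (toy) vectors point
    most nearly in the same direction as the query vector."""
    scored = sorted(
        page_vecs.items(),
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [page_id for page_id, _ in scored[:k]]


# Toy, made-up vectors for illustration only.
pages = {"a": [1, 0.1, 0], "b": [0, 1, 0], "c": [0.7, 0, 0.7]}
print(nearest([1, 0, 0], pages, k=2))
```

Note that `[1, 2, 3]` and `[2, 4, 6]` score a perfect 1.0: cosine similarity measures direction, not magnitude. A high score only means a page's vector points the same way as the query's; it says nothing about whether searchers go on to find that page helpful.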

Because these systems are fine-tuned to keep producing the results searchers find most satisfying, looking good to AI is nowhere near as important as actually being the most helpful result. I would argue that optimizing for vector search can do more harm than good unless your content really is the type that users go on to find more helpful than their other options. Otherwise, there’s a good chance you’re training the AI systems not to favour you.

I am once again offering site quality reviews.

For a limited time, I'm opening up site reviews in which I compare your pages against those that Google is preferring and give you loads of ideas for improving.

More information here on my site reviews.

My Advice

My advice is to optimize loosely for vector search. By this I mean: don't obsess over keywords and cosine similarity; instead, understand what your audience wants and make sure your pages meet their specific needs. Is knowledge of Google's query fan-out helpful here? To some degree, yes, because it helps to know what questions users tend to have around a query. But I think the same concerns apply. If you look really good to the AI systems trying to find content to satisfy the query fan-out but users don't agree, or if you lack other characteristics associated with helpfulness compared to competitors, you might train Google's systems to favour you less.

Use headings, not for the AI systems to see, but to help your readers recognize that what they are looking for is on your page.

Look at the pages Google is ranking for queries that should lead to your page, and honestly ask yourself what it is about these pages that searchers find helpful. Look at how well they answer specific questions, whether they use good imagery, tables or other graphics, and how easy they are to skim and navigate. Work to figure out why each page was chosen as among the most likely to satisfy the needs of searchers.

Instead of obsessing over keywords, work to improve the actual user experience. If you make your pages more engaging, paying attention to metrics like scroll depth and session duration, rankings should naturally improve.

Above all, obsess over helpfulness. It can be useful to have an external party look at your content and explain why it may or may not be helpful. If you are interested, for a limited time I am once again offering site reviews in which I do just that: look at your pages, compare them to the ones Google's systems are rewarding, and give you loads of ideas for improving.

Even though I understand that search is built to continually learn and get better at showing searchers pages they are likely to find helpful, I still find myself fighting the urge to optimize for machines rather than users. It is a hard habit to break! Google's deep learning systems are working tirelessly toward one goal, predicting which pages are likely to be helpful to the searcher, so that should be our goal as well. As Google's documentation on helpful content suggests, the type of content that people tend to find helpful is content that is original, insightful and provides substantial value when compared to other pages in the search results.

