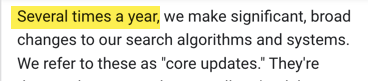

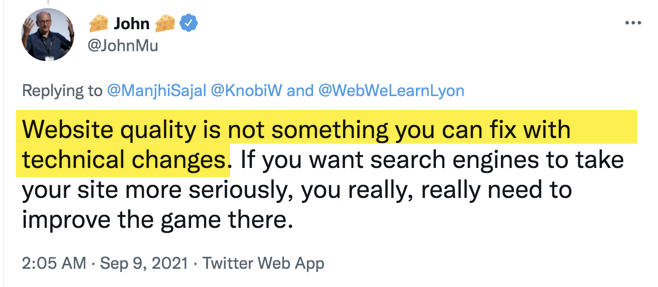

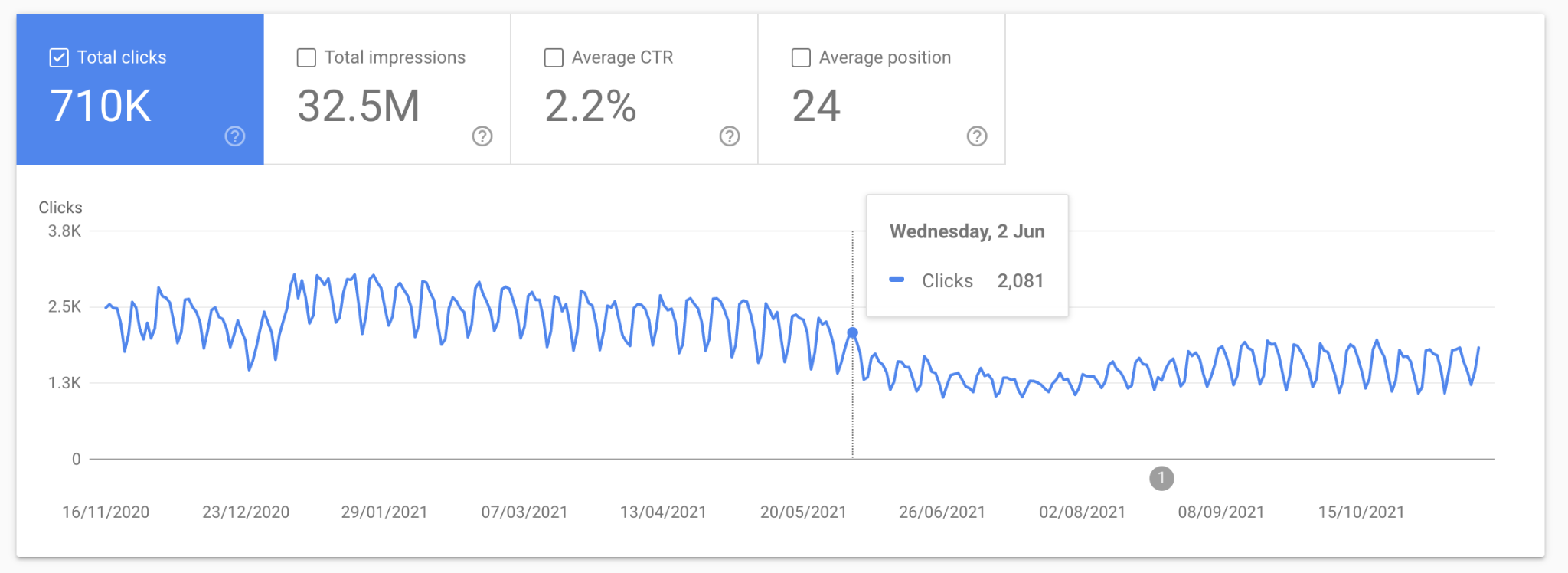

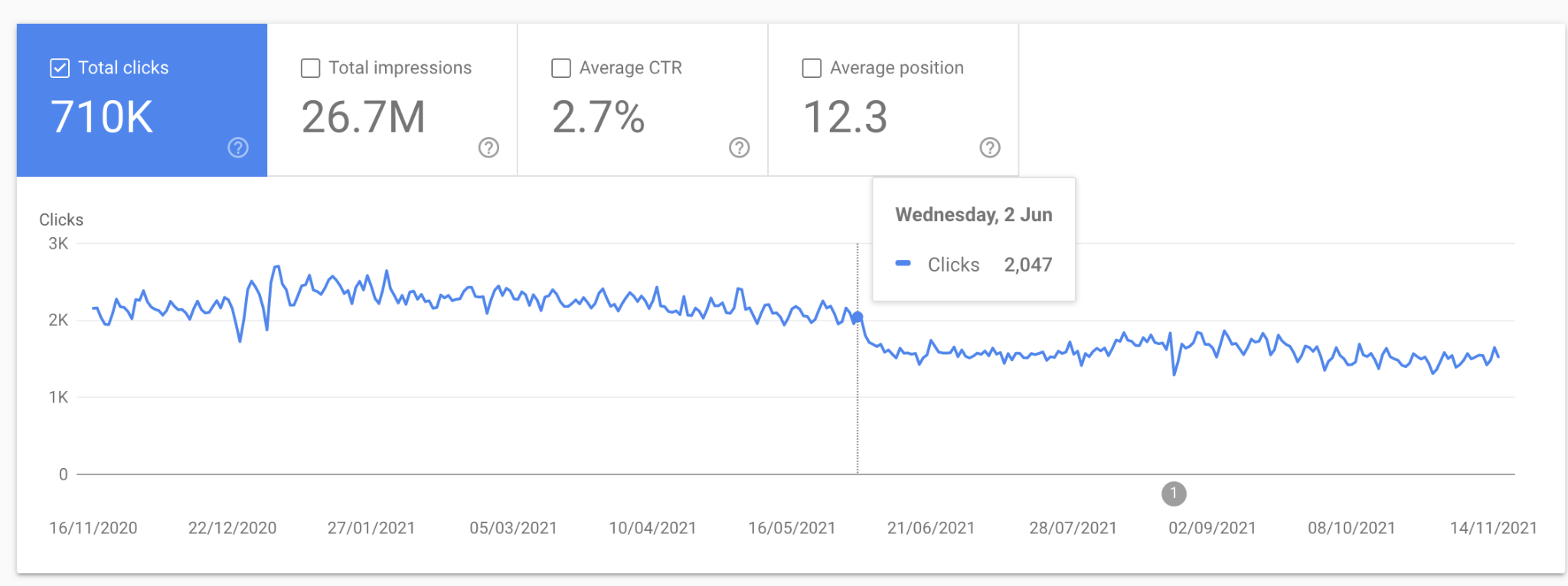

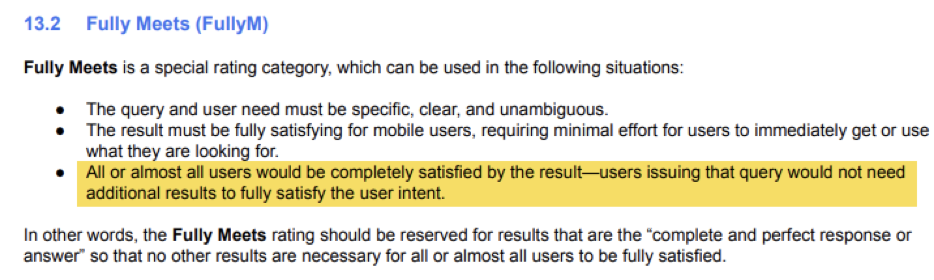

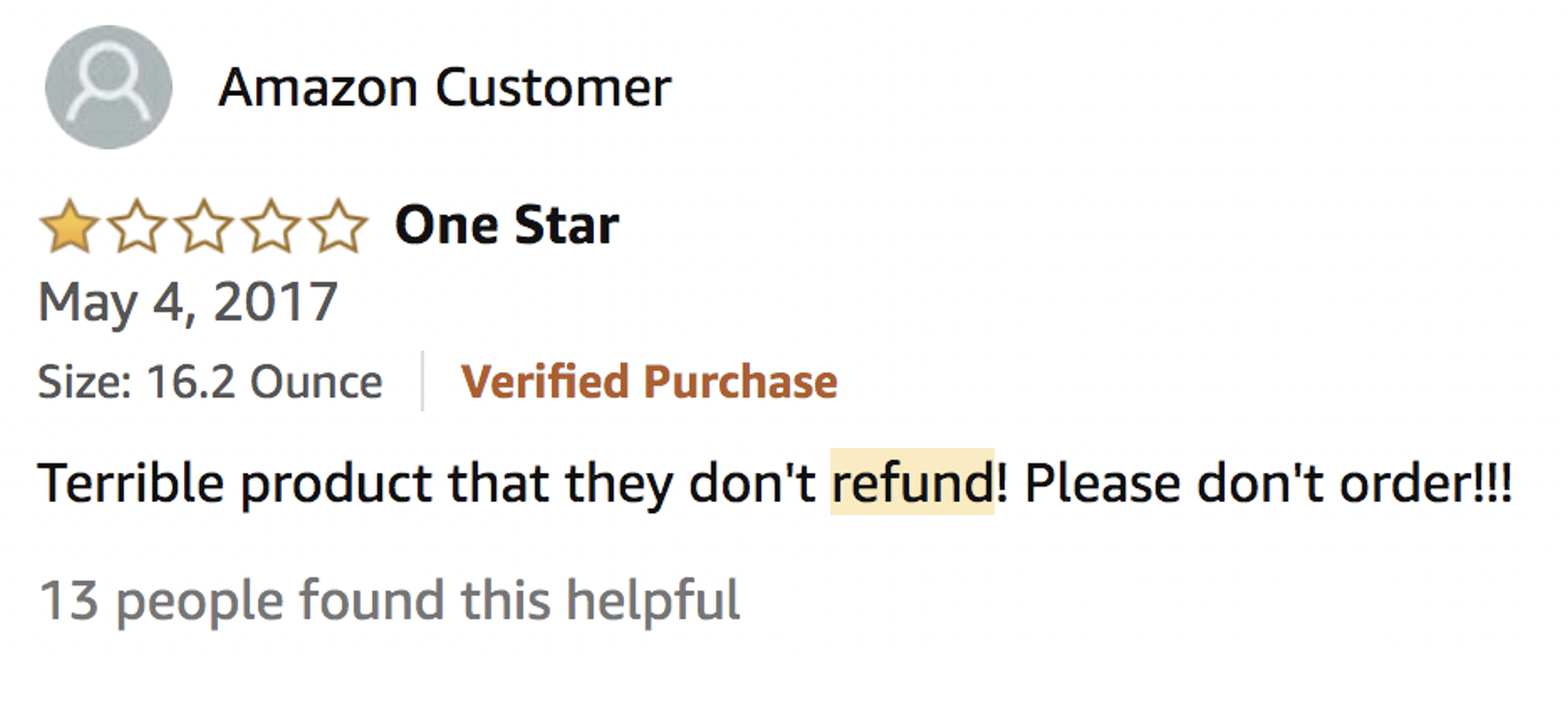

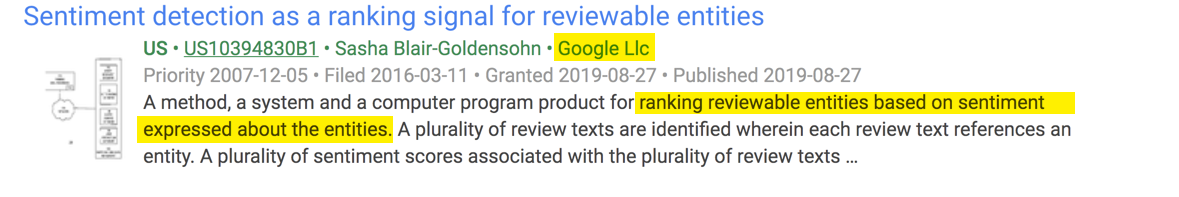

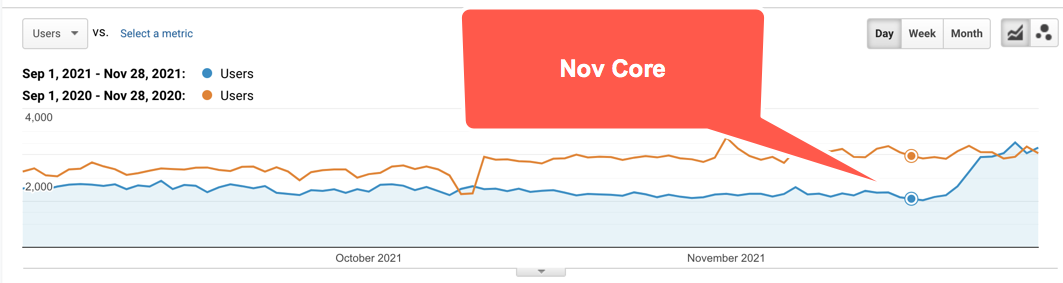

What if user satisfaction is the most important factor in SEO?

Let me see if I can convince you! I’ve shared a bunch in this video and summarized my thoughts in the article below. This is also the second blog post I’ve written on this topic in the last week. There is much more information on user data and how Google uses it in my previous blog […]