Two articles were published this week that spoke of the frustration most of us who have worked in SEO have felt over the dominance of large brands for many search results.

I’d like to share why I think Google has this problem, and why I think we will soon be seeing a change where more truly helpful content ranks.

A site called HouseFresh published an article to complement Glen Allsopp’s piece called “How 16 Companies are Dominating the World’s Google Search Results.” Glen pointed out how a seemingly diverse list of websites that rank well in Google search are actually mostly owned by a small group of well known brands. In a review of 10,000 keywords related to product review sites, Glen found that 16 large networks of brands dominated these results consistently.

This was the same complaint in the article written by Gisele Navarro and Danny Ashton on HouseFresh’s site. Brands that are ranking for product review terms like “best air purifiers for pet hair” include sites like Buzzfeed, Rolling Stone and Forbes, who are clearly not topic experts. And the advice they give about products sometimes contains made-up references to rigorous testing with no supporting evidence, along with recommendations of products that clearly are not the best or, in some cases, no longer even exist.

Meanwhile, Google tells us their AI system called the reviews system is designed to return the type of review content that people find helpful - content that:

- evaluates products from a user’s perspective

- demonstrates knowledge about the product

- provides visual information sharing your own experience

There appears to be a mismatch in what Google says they want to reward and what is ranking!

Why I think Google is ranking big brands and why this could soon change

If you ask most SEOs why large brands dominate the search results, the answer would clearly be links. Glen’s article mentions the word “link” 74 times. It makes sense. As SEOs, we are used to optimizing for Google’s search algorithms, where we have known for years that PageRank is incredibly important to ranking.

Imagine the PageRank that flows through these networks of sites.

I think that for a time, this theory was correct. When PageRank was the cornerstone of Google’s ranking systems, those who could get links were the ones who ranked.

There are many who would say that this is still the case today. After all, if you look at the link profile of any of the brands listed above, very few of us could compete in terms of PageRank.

Over the last decade or more, Google's reliance on PageRank as a signal to help approximate quality has shifted dramatically. I would argue today that while links are a component of the perceived authority of these sites, there are many other signals around the web and in the world itself that indicate they are considered authoritative enough for most people to trust their recommendations.

E-E-A-T is so important.

E-E-A-T matters. It’s related to links, but is not PageRank.

I recently updated my article on E-E-A-T with many new thoughts on E-E-A-T and its importance. This concept is a template through which every query is evaluated by Google. It makes use of many signals from around the web to put together a picture of an entity and what it is known for. It’s evaluated for every query and helps Google understand what type of authoritativeness, expertise and level of trustworthiness users tend to expect for the query they searched. If a query is YMYL, then the results that searchers are most likely to find helpful are going to be those they recognize as demonstrating expertise, authoritativeness and trustworthiness.

In 2019 Google told us that the way they determine E-A-T was by identifying signals that correlate with trustworthiness and authoritativeness. They previously had told us that there are over 200 of these signals that can be used in search. I would expect by now that number is much larger as there are a multitude of things that could be used in machine learning systems to help determine what is likely to be considered authentic. Google’s machine learning systems are continually learning how to best use the signals available to them.

PageRank is the most well known of the signals used to approximate E-E-A-T, but most likely Google is using a myriad of signals available from all around the web to put together something akin to a mental image of a brand, what they are known for, and whatever other components the system decides should be considered as important for the query searched.

The brands pictured in the image above have loads of signals that can indicate to search engines that people tend to recognize them as authoritative. They clearly have E-A-T.

But what about E-E-A-T?

How does Google measure experience?

Google doesn’t really measure experience. Rather, they tell us that people often find that content that demonstrates experience is helpful. And Google's systems are built to reward content that people find helpful.

In 2022 Google taught their quality raters to consider real world experience when they make their assessments on whether a page is helpful or not.

When raters are reviewing the search results that are suggested before a new algorithm change is implemented, if they see pages that demonstrate some degree of real world experience as described in the guidelines, they’ll keep this in mind when deciding whether to mark these as examples where the search engine did a good job at returning a helpful result. Experience may be something that is noted in their page quality grid.

These examples are then used by machine learning systems that adjust their weights slightly as they learn what a good result is, so that they are more likely over time to recommend content that raters would consider a good, helpful response.

It’s not like a rater marks your site as demonstrating experience and then you can rank better. Rather, they give examples of content that are high quality so that over time, the system learns and shifts more and more to give a preference to the type of content that users find helpful.

Google's guidance on creating helpful content relates to experience

Here are some of the questions in their guidance on creating helpful content that relate to creating the type of content that usually can only be created out of first hand experience and knowledge on a topic:

- Does the content provide original information, reporting, research, or analysis?

- Does the content provide insightful analysis or interesting information that is beyond the obvious?

- If the content draws on other sources, does it avoid simply copying or rewriting those sources, and instead provide substantial additional value and originality?

- Does the content provide substantial value when compared to other pages in search results?

- Does the content present information in a way that makes you want to trust it, such as clear sourcing, evidence of the expertise involved, background about the author or the site that publishes it, such as through links to an author page or a site's About page?

- If someone researched the site producing the content, would they come away with an impression that it is well-trusted or widely-recognized as an authority on its topic?

- Is this content written or reviewed by an expert or enthusiast who demonstrably knows the topic well?

- Does the content have any easily-verified factual errors?

Here’s the wild thing though. Even though Google’s systems are built to reward content that looks like high quality when judged by these questions, Google has no idea whether your content really is helpful.

Google does not know if your content is factual.

Google does not know if you’ve actually tried a product.

Google does not know if your page is truly unique, original and substantially more valuable than other pages on the web.

They don’t measure these things.

Rather, they tell us that these are the types of things that users like. And their systems try to produce results that users like.

I believe that the main driver in rankings today is whether or not a search provides a satisfactory result for a searcher. That is something that Google can approximate well. They have all sorts of signals they can use to help determine if a search has been satisfied: clicks, returns to the search results, hovers, scrolls, and more. Each time a search has been satisfied, that sends a signal that essentially says, “Good job algo - you satisfied the search!”. The goal is to do more and more to learn to satisfy searches.
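To make that idea a little more concrete, here is a toy Python sketch of what "learning from satisfied searches" could look like in its simplest form. Everything in it is an assumption for illustration: the `satisfaction_rates` function, the "satisfied" and "returned" outcome labels, the example URLs and the smoothing values are all made up, and nothing here reflects how Google actually computes anything.

```python
from collections import defaultdict

def satisfaction_rates(events, prior_good=1.0, prior_total=2.0):
    """Estimate, per URL, how often a visit looked like a satisfied search.

    events: iterable of (url, outcome) pairs, where outcome is the
    hypothetical label "satisfied" (the searcher stayed) or "returned"
    (the searcher went back to the results page).
    """
    good = defaultdict(float)
    total = defaultdict(float)
    for url, outcome in events:
        total[url] += 1
        if outcome == "satisfied":
            good[url] += 1
    # Smooth the rate so a page with a single visit is not scored 0% or 100%.
    return {
        url: (good[url] + prior_good) / (total[url] + prior_total)
        for url in total
    }

# Made-up interaction log for two pages answering the same query.
events = [
    ("bigbrand.example/air-purifiers", "satisfied"),
    ("bigbrand.example/air-purifiers", "satisfied"),
    ("bigbrand.example/air-purifiers", "returned"),
    ("smallsite.example/air-purifiers", "returned"),
]
rates = satisfaction_rates(events)
for url, rate in sorted(rates.items(), key=lambda item: item[1], reverse=True):
    print(f"{url}: {rate:.2f}")
```

Notice that the sketch never reads the pages themselves. It only counts reactions, which is why a familiar brand that people tend to stick with can score well even if a lesser-known page is more accurate.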

Google doesn’t actually understand whether content is accurate

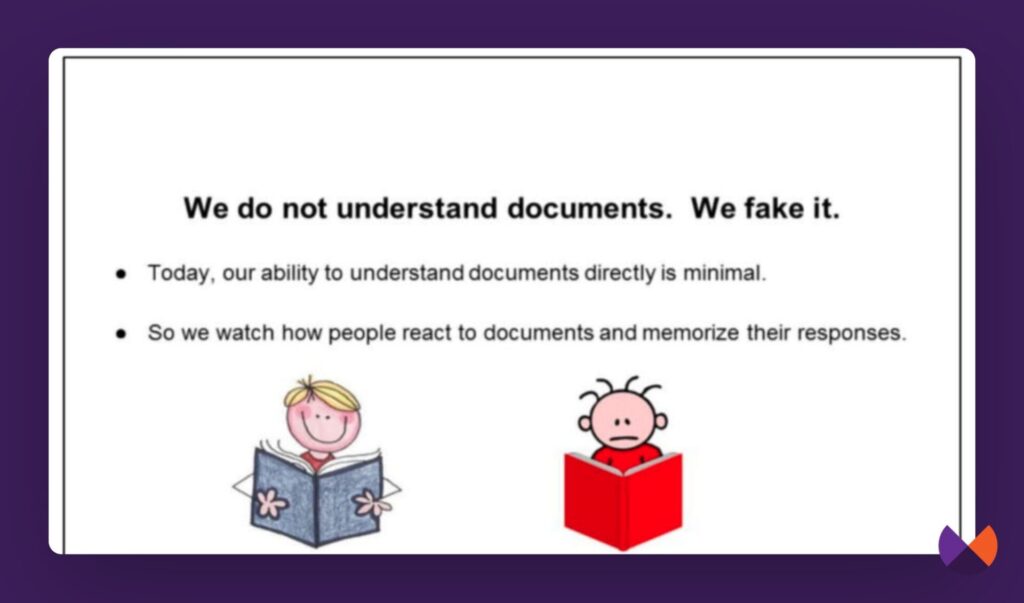

Evidence from the DOJ vs Google antitrust trial includes a presentation called “Q4 Search all hands” from 2016, in which we learn that Google does not understand documents. They watch how people react to documents and memorize their responses.

They say, “Beyond some basic stuff, we hardly look at documents. We look at people. If a document gets a positive reaction, we figure it is good. If the reaction is negative, it is probably bad. Grossly simplified, this is the source of Google’s magic.”

Can you see why Forbes, Buzzfeed and other big brands can rank well? If people generally like content and find it helpful, then search is doing a good job. Now, there's more to this. If content is consistently inaccurate over time, that really should be reflected in user actions, and the system should figure things out. Sometimes, though, Google is going to recommend content that is popular but not necessarily the best. Because it comes from a place users generally trust as authoritative, that's treated as ok.

Google also says in that presentation that when this fake understanding fails, they look stupid.

Going back to the 2019 Google document that tells us how they fight disinformation, they say that they recognize that some topics are more prone to disinformation than others. They design their systems to prefer authority over other factors when a time of crisis is unfolding. I would argue that when Google determines that trust is important, they often will defer to ranking authoritative sites over some that perhaps are more deserving.

Product reviews, which convince people where to spend their money, are an excellent example of this.

In a space that is filled with recommendations that are likely to be influenced by affiliate commissions and other economic interests, Google prefers to err on the side of caution in who they recommend. In many cases the system likely prefers authority over experience.

Why?

Google doesn’t know that Forbes has recommended a product that no longer exists, or isn’t really the best choice if you were to ask a true expert on this product. But, they likely have loads of signals that indicate that when people search for a product review and land on a Forbes page they tend to trust it and engage with it. And they have other signals that indicate that people tend to trust the content. As AJ Kohn recently wrote, the results are not perfect, but they are “goog enough.”

I believe that it is true that the SERPs were at one time shaped by PageRank, resulting in big brands dominating for many queries. Over time as the importance of PageRank faded and other signals were used to form E-E-A-T evaluations, the SERPs remained mostly the same. Why? Because users were, for the most part, satisfied with them.

How does this change?

One thing that I am realizing about machine learning systems is that they take time to train and to improve. I believe that advancements Google made this week will speed things up!

Google first gave us the “quality questions” with the release of Panda, 13 years ago. Imagine. 13 years of moving incrementally closer to a web where the results are reliable and helpful. It’s only been in the last year or so that Google introduced this idea of including experience in quality. And they clearly still have more learning to do.

It’s easy to give up on Google, especially when you look at some of the things that are ranking today. (I would argue though that for me personally, I usually can find a satisfying answer for most queries I search.)

Here’s why I am excited.

Gemini 1.5 drastically improves Google's AI capabilities

Gemini 1.5 was announced this week. In my paid newsletter this Friday I’m writing up my understanding, after studying the Mixture of Experts paper, of why this model marks a dramatic and significant improvement in Google’s AI capabilities. Gemini 1.5 uses a new type of MoE architecture. It’s not just a neural network. It’s a whole system of neural networks, where each network is an expert in something that will help the system better understand its training data. The system learns how to create these expert networks and then they each learn and improve on understanding their task. Not only does each expert learn, but the whole system also learns to optimize which experts are used for each query. This AI architecture is fast and saves Google loads of time and money.
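For readers who like to see the idea in code, here is a minimal Python sketch of the general Mixture of Experts routing pattern: a small "router" scores every expert for a given input, and only the top-scoring experts actually run. This is a generic illustration and not Gemini's architecture. The `moe_forward` function, the three toy experts and the hand-picked `gate_weights` are all assumptions for the example; in a real model the experts and the router are neural networks whose weights are learned during training.

```python
import math

def softmax(values):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_weights, top_k=1):
    """Route input x to the top_k experts and blend their outputs."""
    # Router: one score per expert (dot product of x with that expert's gate vector).
    scores = [sum(w * xi for w, xi in zip(ws, x)) for ws in gate_weights]
    # Keep only the indices of the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Normalise the chosen scores so the blend weights sum to 1.
    weights = softmax([scores[i] for i in chosen])
    # Only the chosen experts run, which is where the compute savings come from.
    output = sum(w * experts[i](x) for w, i in zip(weights, chosen))
    return output, chosen

# Three toy "experts" (plain functions standing in for neural networks).
experts = [lambda x: sum(x), lambda x: max(x), lambda x: min(x)]
# Hypothetical, hand-picked router weights; a real system would learn these.
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

out, chosen = moe_forward([2.0, 0.5], experts, gate_weights, top_k=1)
print(out, chosen)
```

With `top_k=1`, only one of the three experts does any work for this input. That sparsity is the reason this kind of design can be cheaper to run than a single network of the same total size.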

Gemini 1.5 is a substantial improvement in Google's AI capabilities.

It will likely learn and improve much more quickly than AI systems have to date. We are about to see an exponential increase in AI’s capabilities to learn.

What can be done with a MoE system is terribly exciting. But it is almost beyond comprehension what the possibilities are when we consider that Gemini is not only trained to understand the connections between the text it has been trained on, but also video, images and audio.

In 2022, a month before his passing, Bill Slawski wrote about how a MoE model could allow Google to train on extremely large datasets such as the entirety of YouTube. That day is now here.

What this means is that with Gemini 1.5, Google suddenly got better and faster at using AI in their systems and in understanding the connections between things in video and imagery. I really think this has to result in improving the systems that help identify which results are likely to be considered helpful by a searcher. Especially when we consider that this is the number one goal of the ranking system!

I believe that as the system continues to learn what is helpful, reliable and also demonstrates real world experience, we will see more and more truly interesting, original and uniquely helpful content ranking from smaller sites.

I expect we will see more and more “hidden gems” ranking soon - truly helpful content from people who are not big brands, but rather topic experts and enthusiasts.

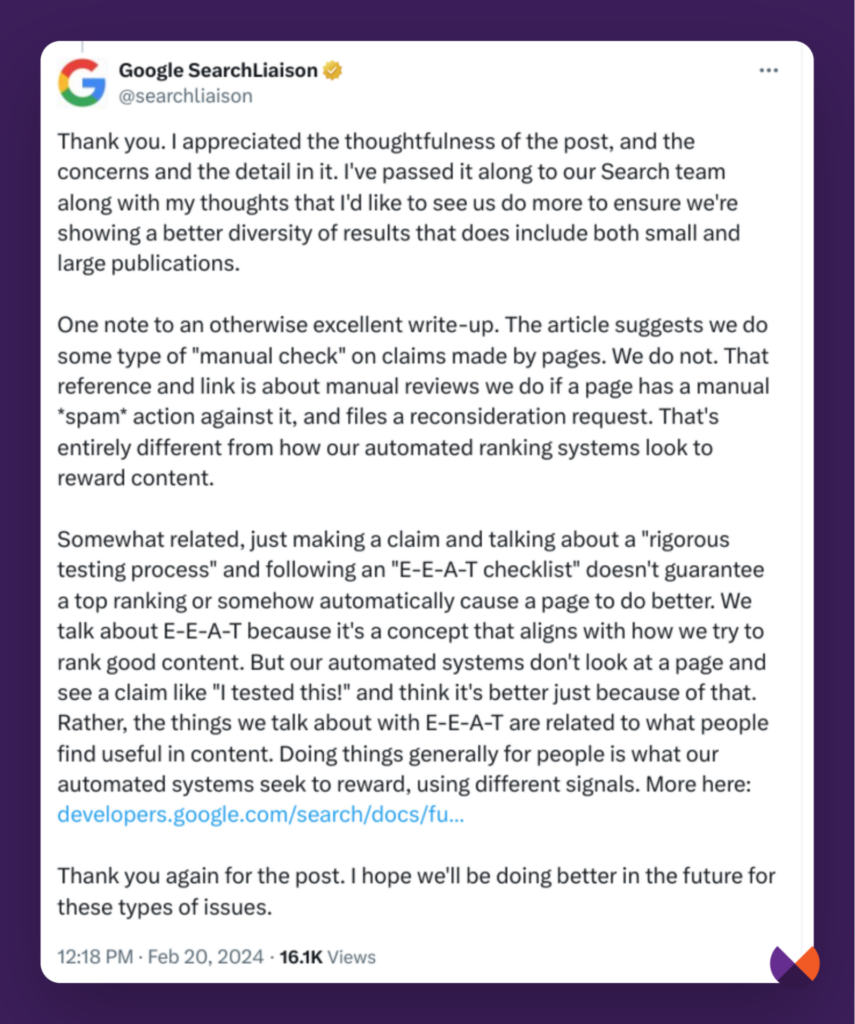

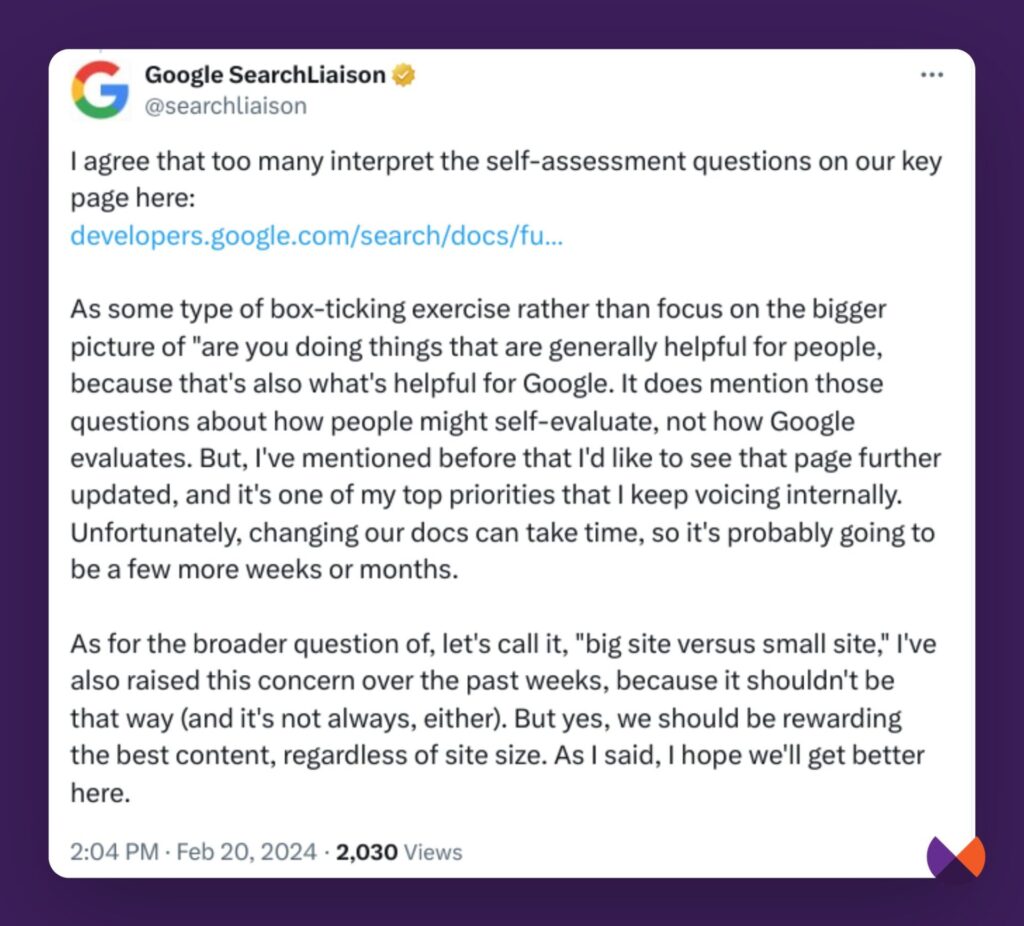

Danny Sullivan chimes in

Google’s Search Liaison added his thoughts to this discussion.

A few things stood out to me from Danny’s reply:

- Google’s systems don’t look at a page and see a claim of testing a product and think it’s better because of that.

- The things Google talks about with E-E-A-T are related to what people find useful in content.

- “Doing things generally for people is what our automated systems seek to reward, using different signals.”

He also added some encouraging words regarding big brands.

“As for the broader question of, let’s call it, ‘big site versus small site,’ I’ve also raised this concern over the past weeks, because it shouldn’t be that way.”

Danny agrees that Google should be rewarding the best content, regardless of site size. He has passed these concerns on to Google and believes that they will get better here.

My prediction

I think we are in for more updates to the helpful content system, and likely core updates as well now as Google uses their new capabilities provided by Gemini 1.5. I expect we will see a shift where Google’s systems are better able to recognize and reward content that truly is helpful and unique.

However, I think that it is going to take time for people to start truly creating great content. It’s really hard for us to get rid of our SEO mindset and put ourselves in the shoes of our searchers.

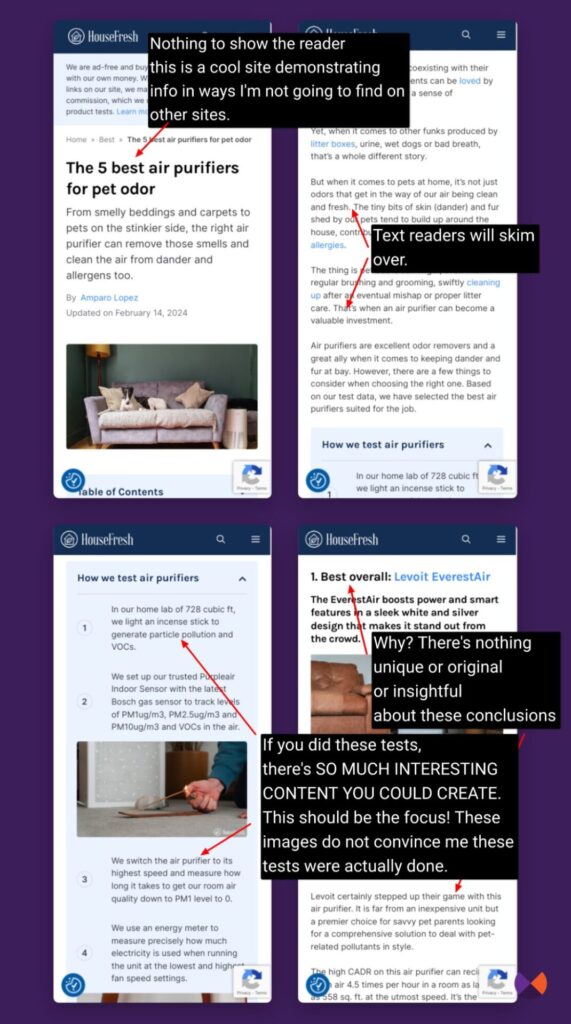

A quick review of HouseFresh’s site re helpfulness

Let's put ourselves in the shoes of someone who is considering buying an air purifier for pet odor. They're not really looking to read a full article. Rather, I'm betting they want to skim and learn a few things.

When I land on HouseFresh’s page on air purifiers for pet odor, I see a lot of attempts at demonstrating experience. But it’s really not evident to me as a searcher who’s trying to quickly figure out what products are best here that this is a blog that’s speaking to an audience out of a passion for making houses fresh and studying air purifiers rather than an affiliate site created for the opportunity that exists to make money online.

It is really hard for us as SEOs to put ourselves in the shoes of a searcher and truly focus on meeting the needs that caused them to come to our pages rather than on what it is that Google’s algorithms reward. I do believe that this article was born out of experience. But I’m not convinced the average searcher would find it substantially more valuable than what else exists online.

A few things that stood out to me:

Now, I think it certainly is possible that this site tested products and demonstrates more experience than the big brands that are ranking.

However, in order to convince Google that you are offering content that users are consistently likely to find helpful, it’s not Google you need to convince. It’s users!

Why is HouseFresh not ranking for their blog post?

The blog post I linked to at the start of this article that HouseFresh wrote to complain about the dominance of big brands in the SERPs is currently ranking behind Reddit posts when you search for it. Many are flagging this as a failure of search once again.

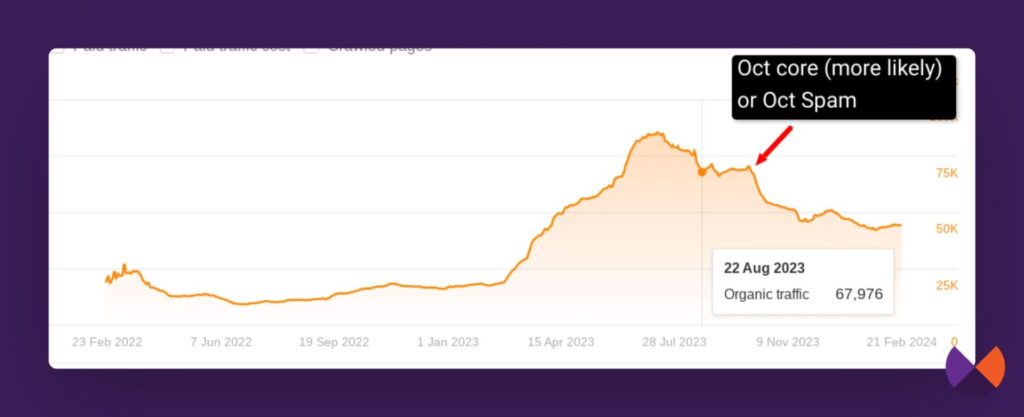

I have two thoughts on this. First, according to Ahrefs' data, it looks like this site has issues connected with both the August and October Core updates. This will impact rankings across the board.

But secondly, perhaps the system is predicting that people are likely to find the discussion on the article more interesting than the article itself? I know it doesn't seem right. But this is the point of search - to predict what people are likely to want to engage with.

This is probably a good place to mention my helpful content workbook. It will help you assess your site in the eyes of Google's guidance on helpful content. Buy it before I've published my next book (oh it is coming soon) and you will receive my book on how AI has changed Search for free once published.

On a goofy note, there’s been this wild trend where when I travel, Google runs big updates. In recent years, because I haven’t traveled much, Lily Ray took over this silly honor. Well, in early March both Lily and I will be attending Pubcon in Vegas. I’m teaching a masters group advanced workshop on understanding and succeeding in Google’s AI driven ranking systems. Perhaps we will have some updates to discuss? Perhaps we will start to see more cases where sites that have focused on creating helpful content that meets the needs of their audience are better able to outrank big brands.

Comments

If Gemini is what’s supposed to help Google understand content then god help us.

As always, great insights. These are the same thoughts I’ve been having but it’s like you organized everything in my mind and put it succinctly. I bookmarked the page to review regularly.

I just take issue with your criticism of HouseFresh’s competition “air purifier for pets” post. You said there is nothing demonstrating they have a cool site and mention they could create so much interesting content with their testing, but in the same breath, explained “but it’s really not evident to me as a searcher who’s trying to quickly figure out what products are best here that this is a blog that’s speaking to an audience out of a passion”. I mean, which is it – if you’re a searcher QUICKLY trying to find the best product, then it really doesn’t matter what a review says. Google should show Amazon results with the most reviewed and highest-rated products in the category, and call it a day. The issue is, all publishers lose there.

I am reminded of how Steve Jobs didn’t give smartphone users what they were asking for, he/Apple gave them what was best for them and the things they didn’t know they needed or wanted. Google’s search results are incredibly responsive and, to be honest, maybe it’s the realist in me, but most people/searchers are kind of dumb. The average search user doesn’t know any better so, in many cases, they’ll absorb whatever information is presented to them. I’m not sure how to address this in search but deferring to the biggest media publishers on the internet just seems like a cop out.

Anyway, back to House Fresh and sites struggling to convey trust, how would YOU suggest a publisher quickly demonstrate to a reader “hey, you can trust me, I know what I’m talking about” other than building links?

Thanks, Marie! =)

Imagine if you were looking to buy an air purifier and you landed on a page from HouseFresh that, instead of looking the same as everyone else’s, showed really cool tests and studies and pictures and videos and examples of tests they did along with their stinky pets. This could be so much more helpful.

The problem is, we don’t have a precedent of people creating this kind of content. Which is necessary because this type of content is expensive and takes extensive effort.

I understand and appreciate that approach, but if Google is going to go ahead and rank crappy big media pages with their summarized “reviews”, how does it become feasible for smaller companies to invest that kind of money and resources into building those pages? Especially when, after it all gets published, the long term results just aren’t there.

The WireCutter is often used as the quintessential publisher doing it right, but what about this page: https://www.nytimes.com/wirecutter/reviews/best-beard-trimmer/? This article probably brings in over $1,000,000 in revenue per year and there isn’t a single image showing any kind of testing. In the “How We Tested” section, there is this tidbit: “For previous versions of this guide, we solicited the help of professional barbers to test the contenders. Following that, we tested trimmers with Wirecutter staffers who have different textures and styles of facial hair. We retested existing picks against 13 additional trimmers, seeking answers to these questions.” Above this, there is a picture of what seems to be the author/tester sitting in a barber chair at a local barbershop.

At some point, Google and, by extension, the SEO industry needs to look in the mirror and stop placing all the blame on small to medium-sized publishers who just aren’t “establishing enough EEAT”.

For nearly a decade, I built, grew and managed 4 websites that earned over 200M visits and nearly $1M/year, having survived numerous algo updates through the years. Suddenly, the HCU says all my sites are low-quality and unhelpful, and I’ll be lucky to crack $150K this year. In Google’s eyes, makes you wonder how I could have been doing it all wrong for so long and still had loyal, direct visitors make up about 15% of my readership.

I do think we will see a shift where Google can better recognize and reward content that truly is helpful like they describe. Gemini 1.5 gives Google even more capability to do that.

Regarding the beard trimming page – it likely ranks because it’s one that people tend to engage with and find satisfying. When Google’s documentation tells us things that we should do to write good review content, they’re not saying these are the rules. Rather, they’re saying, “This is the type of thing people tend to like.”

So, if you’re the NYT, it doesn’t take much to convince people to click on this article. And also people likely engage with it and send Google other signals that it is satisfying.

But, what if you’re not the NYT? I had a quick look and really couldn’t see a page that I felt offered significant value beyond what I’d get if I clicked on the first few authoritative results. EVERYONE is essentially writing the same article, following the same blueprint – “We tested x devices. Here’s what we found” followed by an article that describes briefly that they tested them, but um…no real interesting videos or images or super interesting info from the tests.

I did not see any (they may exist – I just had a quick look) pages that were truly unique, original and insightful. And boy, if I had a website that tested beard trimmers I could think of all sorts of interesting content that could be created on that topic that would help far more than the cookie cutter type of articles that currently exist.

Hey Marie, one thing that would be brilliant to have in your ace blog posts and newsletters – would be a practical ‘to do’ type section, bullet pointed at the end. So what actionable takeaways does this latest update/topic/news mean for us SEOs 🙂

plus a couple of key points perhaps.

I deal with a bunch of Results where google is just wrong, and to rank I had to mention the wrong part as well.

I basically phrased that wrong thing as competitors did, and then I corrected it. Google is not smart enough to see my negation and ranked me on page 1 finally. But I had to use the wrong phrase 1-1. Still my page is just one, from 2 mentioning the truth (the other one is the owner of the product) – hundred thousands others saying something completely wrong. Guess what ChatGPT and bard aka Gemini are saying is true, if even google lies in hundreds of featured snippets and people also ask boxes. (You can ask for this in a bunch of ways)

Just writing the truth, even with linking to the only source that should know the truth saying the same (and we are in contact – so I really know), was helping to rank with the truth. It’s the owner – who should be more correct?

And it’s still not Pos1, just page 1. Since wiki is not talking about it, google seems to take what the majority is saying. People who follow and get rejected in this case will not google it again. This is just one thing I am dealing with – but I google so many things, the more you get into a niche, the more lies you get. But people believe in Google as a truth machine.

My topic is not niche at all, and still wrong if enough pages just don’t know. In this case – it is not experience, expertise, authority and trust – It’s just “say the same stuff as everyone does” – no matter if true. Or say what Google believes is true. This approach is a good idea – but not working (at least in German).

This is hard to answer without studying the specific content. But, I wanted to add something important here. Google cannot tell if your content is true or accurate. They can only estimate based on the information that exists on the web and how people interact with it. EEAT plays a role in some of this. For example, the opinions of those seen as authorities likely matter more. It’s hard to say much more without seeing the specific example.