The DOJ vs Google trial has finally wrapped up. The closing document is full of interesting insight into how Google’s algorithms work. While the final verdict of the trial is a separate story, the documents released gave us an unprecedented look inside Google's ranking systems.

In this article, I’ll share what we learned. It is wild to see how much information they gave us about Google's ranking signals!

Part 2 of this article on what we learned about Google's AI systems will be out in a few days.

Google improves by learning what users interact with. Every page is stored in the index along with signals such as clicks, queries and spam score. While PageRank remains a signal, it is just one of many, and in fact, is nowhere near as important as information stored about the webpage itself. AI Models such as RankEmbed BERT are fine tuned by user preference and quality rater scores. User data also determines how often Google crawls your pages.

Here is the court document if you'd like to read it yourself.

1. Google improves by learning what users interact with

Google says the key to their improvement is learning from what users interact with. Here are some quotes from the document:

"Learning from this user feedback is perhaps the central way that web ranking has improved for 15 years."

"Every [user] interaction gives us another example, another bit of training data: for this query, a human believed that result would be most relevant."

"Every time someone does a search, we give out some hopefully useful search results, but we also get back crystal-clear user feedback: this search result is better than that one."

"... it gives you a lot of information [about] which results are actually good or not, and you can memorize them."

Marie’s Thought: Our goal should be to make content that people find helpful. The guidelines Google gives us in their helpful content documentation are not a checklist of ranking factors, but rather, general advice on what people tend to find helpful. The actions of users teach Google which results are useful.

2. Every document in the index has a DocID

Each document is given a DocID. Each DocID has a set of signals, attributes or metadata. These signals include the page's popularity from user clicks, its quality and authoritativeness, crawl data, and an assigned spam score. While the DocID is a critical internal component for Google, it's not a metric that is publicly visible to website owners.

Note - the DocID has been a core component of Google's index since the beginning of Search. Google’s original PageRank paper says, “Every Web page has an associated ID number called a docID which is assigned whenever a new URL is parsed out of a Web page.” Over the years, Google has expanded on what is included in the DocID.

There is a map called a DocID to URL map.

The signals in the DocID include:

- popularity as measured by user intent, clicks, and feedback systems, including Navboost and Glue.

- quality measures including authoritativeness

- the time the URL was first seen

- the time the URL was last crawled

- spam score

- device type flag

- any other specified signal the [Technical Committee] recommends to be treated as significant to the ranking of search results

3. Ranking signals include clicks, content and queries

Google assigns a ranking score to each page and then, they rely on ranking signals to differentiate them.

Some of the Raw signals include

- the number of clicks

- the content itself

- the terms used within a query

Marie’s Thought: This doesn’t mean that CTR is a direct ranking factor! Rather, clicks a page gets are stored and can be used in multiple ways. They are some of the signals that can be used in systems that help determine rankings. For more, see my post on Navboost. While simply getting more clicks isn't a magic bullet, earning clicks from users who are genuinely satisfied with your content is a powerful, positive signal.

Then there are deep learning models that generate other signals such as the RankEmbed BERT signal.

Top level ranking signals include:

- quality

- popularity

- signals derived from deep learning models like RankEmbed BERT

All of these signals can be aggregated to create even more signals. And together these are all used to generate a final score for ranking.

4. Glue is a query log that records the activity of searchers

Google Glue is a big huge table of user activity. It collects the following:

- The text of the query

- The user’s language, location and device type

- What appears on the SERP (This includes the websites and SERP features.)

- What the user clicked on or hovered over

- How long they stayed on the SERP

- Query interpretations and suggestions including spelling correction and salient query terms

Google is learning from every search. The systems predict which results users will find helpful, including not just websites, but other SERP features such as AI Overviews, maps listings, People Also Ask results and more. Then they measure how users interact with those results. The machine learning systems continue to learn so that they can get better at predicting what searchers are likely to find helpful.

5. RankEmbed BERT is an AI ranking model trained by the quality raters & user activity

RankEmbed BERT is a ranking model that uses 70 days of search logs and also the scores of the quality raters who follow a detailed set of guidelines to assess a page's expertise, authoritativeness, and trustworthiness to measure the quality of Google’s organic search results. (This is why I created a workbook to help you assess your site like a quality rater. It helps you better align with what Google has taught the raters to assess as high quality.)

It is a deep learning system that has a strong understanding of natural language. The document says, “This allows the model to more efficiently identify the best documents to retrieve, even if a query lacks certain terms.”

RankEmbed is trained on 1/100th of the data used to train earlier ranking models, yet provides higher quality search results.

This system also uses information about each query in its calculations, including the salient terms that Google derived from the query and the web pages that were recommended to the user.

Marie's thought: In the Quality Rater Guidelines, the raters are told that "Ratings are also used to improve search engines by providing examples of helpful and unhelpful results for different searches." It's important to remember that a rater doesn't directly demote your site. Instead, their collective feedback teaches the AI models what 'good' and 'bad' results look like, which in turn might influence how your site is ranked in the future.

Google's deep learning systems are far too complicated for us to completely understand, but I think the idea is relatively simple. Similar to how a language model like ChatGPT predicts what words to say, a ranking model predicts which pages to rank. We know they store the query searched, the terms that are important in that query and also what actions users take. Therefore, it's logical to assume that those actions and also the ratings of the quality raters help the model know whether their predictions were good. If users show more signs of satisfaction and if raters' scores improve, then the algo is working well. If not, then the Google engineers need to work on improving things.

6. The webpage itself is more important than PageRank

This was the most interesting thing in the whole document in my opinion! We know that PageRank used to be the most important signal used as an input to your quality score. I thought it was interesting the wording they used in this document, “PageRank…is a single signal relating to distance from a known good source.”

Today, PageRank is just one of many signals used in ranking. In fact, it’s not the most important. Google’s expert in computer science and information retrieval, Dr. James Allan said, “Do you understand that most of Google’s quality signal is derived from the webpage itself?” That is quite the statement.

Marie’s Thought: I think this is incredibly important. Yes, links are a component of quality. But they pale in comparison to the signals the website itself sends. "Most of Google's quality signal is derived from the webpage itself." The signals in the section above this one are likely the most important - especially clicks on that page, and signals that come from deep learning models like RankEmbedBERT which are learning continuously to predict which pages are likely to be the best result for a searcher’s query.

7. User data determines how often your pages get crawled

I did not know this! The trial documents say, “Google has continuously deployed user data to, among other things, determine which websites to crawl, in what order, and what frequency.”

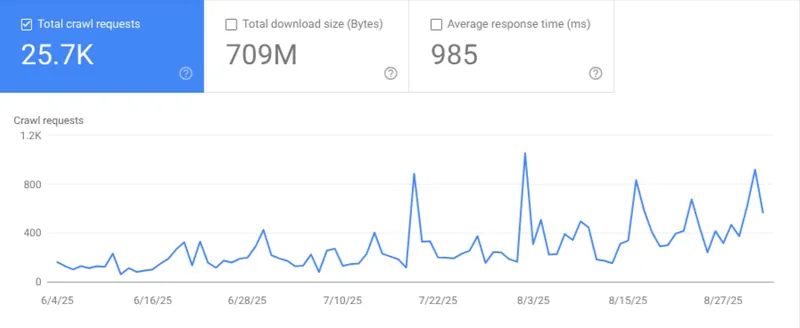

Marie’s Thought: You can check your crawl stats in Google Search Console under Settings → Crawl stats. Is Google regularly coming back to crawl your site?

If Google is crawling less often, it may be a sign that you need to make quality improvements, or do more to develop an audience that hungrily seeks out your content.

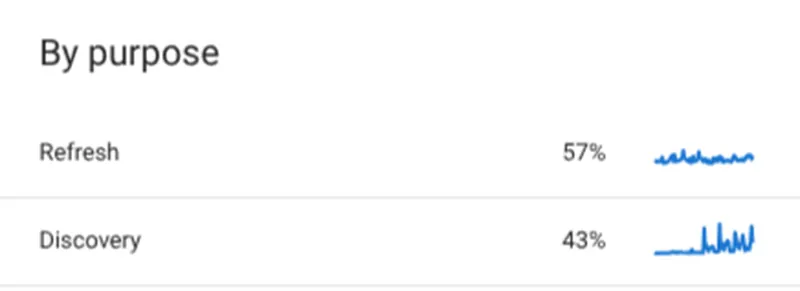

I think it’s super interesting to look at the crawl-purpose stats. They show you how many resources are spent crawling your old content vs. discovering new content you have produced. My personal thought is that if Google is crawling your site often to discover new content, this is a really good thing.

8. If you are not getting crawled it might be because of your spam score

Crawl frequency is determined by quality and popularity signals. Every site has a spam score which is considered when it comes to crawling as well. Boy I wish we could see our site's spam score. If you're not sure if you're doing things that could be considered spam by Google, my spam workbook may help.

9. Chrome data is used in ranking

Oh boy we have speculated on this a lot! Here is a conversation I had with Cindy Krum discussing whether signals from Chrome were used in ranking decisions. The trial did not go into great detail here, but said, “Two exhibits suggest that popularity is based on ‘Chrome visit data’ and ‘the number of anchors.’”

Marie’s Interpretation: It seems to me that popularity, which is just one of the signals used in ranking, can come from links or from people actually visiting and using your site. I think the latter is more important. Which is a better representation of whether people are finding a page more helpful - whether it has links? Or whether people are actively engaging with the page, submitting forms, scrolling, and buying products? To be clear, we don’t know that Google measures those activities in Chrome, but I don’t see why they would not. They would not likely be direct ranking factors. Rather, they would all be signals that can be used to help determine which pages searchers are finding useful.

Phew! That was a lot! There is more to come. In the next few days I'll share more in my blog on what we learned in the trial about Google's AI systems, including where the training data comes from - most of it is not the common crawl - about MAGIT that is used to train the text in AI Overviews and about Google Fast Search. Sign up for my newsletter if you'd like to be notified when it is live.

If you liked this, you'll love my newsletter!

Or, Join us in the Search Bar for real time news on SEO and AI.

Marie

More interesting Reading from my blog:

How Google determines relevancy and helpfulness

How Google’s Helpful Content System Has Radically Changed Search

Comments are closed.